https://youtu.be/aywZrzNaKjs?feature=shared

LangChain Explained in 13 Minutes | QuickStart Tutorial for Beginners

langchain , what is it ?

why should you use it ?

and how does it work ?

let's have a look .

langchain is an open source framework that allows developers working with ai to combine large language models like gpt-4 with external sources of computation and data .

the framework is currently offered as a python or javascript package , typescript to be specific .

in this video we're going to start unpacking the python framework and we're going to see why the popularity of the framework is exploding right now , especially after the introduction of gpt-4 in march 2023 .

to understand what need langchain fills , let's have a look at a practical example .

so by now we all know that chatgpt or gpt-4 has impressive general knowledge .

we can ask it about almost anything and we'll get a pretty good answer .

suppose you want to know something specifically from your own data , your own document .

it could be a book , a pdf file , a database with proprietary information .

langchain allows you to connect a large language model like gpt-4 to your own sources of data .

and we're not talking about pasting a snippet of a text document into the chatgpt prompt .

we're talking about referencing an entire database filled with your own data .

and not only that , once you get the information you need , you can have langchain help you take the action you want to take .

for instance , send an email with some specific information .

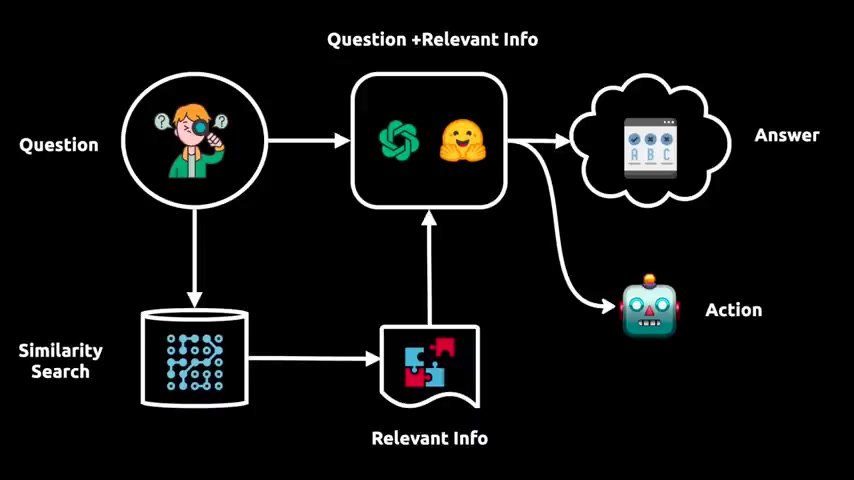

and the way you do that is by taking the document you want your language model to reference and then you slice it up into smaller chunks and you store those chunks in a vector database .

the chunks are stored as embeddings , meaning they are vector representations of the text .

this allows you to build language model applications that follow a general pipeline .

a user asks an initial question .

this question is then sent to the language model and a vector representation of that question is used to do a similarity search in the vector database .

this allows us to fetch the relevant chunks of information from the vector database and feed that to the language model as well .

now the language model has both the initial question and the relevant information from the vector database , and is therefore capable of providing an answer or taking action .
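
The pipeline just described can be sketched without any external service. This is a minimal illustration, not LangChain code: the word-count "embedding", the sample chunks, and the function names `embed`, `retrieve`, and `build_prompt` are all assumptions made up for this example. A real application would use an embedding model and a vector database instead.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list, k: int = 1) -> list:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context: list) -> str:
    """Combine the retrieved chunks and the question into one prompt string."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {question}"


# Hypothetical document chunks standing in for "your own data".
chunks = [
    "Invoices are due within 30 days of the billing date.",
    "The office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
]
question = "When are invoices due?"
prompt = build_prompt(question, retrieve(question, chunks, k=1))
print(prompt)  # this string is what would be sent to the language model
```

The language model never sees the whole data set, only the question plus the few chunks that scored highest in the similarity search.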

langchain helps build applications that follow a pipeline like this .

these applications are both data aware , meaning we can reference our own data in a vector store , and they are agentic .

they can take actions and not only provide answers to questions .

and these two capabilities open up a huge number of practical use cases .

anything involving personal assistants will be huge .

you can have a large language model book flights , transfer money , pay taxes .

now imagine the implications for studying and learning new things .

you can have a large language model reference an entire syllabus and help you learn the material as fast as possible .

coding , data analysis , data science , it's all going to be affected by this .

one of the applications that i'm most excited about is the ability to connect large language models to existing company data , such as customer data , marketing data , and so on .

i think we're going to see exponential progress in data analytics and data science . our ability to connect large language models to advanced apis , such as meta's api or google's api , is really going to make things take off .

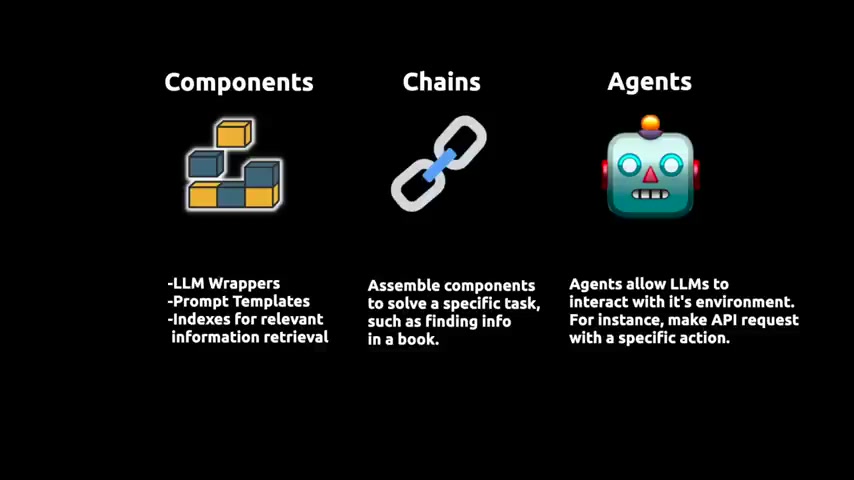

so the main value proposition of langchain can be divided into three main concepts .

we have the llm wrappers that allow us to connect to large language models like gpt-4 or the ones from hugging face .

prompt templates allow us to avoid having to hardcode text , which is the input to the llms .

then we have indexes that allow us to extract relevant information for the llms .

the chains allow us to combine multiple components together to solve a specific task and build an entire llm application .

and finally , we have the agents that allow the llm to interact with external apis .

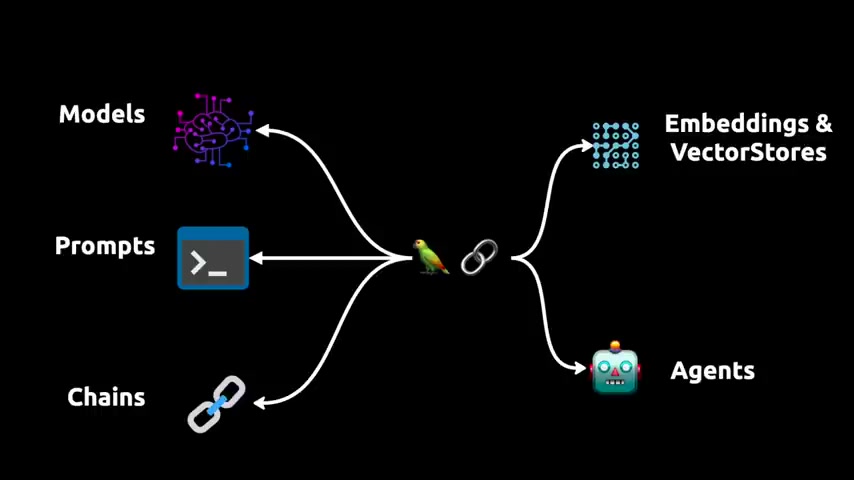

there's a lot to unpack in langchain and new stuff is being added every day , but on a high level , this is what the framework looks like .

we have models or wrappers around models .

we have prompts , we have chains , we have the embeddings and vector stores , which are the indexes , and then we have the agents .

so what i'm gonna do now is i'm gonna start unpacking each of these elements by writing code .

and in this video , i'm gonna keep it high level just to get an overview of the framework and a feel for the different elements .

first thing we're going to do is we're going to pip install three libraries .

we're going to need python-dotenv to manage the environment file with the passwords .

we're going to install langchain and we're going to install the pinecone client .

pinecone is going to be the vector store we're going to be using in this video .

in the environment file , we need the openai api key .

we need the pinecone environment , and we need the pinecone api key .

once you have signed up for a pinecone account ( it's free ) , the api keys and the environment name are easy to find .

same thing is true for openai .

just go to platform.openai.com/account/api-keys .

let's get started .

so , when you have the keys in an environment file , all you have to do is use load_dotenv and find_dotenv to get the keys .
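
In the video this step is a call to `load_dotenv(find_dotenv())` from the python-dotenv package. To show roughly what that does, here is a standard-library sketch that parses `KEY=VALUE` lines and copies them into the process environment. The variable names `OPENAI_API_KEY`, `PINECONE_API_KEY`, and `PINECONE_ENV` and all the values are placeholders assumed for illustration, and `parse_env` is a hypothetical helper, not part of python-dotenv.

```python
import os


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


# Contents of a hypothetical .env file (placeholder values, not real keys).
example_env = """
# keys for the services used in the video
OPENAI_API_KEY=sk-placeholder
PINECONE_API_KEY="pc-placeholder"
PINECONE_ENV=example-env
"""

settings = parse_env(example_env)

# setdefault keeps any value that is already exported in the shell.
for key, value in settings.items():
    os.environ.setdefault(key, value)

print(sorted(settings))
```

Keeping the keys in an environment file means they never have to appear in the source code itself.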

now we're ready to go .

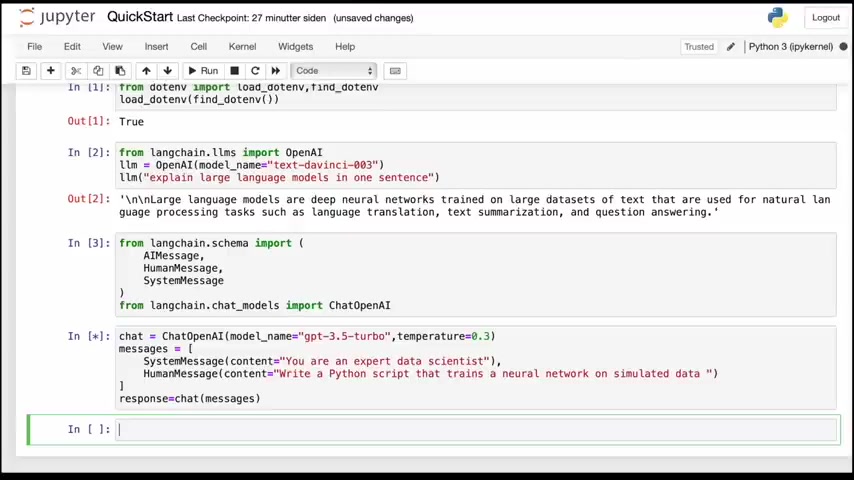

so we're going to start off with the llms or the wrappers around the llms .

then i'm going to import the openai wrapper and i'm going to instantiate the text-davinci-003 completion model and ask it to explain what a large language model is .

and this is very similar to when you call the openai api directly .

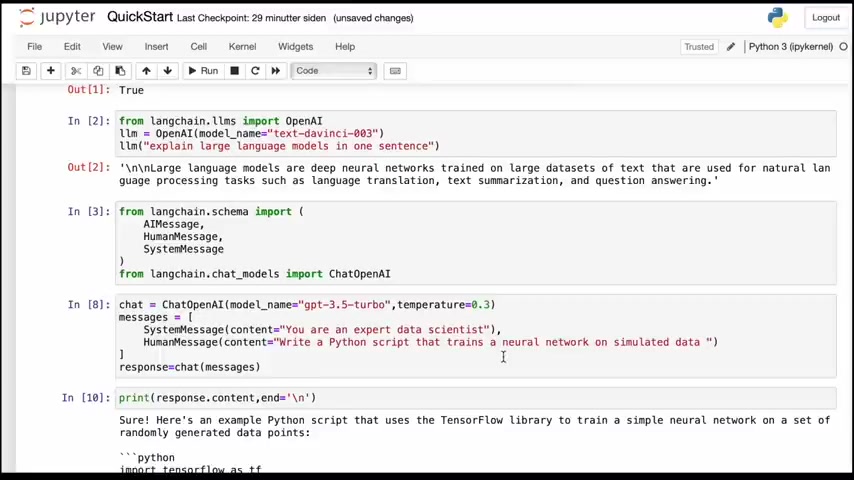

next , we're going to move over to the chat model .

so gpt-3.5 and gpt-4 are chat models .

and in order to interact with the chat model through langchain , we're going to import a schema consisting of three parts , an ai message , a human message , and a system message .

and then we're going to import ChatOpenAI .

the system message is what you use to configure the system when you use a model , and the human message is the user message .

to use the chat model , you combine the system message and the human message in a list , and then you use that as an input to the chat model .
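
The shape of that input can be sketched in plain Python. This is only an illustration of the idea of a message list: the `Message` dataclass and `fake_chat_model` are stand-ins invented for this example, the message texts are hypothetical, and no API call is made.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "system", "human", or "ai"
    content: str


def fake_chat_model(messages: list) -> Message:
    """Stand-in for a chat model: it only echoes its input back."""
    system = next((m.content for m in messages if m.role == "system"), "")
    human = [m.content for m in messages if m.role == "human"][-1]
    return Message("ai", f"[{system}] You asked: {human}")


# The system message configures the assistant, the human message is the request.
messages = [
    Message("system", "You are an expert data scientist"),
    Message("human", "Write a Python script that trains a neural network"),
]

reply = fake_chat_model(messages)
print(reply.role, "->", reply.content)
```

The reply comes back as a message with the "ai" role, which is why the schema has three message types rather than two.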

here i'm using gpt-3.5-turbo .

you could have used gpt-4 .

i'm not using that because the openai service is a little bit limited at the moment .

so this works , no problem .

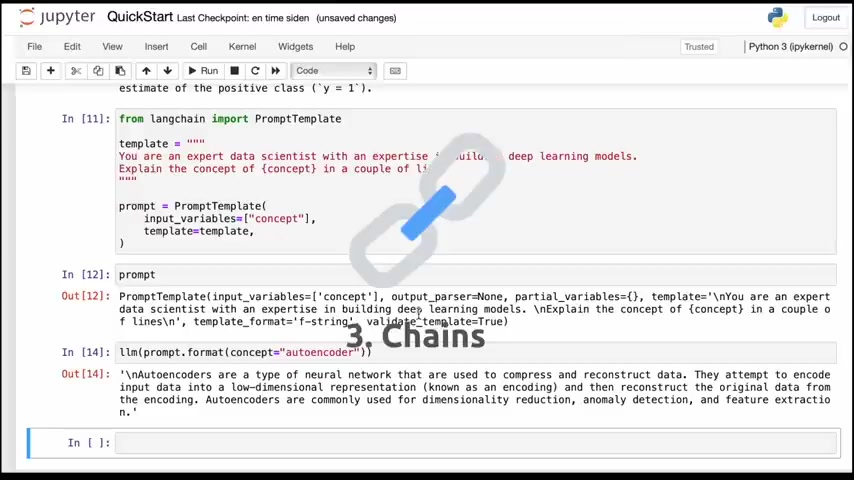

let's move to the next concept , which is prompt templates .

so prompts are what we are going to send to our language model .

but most of the time these prompts are not going to be static , they're going to be dynamic , they're going to be used in an application .

and to do that , langchain has something called prompt templates .

and what that allows us to do is take a piece of text and inject a user input into that text .

and we can then format the prompt with the user input .
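
A prompt template can be approximated with ordinary string formatting. The class below is a simplified stand-in written for this example, not LangChain's own class, and the template text and the `concept` variable are assumptions.

```python
class SimplePromptTemplate:
    """A piece of text with named placeholders that are filled in later."""

    def __init__(self, template: str, input_variables: list):
        self.template = template
        self.input_variables = input_variables

    def format(self, **kwargs: str) -> str:
        # Fail early if the caller forgot one of the declared variables.
        missing = [name for name in self.input_variables if name not in kwargs]
        if missing:
            raise KeyError(f"missing input variables: {missing}")
        return self.template.format(**kwargs)


template = SimplePromptTemplate(
    template="Explain the concept of {concept} in a couple of lines.",
    input_variables=["concept"],
)

prompt = template.format(concept="regularization")
print(prompt)
```

The fixed text lives in one place, and only the user's input changes from call to call.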

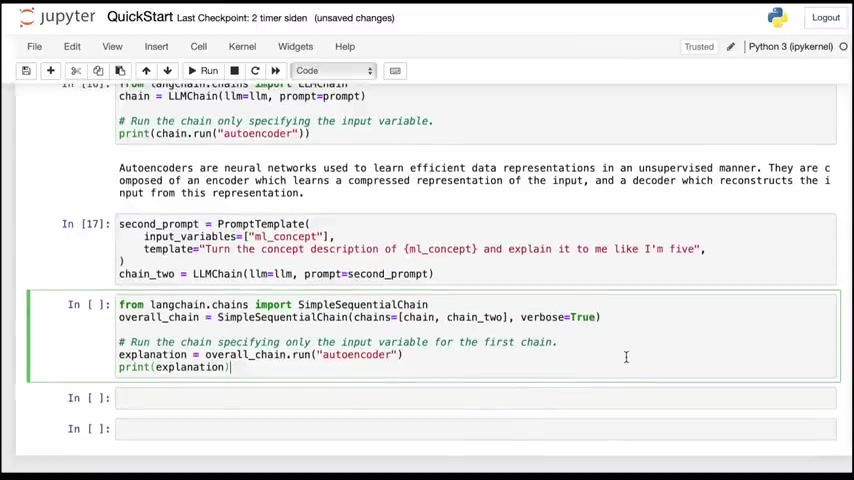

the third concept we want to look at is the concept of a chain .

a chain takes a language model and a prompt template and combines them into an interface that takes an input from the user and outputs an answer from the language model .

sort of like a composite function where the inner function is the prompt template and the outer function is the language model .
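
The composite-function picture can be written down directly. In this sketch `make_chain`, `format_prompt`, and `fake_llm` are names assumed for illustration, and `fake_llm` is a stand-in that returns a canned string instead of calling a model.

```python
from typing import Callable


def make_chain(
    format_prompt: Callable[[str], str], llm: Callable[[str], str]
) -> Callable[[str], str]:
    """Compose the two steps: chain(x) == llm(format_prompt(x))."""

    def run(user_input: str) -> str:
        return llm(format_prompt(user_input))

    return run


def format_prompt(concept: str) -> str:
    # Inner function: turns the user input into a full prompt.
    return f"Explain the concept of {concept} in a couple of lines."


def fake_llm(prompt: str) -> str:
    # Outer function: a stand-in for the language model call.
    return f"<answer to: {prompt}>"


chain = make_chain(format_prompt, fake_llm)
print(chain("autoencoder"))
```

The caller only passes the concept name; the prompt formatting and the model call are hidden behind one function.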

we can also build sequential chains where we have one chain returning an output and then a second chain taking the output from the first chain as an input .

so here we have the first chain that takes a machine learning concept and gives us a brief explanation of that concept .

the second chain then takes the description of the first concept and explains it to me like i'm five years old .

then we simply combine the two chains , the first chain called chain and then the second chain called chain two into an overall chain and run that chain .

and we see that the overall chain returns both the first description of the concept and the explain it to me like i'm five explanation of the concept .
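
A sequential chain can be sketched as a loop that feeds each output into the next step and keeps every intermediate result. The helper `run_sequential` and the two stand-in chains are assumptions for this example; they return canned text rather than model output.

```python
from typing import Callable, List


def run_sequential(chains: List[Callable[[str], str]], first_input: str) -> List[str]:
    """Run the chains in order, passing each output to the next chain."""
    outputs = []
    current = first_input
    for chain in chains:
        current = chain(current)
        outputs.append(current)  # keep every step's output, not only the last
    return outputs


def explain(concept: str) -> str:
    # Stand-in for the first chain: a brief explanation of the concept.
    return f"Brief explanation of {concept}."


def explain_like_im_five(description: str) -> str:
    # Stand-in for the second chain: rephrases the first chain's output.
    return f"For a five-year-old: {description}"


outputs = run_sequential([explain, explain_like_im_five], "autoencoder")
for step, text in enumerate(outputs, start=1):
    print(step, text)
```

Returning the full list mirrors what the video shows: both the first description and the simplified one are available at the end.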

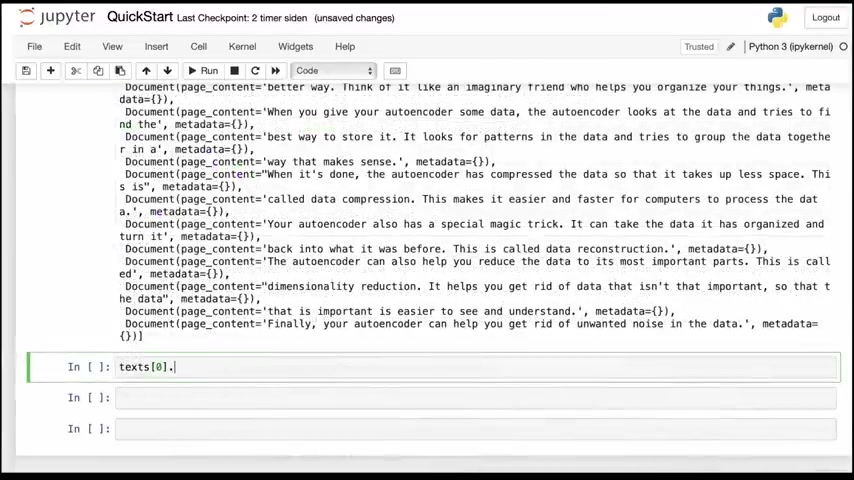

all right , let's move on to embeddings and vector stores .

but before we do that , let me just change the explain it to me like i'm five prompt so that we get a few more words .

gonna go with 500 words .

all right , so this is a slightly longer explanation for a five-year-old .

now what i'm going to do is i'm going to take this text and i'm going to split it into chunks because we want to store it in a vector store in pinecone .

and langchain has a text splitter tool for that .

so i'm going to import RecursiveCharacterTextSplitter and then i'm going to split the text into chunks like we talked about in the beginning of the video .
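
To make the idea of chunking concrete, here is a deliberately simple splitter that cuts by character count with a small overlap between neighbouring chunks. It is not the algorithm the library's splitter uses (that one tries to break on separators such as paragraph and line breaks first); `split_text` and its parameter names are assumptions for this sketch.

```python
def split_text(text: str, chunk_size: int, chunk_overlap: int) -> list:
    """Split text into fixed-size character chunks that overlap slightly."""
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


text = "abcdefghijklmnopqrstuvwxyz"
chunks = split_text(text, chunk_size=10, chunk_overlap=2)
print(chunks)
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of storing a little duplicate text.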

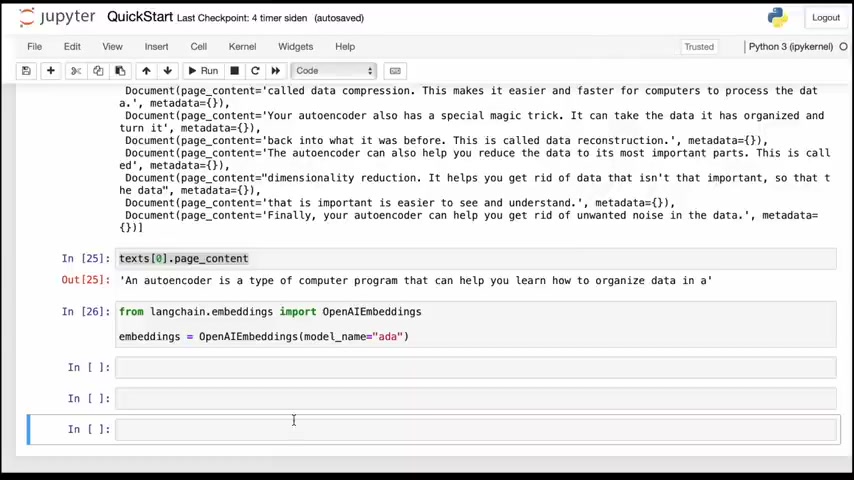

we can extract the plain text of the individual elements of the list with page_content .

and what we want to do now is we want to turn this into an embedding , which is just a vector representation of this text .

and we can use openai's embedding model , ada .

with openai's model , we can call embed_query on the raw text that we just extracted from the chunks of the document and then we get the vector representation of that text or the embedding .
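
What comes back from such a call is simply a fixed-length list of numbers. The toy function below shows that shape by hashing words into 8 buckets. It carries none of the semantic meaning a trained model provides, and `toy_embed` and the dimension of 8 are assumptions for this sketch; real embedding models return much longer vectors (for example 1536 numbers for OpenAI's text-embedding-ada-002).

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 8) -> list:
    """Map text to a unit-length vector by hashing each word into a bucket."""
    vector = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[digest[0] % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vector))
    # Normalise so that vectors of long and short texts are comparable.
    return [x / norm for x in vector] if norm else vector


vec = toy_embed("an autoencoder compresses data and reconstructs it")
print(len(vec), vec)
```

Every text, short or long, maps to a vector of the same length, which is what makes similarity search between a question and a chunk possible.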

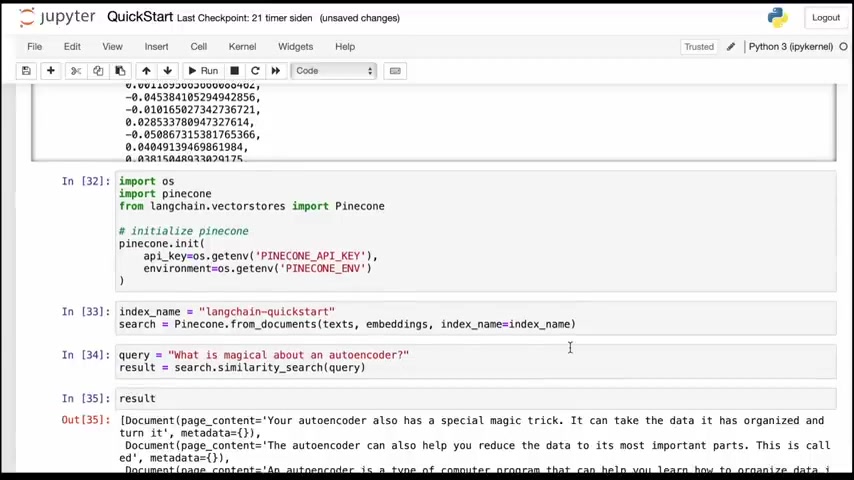

now we're going to take the chunks of the explanation document and we're going to store the vector representations in pinecone .

so we'll import the pinecone python client and we'll import pinecone from langchain.vectorstores and we initialize the pinecone client with the key and the environment that we have in the environment file .

then we take the variable texts , which consists of all the chunks of data we wanna store .

we take the embeddings model and we take an index name and we load those chunks and their embeddings to pinecone .

and once we have the vectors stored in pinecone , we can ask questions about the data stored , such as what is magical about an autoencoder , and then we can do a similarity search in pinecone to get the answer or to extract all the relevant chunks .
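
A vector store boils down to two operations: save (text, vector) pairs, and return the texts whose vectors are closest to a query vector. The in-memory class below is a sketch of that idea only. It is not Pinecone's client API, and the class name, the three sample chunks, and their hand-written 3-dimensional vectors are all made up for illustration.

```python
import math


class InMemoryVectorStore:
    """Minimal stand-in for a vector database, kept entirely in memory."""

    def __init__(self):
        self._items = []  # list of (text, vector) pairs

    def add(self, text: str, vector: list) -> None:
        self._items.append((text, vector))

    @staticmethod
    def _cosine(a: list, b: list) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def similarity_search(self, query_vector: list, k: int = 2) -> list:
        """Return the k stored texts most similar to the query vector."""
        ranked = sorted(
            self._items,
            key=lambda item: self._cosine(query_vector, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


store = InMemoryVectorStore()
store.add("chunk about autoencoders", [0.9, 0.1, 0.0])
store.add("chunk about decision trees", [0.0, 1.0, 0.0])
store.add("chunk about compression", [0.7, 0.0, 0.7])

# In a real pipeline the query vector would be the embedded user question.
results = store.similarity_search([1.0, 0.0, 0.0], k=2)
print(results)
```

A hosted vector database does the same job, but with indexing that stays fast when there are millions of vectors instead of three.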

if we head over to pinecone , we can see that the index is here .

we can click on it and inspect it , check the index info , and see that we have a total of 13 vectors in the vector store .

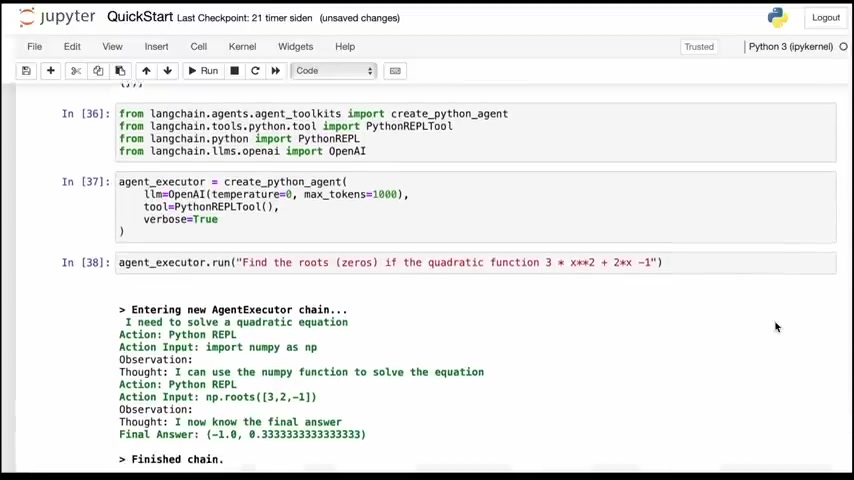

all right , so the last thing we're going to do is we're going to have a brief look at the concept of an agent .

now , if you head over to openai's chatgpt plugins page , you can see that they're showcasing a python code interpreter .

now we can actually do something similar in langchain .

so here i'm importing create_python_agent as well as PythonREPLTool and PythonREPL from langchain .

then we instantiate a python agent executor using an openai language model .

and this allows us to have the language model run python code .

so here i want to find the roots of a quadratic function and we see that the agent executor is using numpy's roots function to find the roots of this quadratic function .
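
For reference, this is the kind of computation the agent ends up running. The sketch below uses the quadratic formula from the standard library instead of numpy, and the coefficients 3, 2, -1 are a hypothetical example rather than values taken from the transcript.

```python
import cmath


def quadratic_roots(a: float, b: float, c: float) -> tuple:
    """Roots of a*x**2 + b*x + c = 0 via the quadratic formula."""
    if a == 0:
        raise ValueError("a must be non-zero for a quadratic")
    # cmath.sqrt also handles a negative discriminant (complex roots).
    sqrt_disc = cmath.sqrt(b * b - 4 * a * c)
    return ((-b + sqrt_disc) / (2 * a), (-b - sqrt_disc) / (2 * a))


r1, r2 = quadratic_roots(3, 2, -1)
print(r1, r2)
```

The point of the agent is that the language model writes and executes code like this itself, instead of trying to do the arithmetic in its head.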

alright , so this video was meant to give you a brief introduction to the core concepts of langchain .

if you want to follow along for a deep dive into the concepts , hit subscribe .

thanks for watching .
