
Deeplearning

In lesson one, we'll be covering models, prompts, and parsers.

Models refers to the language models underpinning a lot of it. Prompts refers to the style of creating inputs to pass into the models.

And then parsers is on the opposite end.

It involves taking the output of these models and parsing it into a more structured format so that you can do things downstream with it.

So when you build an application using an LLM, there'll often be reusable models.

We repeatedly prompt a model and parse its output.

And so LangChain gives an easy set of abstractions to do this type of operation.

So with that, let's jump in and take a look at models, prompts, and parsers.

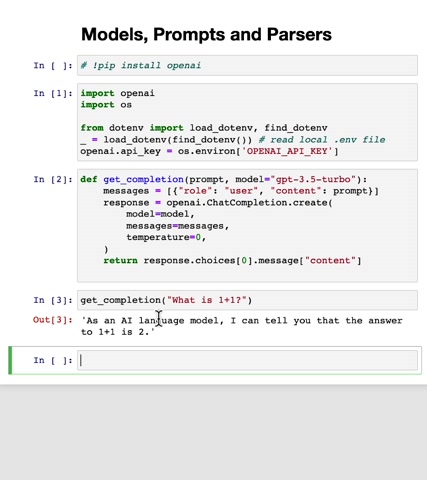

So to get started, here's a little bit of starter code.

I'm going to import os, import openai, and load my OpenAI secret key.

The openai library is already installed in my Jupyter notebook environment.

So if you're running this locally and you don't have openai installed yet, you might need to run !pip install openai, but I'm not going to do that here.

And then here's a helper function.

This is actually very similar to the helper function that you might have seen in the ChatGPT Prompt Engineering for Developers course that I offered together with OpenAI's Isa Fulford.

And so with this helper function, you can say get_completion on "What is 1+1?"

And this will call ChatGPT, or technically the model gpt-3.5-turbo, to give you an answer back like this.
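For reference, here is a minimal sketch of such a helper. It assumes the current openai Python SDK (version 1.0 or later), which exposes `OpenAI().chat.completions.create`. The SDK version used in the lesson may differ, so treat this as an approximation rather than the course's exact code. The request-building step is split into a separate, hypothetical `build_request` function so that it can be checked without an API key.

```python
def build_request(prompt: str, model: str = "gpt-3.5-turbo") -> dict:
    """Build the keyword arguments for a single-turn chat completion request."""
    messages = [{"role": "user", "content": prompt}]
    # temperature=0 makes the output as deterministic as the API allows
    return {"model": model, "messages": messages, "temperature": 0}


def get_completion(prompt: str, model: str = "gpt-3.5-turbo") -> str:
    """Send one prompt to the chat completions endpoint and return the reply text.

    Assumes openai>=1.0 is installed and OPENAI_API_KEY is set in the environment.
    """
    from openai import OpenAI  # imported lazily so build_request works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**build_request(prompt, model))
    return response.choices[0].message.content


# Usage (requires network access and an API key):
# print(get_completion("What is 1+1?"))
```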

Now let's motivate the LangChain abstractions for models, prompts, and parsers.

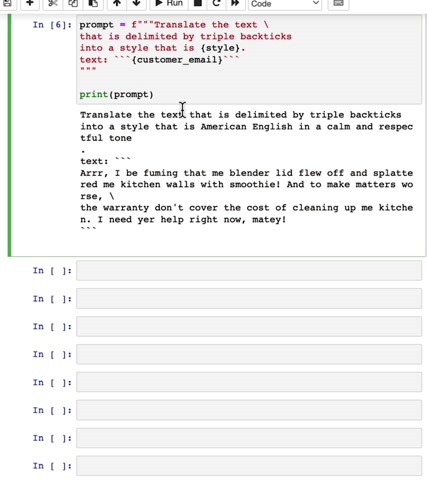

Let's say you get an email from a customer in a language other than English.

To make sure this is accessible, the other language I'm going to use is the English pirate language.

The email says: "Arr, I be fuming that me blender lid flew off and splattered me kitchen walls with smoothie! And to make matters worse, the warranty don't cover the cost of cleaning up me kitchen. I need yer help right now, matey!"

And so what we will do is ask this LLM to translate the text to American English in a calm and respectful tone.

So I'm going to set style to "American English in a calm and respectful tone."

And so to actually accomplish this, if you've seen a little bit of prompting before, I'm going to specify the prompt using an f-string. It contains the instructions to translate the text that is delimited by triple backticks into a style that is {style}, and then it plugs in these two variables, the style and the customer email.
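As a sketch of this hard-coded approach, the prompt can be assembled with an f-string like the one below. The pirate email and style strings are reconstructed from the narration. The `DELIMITER` variable is an addition here, used only so that the triple backticks don't clash with the Markdown fence around the code.

```python
# Triple backticks built programmatically so this snippet can sit inside a Markdown fence
DELIMITER = "`" * 3

customer_email = (
    "Arr, I be fuming that me blender lid flew off and splattered me kitchen "
    "walls with smoothie! And to make matters worse, the warranty don't cover "
    "the cost of cleaning up me kitchen. I need yer help right now, matey!"
)
style = "American English in a calm and respectful tone"

# The f-string hard-codes the instructions and plugs in both variables
prompt = f"""Translate the text that is delimited by triple backticks
into a style that is {style}.
text: {DELIMITER}{customer_email}{DELIMITER}
"""

print(prompt)
```

The drawback shows up as soon as a second language or style is needed: the whole f-string has to be copied and edited, which is what the prompt template below avoids.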

And so this generates a prompt that says "Translate the text..." and so on.

I encourage you to pause the video and run the code and also try modifying the prompt to see if you can get a different output.

You can then prompt the large language model to get a response.

Let's see what the response is.

So it translated the English pirate message into this very polite version:

"I'm really frustrated that my blender lid flew off and made a mess of my kitchen walls with smoothie..." and so on.

"I could really use your help right now, my friend."

That sounds very nice.

So suppose you have different customers writing reviews in different languages: not just English pirate, but French, German, Japanese, and so on. You can imagine having to generate a whole sequence of prompts to produce such translations.

Let's look at how we can do this in a more convenient way using LangChain.

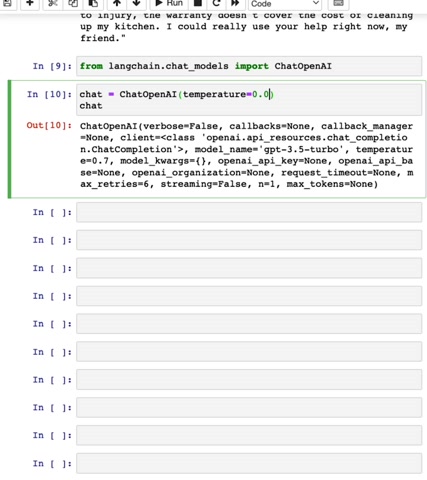

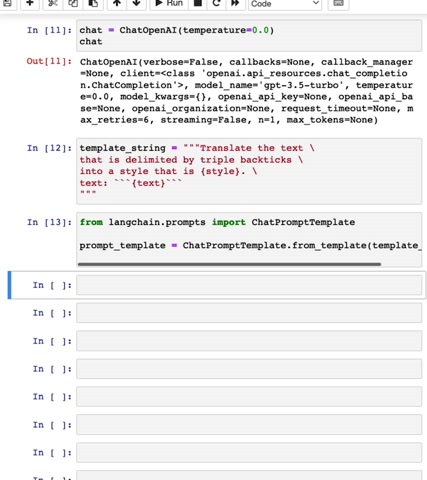

I'm going to import ChatOpenAI.

This is LangChain's abstraction for the ChatGPT API endpoint.

And so if I then set chat = ChatOpenAI() and look at what chat is, it creates this object, which uses the ChatGPT model, also called gpt-3.5-turbo.

When I'm building applications, one thing I will often do is set the temperature parameter to zero. The default temperature is 0.7.

But let me actually redo that with temperature=0.0. Now the temperature is set to zero, which makes its output a little bit less random.

And now let me define the template string as follows: "Translate the text that is delimited by triple backticks into a style that is {style}."

And then here's the text. To repeatedly reuse this template, let's import LangChain's ChatPromptTemplate.

And then let me create the prompt template using the template string that we just wrote above.

From the prompt template, you can actually extract the original prompt.

And it realizes that this prompt has two input variables, style and text, which were shown here with the curly braces.

And here is the original template as well, which we had specified. In fact, if we print out the input variables,

it realizes it has two input variables, style and text.
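In LangChain, the template is built with `ChatPromptTemplate.from_template(template_string)`. To show what "realizing the input variables" amounts to without depending on a particular LangChain version, here is a standard-library sketch that finds the `{style}` and `{text}` placeholders with `string.Formatter`. It illustrates the idea and is not LangChain's implementation. The `input_variables` function name is hypothetical.

```python
from string import Formatter

DELIMITER = "`" * 3  # triple backticks, built here to avoid clashing with the Markdown fence

# The same instructions as the f-string version, but with placeholders left unfilled
template_string = (
    "Translate the text that is delimited by triple backticks "
    "into a style that is {style}. "
    "text: " + DELIMITER + "{text}" + DELIMITER + "\n"
)


def input_variables(template: str) -> list[str]:
    """Return the placeholder names in a str.format-style template, in order, without duplicates."""
    names = []
    for _literal, field_name, _spec, _conversion in Formatter().parse(template):
        # field_name is None for trailing literal text with no placeholder
        if field_name and field_name not in names:
            names.append(field_name)
    return names


print(input_variables(template_string))  # ['style', 'text']
```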

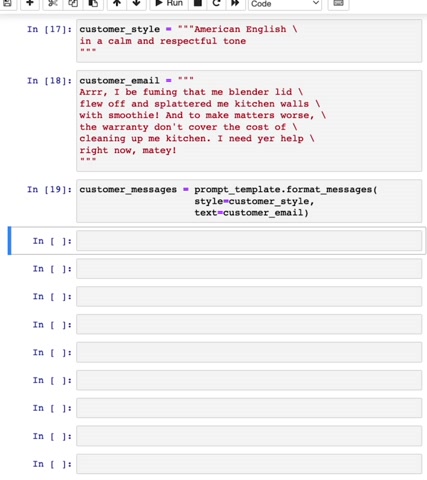

Now let's specify the style.

This is the style that I want the customer message to be translated to.

So I'm going to call this customer_style, and here's my same customer email as before.

And now if I create customer_messages, this will generate the prompt, and we'll pass it to the large language model in a minute to get a response.

If you look at the types, customer_messages is actually a list.

And if you look at the first element of the list, this is more or less the prompt that you would expect this to be creating.
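In LangChain, this step is typically `prompt_template.format_messages(style=customer_style, text=customer_email)`. The standard-library sketch below imitates it under a simplifying assumption: each message is a plain dict with `role` and `content` keys, which stands in for LangChain's message objects. The `format_messages` function here is hypothetical. It puts the filled-in prompt into a one-element list, which is why the result is a list whose first element is the prompt.

```python
DELIMITER = "`" * 3  # triple backticks

template_string = (
    "Translate the text that is delimited by triple backticks "
    "into a style that is {style}. "
    "text: " + DELIMITER + "{text}" + DELIMITER + "\n"
)


def format_messages(template: str, **variables: str) -> list[dict]:
    """Fill the template and wrap the result as a one-element list of chat messages."""
    content = template.format(**variables)  # raises KeyError if a variable is missing
    return [{"role": "user", "content": content}]


customer_style = "American English in a calm and respectful tone"
customer_email = "Arr, I be fuming that me blender lid flew off and splattered me kitchen walls with smoothie!"

customer_messages = format_messages(template_string, style=customer_style, text=customer_email)
print(type(customer_messages))  # <class 'list'>
print(customer_messages[0]["content"])
```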

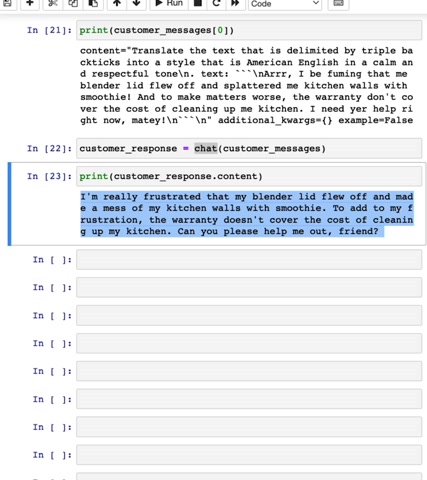

Lastly, let's pass this prompt to the LLM.

So I'm going to call chat, which we had set earlier as a reference to the OpenAI ChatGPT endpoint.

And if we print out customer_response.content, it gives you back this text translated from English pirate to polite American English.

And of course, you can imagine other use cases where the customer emails are in other languages.

And this tool can be used to translate the messages for an English speaker to understand and reply to. I encourage you to pause the video and run the code and also try modifying the prompt to see if you can get a different output.

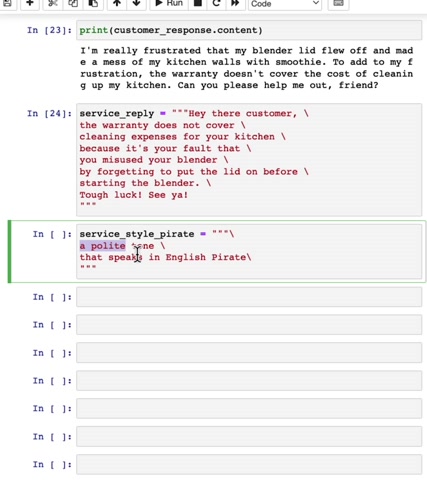

Now let's have our customer service agent reply to the customer in their original language.

So let's say an English-speaking customer service agent writes this: "Hey there customer, the warranty does not cover cleaning expenses for your kitchen because it's your fault that you misused your blender by forgetting to put on the lid. Tough luck!"

Not a very polite message.

But let's say this is what a customer service agent wants to send.

We are going to specify that the service message is going to be translated to this pirate style.

So we want it to be in a polite tone that speaks in English pirate.

And because we previously created that prompt template, the cool thing is that we can now reuse it and specify that the output style we want is this service_style_pirate and the text is this service_reply.
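The point of the template is that one definition serves both directions of the conversation. Here is a sketch of that reuse with plain `str.format`, which stands in for LangChain's `format_messages`. The variable names follow the lesson, and the message texts are shortened from the narration.

```python
DELIMITER = "`" * 3  # triple backticks

# One template, defined once
template_string = (
    "Translate the text that is delimited by triple backticks "
    "into a style that is {style}. "
    "text: " + DELIMITER + "{text}" + DELIMITER + "\n"
)

customer_style = "American English in a calm and respectful tone"
customer_email = "Arr, I be fuming that me blender lid flew off and splattered me kitchen walls with smoothie!"

service_style_pirate = "a polite tone that speaks in English Pirate"
service_reply = "Hey there customer, the warranty does not cover cleaning expenses for your kitchen. Tough luck!"

# Reuse the same template for the inbound and the outbound translation
prompts = [
    template_string.format(style=style, text=text)
    for style, text in [(customer_style, customer_email), (service_style_pirate, service_reply)]
]

for prompt in prompts:
    print(prompt)
```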

And if we do that, that's the prompt.

And if we prompt GPT, this is the response it gives us back:

"I must kindly inform you that the warranty be not covering the expenses of cleaning your galley..." and so on.

"Aye, tough luck!"

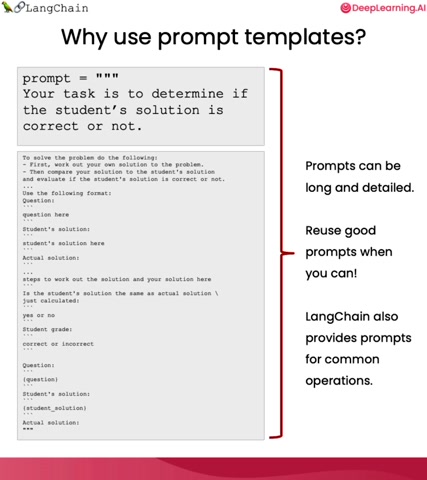

So you might be wondering: why are we using prompt templates instead of just an f-string?

The answer is that as you build sophisticated applications, prompts can be quite long and detailed.

And so prompt templates are a useful abstraction to help you reuse good prompts when you can.

This is an example of a relatively long prompt to grade a student submission for an online learning application. In a prompt like this, you can ask the LLM to first solve the problem and then produce its output in a certain format. Wrapping it in a LangChain prompt makes a prompt like this easier to reuse.

Also, you'll see later that LangChain provides prompts for some common operations, such as summarization, question answering, connecting to SQL databases, or connecting to different APIs.

And so by using some of LangChain's built-in prompts, you can quickly get an application working without needing to engineer your own prompts.

One other aspect of LangChain's prompt libraries is that they also support output parsing, which we'll get to in a minute.

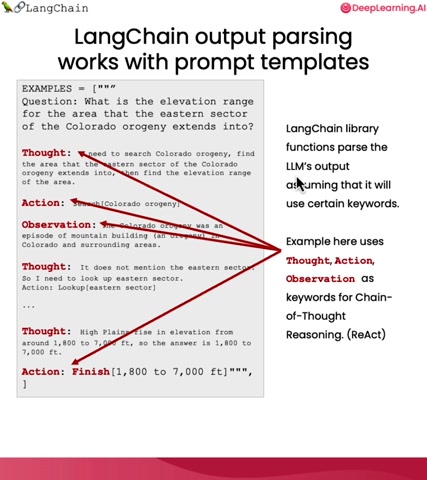

When you're building a complex application using an LLM, you often instruct the LLM to generate its output in a certain format, such as using specific keywords.

This example on the left illustrates using an LLM to carry out something called chain-of-thought reasoning using a framework called ReAct.

But don't worry about the technical details.

The gist of it is that Thought is what the LLM is thinking, because giving an LLM space to think often helps it reach more accurate conclusions. Action is a keyword to carry out a specific action, Observation shows what it learned from that action, and so on.

And if you have a prompt that instructs the LLM to use these specific keywords (Thought, Action, and Observation), then this prompt can be coupled with a parser to extract the text that has been tagged with these keywords.
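To make the idea concrete, here is a small regex-based sketch of a parser that pulls out the text tagged with `Thought:`, `Action:`, and `Observation:`. The sample LLM output and the `parse_react_steps` function are hypothetical, and this is not LangChain's own parser. It assumes each keyword starts a new line.

```python
import re

# A keyword at the start of a line, then everything up to the next keyword line or the end of the text
STEP_PATTERN = re.compile(
    r"^(Thought|Action|Observation):\s*(.*?)(?=^(?:Thought|Action|Observation):|\Z)",
    re.MULTILINE | re.DOTALL,
)


def parse_react_steps(llm_output: str) -> list[tuple[str, str]]:
    """Return (keyword, text) pairs in the order they appear in the output."""
    return [(keyword, text.strip()) for keyword, text in STEP_PATTERN.findall(llm_output)]


# Hypothetical LLM output that follows the keyword convention
sample_output = """Thought: I need to find out when the blender was purchased.
Action: Search[order history for customer 123]
Observation: The blender was purchased 14 months ago.
Thought: The one-year warranty has expired."""

for keyword, text in parse_react_steps(sample_output):
    print(f"{keyword}: {text}")
```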

And so together, that gives a very nice abstraction to specify the input to an LLM and then also have a parser correctly interpret the output that the LLM gives.

And so with that, let's return to see an example of an output parser using LangChain.

In this example, let's take a look at how you can have an LLM output JSON and use LangChain to parse that output.

And the example I'll use will be to extract information from a product review and format that output in JSON.

So here's an example of how you would like the output to be formatted.

Technically, this is a Python dictionary: whether or not the product is a gift is False, the number of days it took to deliver was five, and the price value was "pretty affordable".

So this is one example of a desired output.

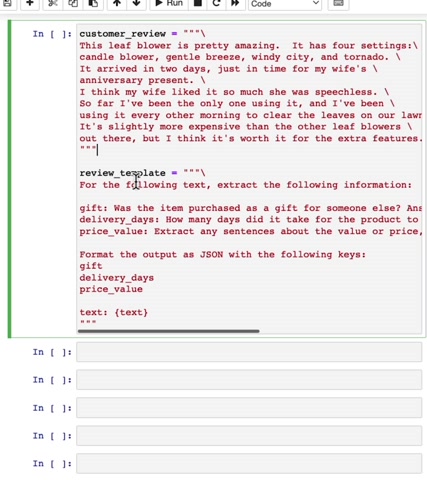

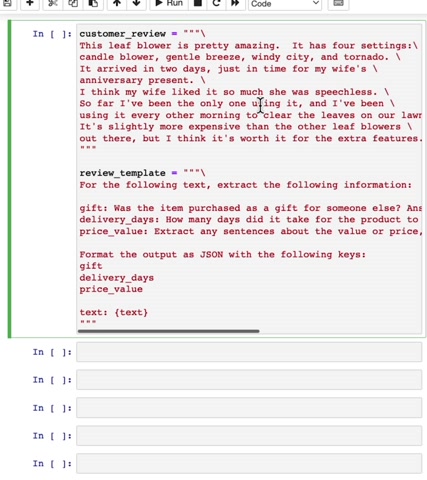

Here is an example of a customer review, as well as a template to try to get to that JSON output.

So here's a customer review.

It says, "This leaf blower is pretty amazing.

It has four settings: candle blower, gentle breeze, windy city, and tornado.

It arrived in two days, just in time for my wife's anniversary present.

I think my wife liked it so much she was speechless.

So far, I've been the only one using it," and so on.

And here's a review template: "For the following text, extract the following information."

Was this a gift?

So in this case, it would be yes, because this is a gift.

And also delivery days.

How long did it take to deliver?

It looks like in this case it arrived in two days. And what's the price value? You know, "slightly more expensive than the other leaf blowers," and so on.

So the review template asks the LLM to take a customer review as input, extract these three fields, and then format the output as JSON with the following keys.

All right .

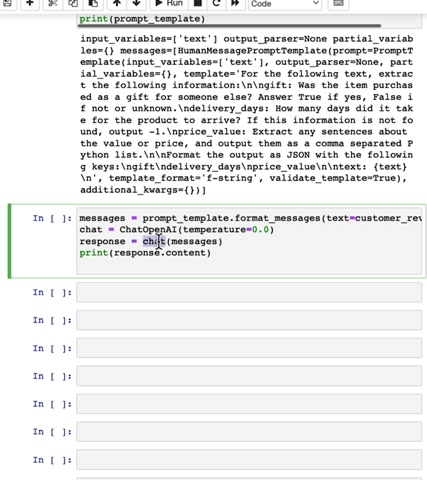

So here's how you can wrap this in LangChain.

Let's import ChatPromptTemplate.

We actually imported this already earlier.

So technically this line is redundant, but I'll just import it again, and then create the prompt template from the review template up on top.

And so here's the prompt template.

Now, similar to our earlier usage of a prompt template, let's create the messages to pass to the OpenAI endpoint, create the OpenAI endpoint, call that endpoint, and then print out the response.

I encourage you to pause the video and run the code. And there it is: it says gift is true,

delivery days is two, and the price value also looks pretty accurate.

But note that if we check the type of the response, this is actually a string.

So it looks like JSON and looks like it has key-value pairs, but it's actually not a dictionary.

This is just one long string.

So what I'd really like to do is go to the response content and get the value from the gift key, which should be true.

But if I run this, it should generate an error because, well, this is actually a string.

This is not a Python dictionary.
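The problem can be reproduced without calling the model at all. In the sketch below, `response_content` is a hypothetical stand-in for `response.content`, shaped like the reply above. Calling `.get("gift")` on it fails because it is a `str`. Python's standard `json.loads` turns the text into a real dictionary, and in doing so converts JSON's `true` into Python's `True`.

```python
import json

# Hypothetical stand-in for response.content: JSON-looking text, but still a str
response_content = """{
  "gift": true,
  "delivery_days": 2,
  "price_value": ["It's slightly more expensive than the other leaf blowers out there, but I think it's worth it for the extra features."]
}"""

print(type(response_content))  # <class 'str'>

try:
    response_content.get("gift")  # str has no .get method
except AttributeError as err:
    print("AttributeError:", err)

review = json.loads(response_content)  # parse the JSON text into a dict
print(type(review))             # <class 'dict'>
print(review["gift"])           # True
print(review["delivery_days"])  # 2
```

This only works when the model returns clean JSON. The output parser below also tells the model which keys to produce.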

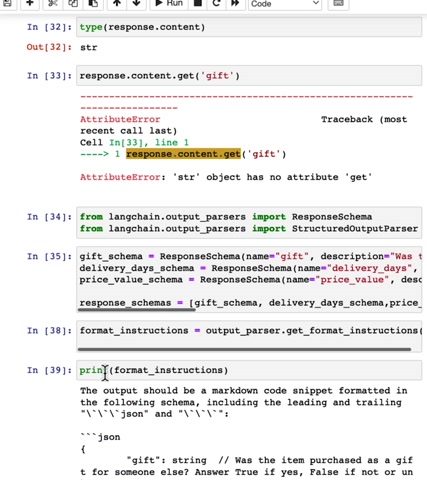

So let's see how we would use LangChain's parser to do this. I'm going to import ResponseSchema and StructuredOutputParser from LangChain.

And I'm going to tell it what I want it to parse by specifying these response schemas.

So the gift schema is named gift, and here's the description: "Was the item purchased as a gift for someone else?

Answer True if yes, False if not or unknown," and so on.

So we have a gift schema, a delivery_days schema, and a price_value schema, and then let's put all three of them into a list as follows.
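LangChain's `ResponseSchema` is constructed from a `name` and a `description`. To show the shape of this step without depending on the library, the sketch below uses a hypothetical frozen dataclass, `FieldSpec`, with the same two fields. The descriptions follow the review template from the lesson.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical stand-in for a response schema: one output key plus its description."""
    name: str
    description: str


gift_schema = FieldSpec(
    name="gift",
    description="Was the item purchased as a gift for someone else? "
                "Answer True if yes, False if not or unknown.",
)
delivery_days_schema = FieldSpec(
    name="delivery_days",
    description="How many days did it take for the product to arrive? "
                "If this information is not found, output -1.",
)
price_value_schema = FieldSpec(
    name="price_value",
    description="Extract any sentences about the value or price, "
                "and output them as a comma separated Python list.",
)

response_schemas = [gift_schema, delivery_days_schema, price_value_schema]
print([schema.name for schema in response_schemas])  # ['gift', 'delivery_days', 'price_value']
```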

Now that I've specified the schemas, LangChain can actually give you the prompt itself: the output parser tells you what instructions it wants you to send to the LLM.

So if I print format_instructions, it has a pretty precise set of instructions for the LLM. These instructions cause it to generate output that the output parser can process.
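The format instructions are generated from the schemas. The sketch below imitates that with a hypothetical `format_instructions` function. Its wording is an assumption and is not the exact text that LangChain's `StructuredOutputParser.get_format_instructions()` produces. It follows the same idea of asking for a JSON object with one key per schema inside a `json` code block. `FieldSpec` is the same hypothetical stand-in for a response schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical stand-in for a response schema."""
    name: str
    description: str


def format_instructions(schemas: list[FieldSpec]) -> str:
    """Build instructions asking the LLM for a fenced JSON object with one key per schema."""
    fence = "`" * 3  # triple backticks, built here to avoid clashing with the Markdown fence
    key_lines = "\n".join(f'    "{s.name}": string  // {s.description}' for s in schemas)
    return (
        "Return a markdown code snippet containing a JSON object with these keys:\n\n"
        f"{fence}json\n{{\n{key_lines}\n}}\n{fence}\n"
    )


schemas = [
    FieldSpec("gift", "Was the item purchased as a gift? True if yes, False if not or unknown."),
    FieldSpec("delivery_days", "How many days did it take to arrive? -1 if not found."),
    FieldSpec("price_value", "Any sentences about the value or price, as a Python list."),
]
print(format_instructions(schemas))
```

The instructions are generated from the same schemas the parser will later check. As a result, the prompt and the parser cannot drift apart when a field is added or renamed.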

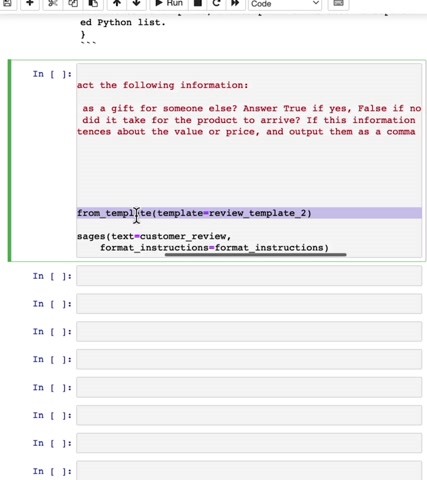

So here's a new review template, which includes the format instructions that LangChain generated. We can create a prompt from this template too, and then create the messages that we'll pass to the OpenAI endpoint.

If you want, you can take a look at the actual prompt, which gives the instructions to extract the fields gift, delivery_days, and price_value.

Here's the text, and then here are the formatting instructions.

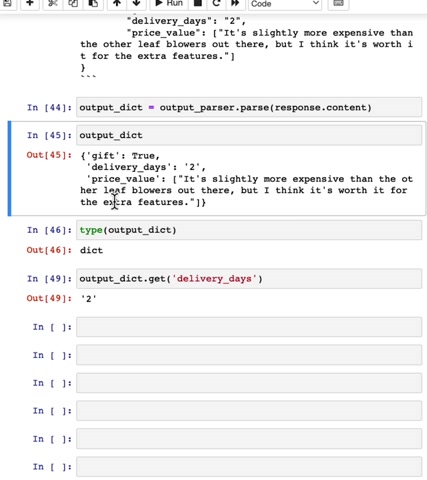

Finally, if we call the OpenAI endpoint, let's take a look at what response we got.

It is now this. If we use the output parser that we created earlier, we can parse this into an output dictionary, which looks like this when printed. Notice that it is of type dictionary, not a string. That's why I can now extract the value associated with the key gift and get True, or the value associated with delivery_days and get 2, or the value associated with price_value.

So this is a nifty way to take your LLM output and parse it into a Python dictionary to make the output easier to use in downstream processing.
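Under the hood, parsing amounts to three steps: find the JSON block in the reply, decode it, and check that the expected keys are present. The sketch below does that with the standard library. The `parse_structured_output` function and the sample reply are hypothetical. This is a simplified stand-in for what LangChain's `output_parser.parse(response.content)` does, not its implementation.

```python
import json
import re

FENCE = "`" * 3  # triple backticks, built here to avoid clashing with the Markdown fence

# Captures the body of a fenced json block if one is present
JSON_BLOCK = re.compile(FENCE + r"(?:json)?\s*(.*?)\s*" + FENCE, re.DOTALL)


def parse_structured_output(text: str, expected_keys: list[str]) -> dict:
    """Extract a JSON object from an LLM reply and verify it has the expected keys."""
    match = JSON_BLOCK.search(text)
    payload = match.group(1) if match else text  # fall back to the whole reply
    data = json.loads(payload)
    missing = [key for key in expected_keys if key not in data]
    if missing:
        raise ValueError(f"LLM output is missing keys: {missing}")
    return data


# Hypothetical reply in the requested format
body = """{
  "gift": true,
  "delivery_days": 2,
  "price_value": ["It's slightly more expensive than the other leaf blowers out there, but I think it's worth it for the extra features."]
}"""
reply = FENCE + "json\n" + body + "\n" + FENCE

output_dict = parse_structured_output(reply, ["gift", "delivery_days", "price_value"])
print(type(output_dict))                 # <class 'dict'>
print(output_dict.get("gift"))           # True
print(output_dict.get("delivery_days"))  # 2
```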

I encourage you to pause the video and run the code .

And so that's it for models, prompts, and parsers. With these tools, hopefully you'll be able to reuse your own prompt templates and easily share them with the people you're collaborating with.

You can even use LangChain's built-in prompt templates. As you just saw, these can often be coupled with an output parser: the input prompt asks for output in a specific format, and the parser then parses that output into a Python dictionary or some other data structure that makes downstream processing easy.

I hope you find this useful in many of your applications.

And with that, let's go on to the next video. There, we'll see how LangChain can help you build better chatbots, or help an LLM hold more effective chats, by better managing what it remembers from the conversation so far.
