https://www.youtube.com/watch?v=T_kXY43VZnk

3D Gaussian Splatting for Real-Time Radiance Field Rendering

Hi, this is a short video presentation of 3D Gaussian Splatting for Real-Time Radiance Field Rendering.

We propose to use 3D Gaussians as a new representation for radiance fields.

We show that 3D Gaussians preserve desirable properties of continuous volumetric radiance fields while avoiding unnecessary computation in empty space.

We start with a set of cameras and a point cloud provided by Structure-from-Motion during camera calibration.

Next, we optimize a set of 3D Gaussians to represent the scene.

Finally, we render transparent anisotropic Gaussians and backpropagate gradients to their properties.
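To illustrate what rendering transparent Gaussians involves, here is a minimal sketch of front-to-back alpha blending for a single pixel. It assumes the Gaussians have already been projected to the screen, evaluated at this pixel, and sorted by depth; the function name `composite_pixel` and the early-exit threshold are hypothetical choices for illustration, not taken from the talk.

```python
def composite_pixel(splats, min_transmittance=1e-4):
    """Blend depth-sorted (rgb, alpha) contributions front to back.

    splats: iterable of ((r, g, b), alpha), nearest first (assumed pre-sorted).
    Returns the accumulated color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for rgb, alpha in splats:
        weight = alpha * transmittance
        for c in range(3):
            color[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # pixel is effectively opaque; later splats contribute nothing
    return color, transmittance


# Two half-transparent splats: red in front of green.
print(composite_pixel([((1.0, 0.0, 0.0), 0.5), ((0.0, 1.0, 0.0), 0.5)]))
```

Because every step is a product or sum of the per-splat colors and opacities, the blend is differentiable, which is what allows gradients to flow back to the Gaussian properties.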

After the optimization, the 3D Gaussians often take on extreme anisotropic properties to represent very high-frequency geometry like vegetation.
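The talk does not spell out how anisotropy is parameterized. One common choice, assumed here, is to build each Gaussian's covariance from a per-axis scale and a rotation quaternion, Σ = R S Sᵀ Rᵀ, which keeps Σ a valid covariance during optimization. The function names below are hypothetical, and the sketch uses only plain Python lists.

```python
def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = (w * w + x * x + y * y + z * z) ** 0.5
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize to a unit quaternion
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(scale, quat):
    """Sigma = R diag(scale^2) R^T for per-axis scales and a rotation."""
    R = quat_to_rotmat(quat)
    return [
        [sum(R[i][k] * scale[k] ** 2 * R[j][k] for k in range(3))
         for j in range(3)]
        for i in range(3)
    ]


# A thin, needle-like Gaussian: long along x, very short along y and z.
sigma = covariance((1.0, 0.1, 0.01), (1.0, 0.0, 0.0, 0.0))
```

With very different scales per axis, a single Gaussian stretches into a needle or a flat disc, which is how a few primitives can cover thin structures.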

Gaussians are a compact and fast representation.

In this example, we scale down their extent so we can see that the spokes of the bicycle can be represented with a handful of Gaussians.

Here we visualize the progress of the 3D Gaussian point cloud during optimization in a time lapse.

In summary 3D Gaussian are the first to achieve state of the art quality real time rendering and a fast training .

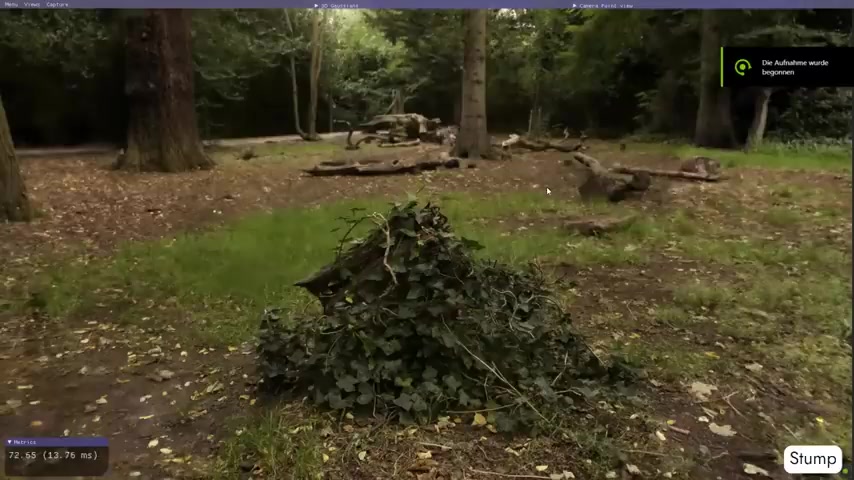

And all of these at the same time here we show some interactive sessions recorded in our lab with an A 6000 GP U .

Please note that real-time rendering can also be achieved with less powerful hardware.

We ran an extensive evaluation and used multiple datasets: Mip-NeRF 360, Tanks and Temples, Deep Blending, and NeRF Synthetic.

We also compared our algorithm against recent methods such as Mip-NeRF 360, Instant-NGP, and Plenoxels.

In our quantitative evaluation, 3D Gaussians achieve overall equal and sometimes better quality than the best models, which are slow to train and render, while maintaining fast training and an order of magnitude faster rendering.

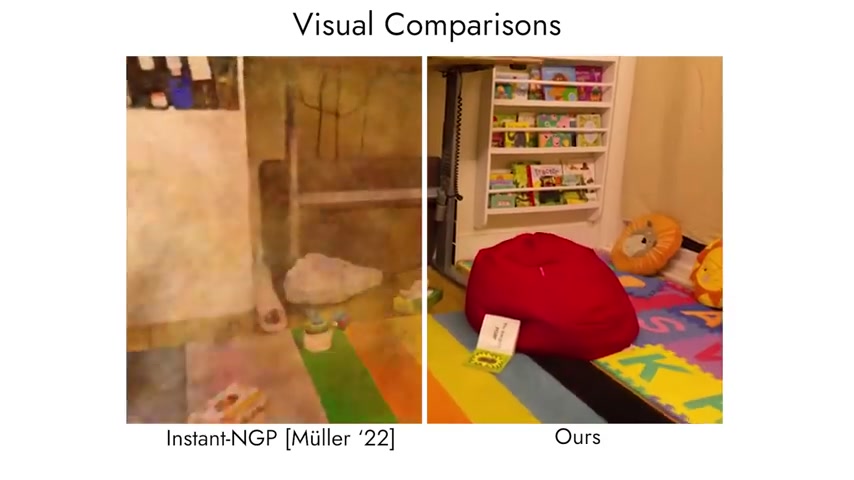

Here, we compare side by side with several algorithms.

In many cases, we are better than Mip-NeRF 360 while rendering faster than 100 frames per second.

We achieve higher visual quality than Instant-NGP with similar training times and fewer failure cases.

We also did a careful ablation study to evaluate the different design choices of our algorithm.

Here we show that even if we stop the training at 7,000 iterations, which takes approximately six minutes, we retain great visual quality.

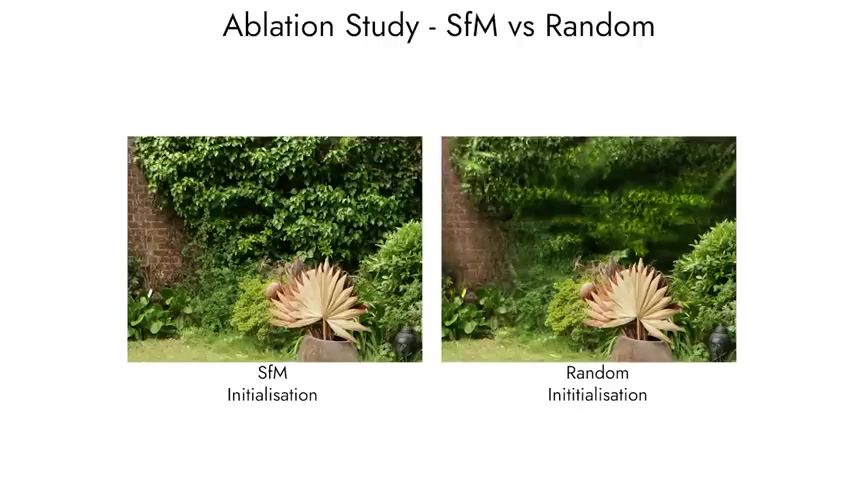

We also show what happens if, instead of using the SfM point cloud for initialization, we initialize with a random set of points sampled uniformly in the scene.
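As a rough sketch of that random initialization, the snippet below draws points uniformly inside an axis-aligned bounding box. The box extents, the point count, and the function name `random_init_points` are all assumptions for illustration; the talk does not say how the sampling volume is chosen.

```python
import random


def random_init_points(n, bbox_min, bbox_max, seed=0):
    """Sample n points uniformly inside an axis-aligned box (assumed bounds)."""
    rng = random.Random(seed)  # seeded for reproducible initialization
    return [
        tuple(rng.uniform(lo, hi) for lo, hi in zip(bbox_min, bbox_max))
        for _ in range(n)
    ]


# Hypothetical scene bounds; in practice they could be derived from the cameras.
points = random_init_points(1000, (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
```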

Another important element of our method is the anisotropy of the 3D Gaussians, which has a big impact on the final quality.

Thank you for listening.

Please visit our website for the paper, the code release, and all the supplemental material.
