https://www.youtube.com/watch?v=FYgxLuhVzhw

New METAHUMANS are TOO REALISTIC in UNREAL ENGINE 5

So wouldn't it be great if we could have this level of deformation complexity but be able to evaluate it in real time?

Well, what we are now able to do is use machine learning to compress that simulation data into a format that can be evaluated at runtime.

So you are looking at our male character with deformation driven by full muscle, flesh, and cloth simulation from Houdini. It's running in real time on PS5, taking around 1/10 of a millisecond on the CPU for network inference.

And as you can see here, around one millisecond on the GPU for morph target evaluation.
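The runtime split described above (network inference on the CPU producing weights, then morph target evaluation on the GPU) can be sketched as follows. This is a minimal pure-Python illustration, not Unreal Engine code: it assumes a single linear layer stands in for the trained network and that each morph target is a list of per-vertex offsets. The names `infer_weights` and `apply_morph_targets` are hypothetical.

```python
from typing import List, Sequence

Vec3 = List[float]


def infer_weights(pose: Sequence[float],
                  layer: Sequence[Sequence[float]],
                  bias: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for network inference: one linear layer
    mapping pose features to morph target weights."""
    return [b + sum(w * p for w, p in zip(row, pose)) for row, b in zip(layer, bias)]


def apply_morph_targets(base: Sequence[Vec3],
                        targets: Sequence[Sequence[Vec3]],
                        weights: Sequence[float]) -> List[Vec3]:
    """Blend per-vertex offsets: v = base + sum_i w_i * delta_i."""
    out = [list(v) for v in base]
    for w, deltas in zip(weights, targets):
        for v, d in zip(out, deltas):
            for axis in range(3):
                v[axis] += w * d[axis]
    return out


# Tiny example: 2 vertices, 2 morph targets, 3 pose features.
base = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
targets = [
    [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0]],  # target 0 moves vertex 0 up
    [[0.0, 0.0, 0.0], [0.0, 0.0, 1.0]],  # target 1 moves vertex 1 forward
]
layer = [[0.5, 0.0, 0.0], [0.0, 0.0, 1.0]]
bias = [0.0, 0.0]
pose = [1.0, 0.3, 0.2]

weights = infer_weights(pose, layer, bias)                 # [0.5, 0.2]
deformed = apply_morph_targets(base, targets, weights)
print(deformed)  # [[0.0, 0.5, 0.0], [1.0, 0.0, 0.2]]
```

The point of the split is that the expensive simulation is baked into the morph targets offline, so the per-frame cost is only a small inference step plus a weighted sum of offsets.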

So this is a fully generalized model.

We have not just trained it on the cinematic animation you can see here.

So on the left is the nearest neighbor model with just the PCA layer active.

And on the right, you can see how the additional nearest neighbor set improves the fold reconstruction.
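A rough sketch of the nearest neighbor idea, assuming the PCA layer has already produced a flattened per-vertex offset vector and that each stored neighbor pose carries a residual correction (for example, the fold detail the PCA layer misses). The function names, the flat offset layout, and matching a single closest neighbor in pose space are illustrative assumptions; the talk does not say how neighbors are matched or blended.

```python
import math
from typing import List, Sequence


def nearest_index(pose: Sequence[float],
                  neighbor_poses: Sequence[Sequence[float]]) -> int:
    """Index of the stored neighbor pose closest to `pose` (Euclidean distance)."""
    return min(range(len(neighbor_poses)),
               key=lambda i: math.dist(pose, neighbor_poses[i]))


def reconstruct(pca_offsets: Sequence[float], pose: Sequence[float],
                neighbor_poses: Sequence[Sequence[float]],
                neighbor_residuals: Sequence[Sequence[float]]) -> List[float]:
    """PCA-layer output plus the residual stored with the nearest neighbor pose."""
    i = nearest_index(pose, neighbor_poses)
    return [base + fix for base, fix in zip(pca_offsets, neighbor_residuals[i])]


# Two stored neighbor poses; only the second carries an extra fold correction.
neighbor_poses = [[0.0, 0.0], [1.0, 1.0]]
neighbor_residuals = [[0.0, 0.0, 0.0], [0.0, 0.25, 0.0]]
pca_offsets = [0.5, 0.25, 0.0]  # flattened offsets predicted by the PCA layer

print(reconstruct(pca_offsets, [0.9, 0.8], neighbor_poses, neighbor_residuals))
# [0.5, 0.5, 0.0]
```

A smoother variant would blend several nearby neighbors instead of switching abruptly to the single closest one.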

So to help generate the optimal set of nearest neighbor poses, we have implemented a k-means pose generator.

Given a set of target animations (typically your game or cinematic animations) and a maximum number of poses, it will generate a set of poses that most efficiently covers that animation space.

We simulated our clothing for these additional poses in Houdini, and then we trained our additional nearest neighbor set using this data.
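The k-means pose generator described above could look roughly like this: cluster all frames of the target animations into at most the maximum number of poses, then snap each cluster center to the closest real frame so every generated pose is one the character actually hits. This is a plain Lloyd's k-means sketch with poses as flat feature vectors; `kmeans_poses` and its parameters are hypothetical, not the engine's tool.

```python
import math
import random
from typing import List, Sequence


def kmeans_poses(frames: Sequence[Sequence[float]], max_poses: int,
                 iterations: int = 20, seed: int = 0) -> List[List[float]]:
    """Pick up to `max_poses` representative frames that cover the animation space."""
    rng = random.Random(seed)
    k = min(max_poses, len(frames))
    # Initialize the cluster centers from randomly chosen frames.
    centers = [list(f) for f in rng.sample(list(frames), k)]
    for _ in range(iterations):
        # Assignment step: each frame joins its closest center.
        clusters: List[List[Sequence[float]]] = [[] for _ in range(k)]
        for f in frames:
            j = min(range(k), key=lambda c: math.dist(f, centers[c]))
            clusters[j].append(f)
        # Update step: move each center to the mean of its members.
        for c, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous center
                centers[c] = [sum(vals) / len(members) for vals in zip(*members)]
    # Snap each center to its nearest real frame and drop duplicates.
    poses: List[List[float]] = []
    for ctr in centers:
        best = list(min(frames, key=lambda f: math.dist(f, ctr)))
        if best not in poses:
            poses.append(best)
    return poses


# Two well-separated groups of 2D "poses".
frames = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]]
poses = kmeans_poses(frames, max_poses=2)
print(sorted(poses))  # [[0.0, 0.0], [5.0, 5.0]]
```

The generated poses would then be the ones sent to Houdini for simulation, as described in the talk.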

We would love for you to come and chat to us at our booth.

You can get hands-on with this demo.

You can change cameras and switch between musculoskeletal, flesh, and clothing layers.

You can pause the action, and you can see the deformation result both with and without the ML Deformer running.

Our guiding vision for MetaHuman has been the democratization of complex character technologies, allowing you to work faster and see the results immediately.

A character is only truly believable if its motion fidelity matches its visual fidelity.

But animating at this level is a hard task for even the most skilled studios. Some of our best work leverages 4D capture.

But this took specialized hardware and weeks or even months of processing time.

While MetaHuman Creator gave you the ability to generate high-quality characters, animating them still wasn't as easy.

This is why I'm very excited to announce a new capability for the MetaHuman product.

MetaHuman Animator. MetaHuman Animator contains the essence of our 4D pipeline, but optimized to run on a single machine.

It is able to use an iPhone as well as professional stereo camera systems.

And today we're going to demonstrate how it works. For this, we're going to need Mel, our technician John Cook, and just the phone.

Yeah. Mel, can you take your position?

Please let me know when you're ready.

OK.

OK.

And... action!

I need performance capture to work like a mirror.

I need it to capture whether I'm acting scared or angry, and sometimes all I need is a look.

Cut.

Thanks, Mel.

That was great.

Yeah, you're welcome.

Ok .

Our technician John is currently pulling Mel's performance from the phone onto his machine, where everything will be processed locally.

We have updated our Live Link Face mobile app to capture all data at the best resolution possible with the device.

MetaHuman Animator uses video and depth data to convert this data into high-fidelity performance animation, and it can even use audio to produce convincing tongue animation.

John is currently scrubbing through the take to pick the section that he wants to process.

John, are we all good with the data?

Awesome.

So from now on, it's just a single button click to kick off the processing, which for a performance of this length will take less than a minute to convert into animation.

So, Mel, while that is processing, let me show you something else.

Oh, is that me?

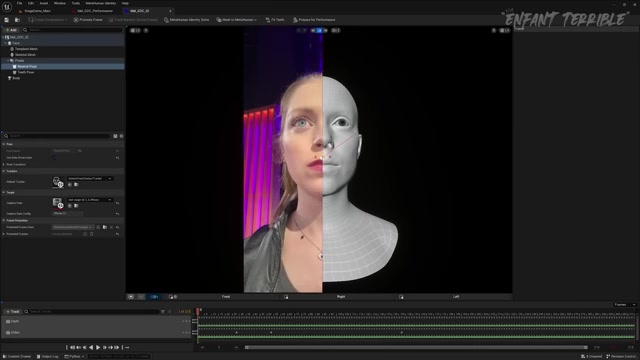

Yeah, this is what we refer to as your MetaHuman DNA.

Cool, and this is generated from the capture we made earlier, right?

Yeah, that's right.

So from only three frames of video and depth data, we can generate a rig that predicts all of your facial expressions in just a couple of minutes.

And do you only need to do this once for each actor?

Yes, that's right.

It calibrates the solver to your face so that we can produce the animation in a way that faithfully reproduces your original performance.

Sounds cool.

Yeah.

So let's check back on the processing, which today is running on the latest CPU and GPU hardware from AMD.

MetaHuman Animator uses a custom Epic facial solver and landmark detector.

We can interactively look at the animation while it's being solved and compare it to your original performance.

So it looks like it's almost finished. After this, it is going to do one more pass to make the curves more stable, which is really quick.
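The talk doesn't say what the final stabilization pass does internally. As a stand-in, a centered moving average is one simple way to damp frame-to-frame jitter in an animation curve; the function below is a hypothetical illustration of that idea, not MetaHuman Animator's actual filter.

```python
from typing import List, Sequence


def smooth_curve(values: Sequence[float], radius: int = 1) -> List[float]:
    """Centered moving average over 2*radius+1 frames; the window shrinks at the ends."""
    n = len(values)
    smoothed: List[float] = []
    for i in range(n):
        start = max(0, i - radius)
        end = min(n, i + radius + 1)
        window = values[start:end]
        smoothed.append(sum(window) / len(window))
    return smoothed


jittery = [0.0, 3.0, 0.0, 3.0, 0.0]  # one curve channel, sampled once per frame
print(smooth_curve(jittery))  # [1.5, 1.0, 2.0, 1.0, 1.5]
```

Larger `radius` values remove more jitter but also soften fast, intentional motion such as blinks, so a production solver would likely use a more careful filter.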

And from here on, we just need to export the animation.

This takes only a few seconds, and then John needs to drop it into the level and add the audio so that we can see the result.

So our MetaHuman should now be ready in the level.

Mel, are you excited to see the results?

I can't wait to see it.

I need performance capture to work like a mirror.

I need it to capture whether I'm acting scared or angry, and sometimes all I need is a look.

Thank you.

So, Mel, what do you think?

I think it's incredible, because it usually takes months between performance capture and getting any results back.

So this is blowing my mind.

And all of this is solved directly onto animator-friendly controls.

In this case, we are using a bespoke 4D rig, which we created together with Ninja Theory for Hellblade II.

But it's also ready to use on any MetaHuman, or any other rig that follows our new MetaHuman standard.

Let's have a look at that.

I need performance capture to work like a mirror.

I need it to capture whether I'm acting scared or angry, and sometimes all I need is a look.

So the same thing works even on stylized characters.

Thank you.

All these technologies are completely redefining our creative process, as they will redefine yours.

We'll release MetaHuman Animator to everyone in just a couple of months.

We've got one more thing we'd like to show you.

We haven't forgotten about the need for full performance capture shoots.

What you're about to see is animation that has not been polished or edited in any way.

And it took MetaHuman Animator just minutes to process, start to finish.

Yeah.

So here's one of my favorite lines from Ninja Theory's upcoming game, Senua's Saga: Hellblade II.

I really hope you enjoy it and the rest of the show.

Thank you very much.

Thank you.

Yeah.

Sign Perth cut.

Take 13.

Nice.

I see through your darkness.

Now I see through her eyes.

I will show them how to see us.

I do.

I will not appease your gods.

I will destroy them.

Good.

You like it?

Yeah.
