LangChain_L6_v03

Sometimes people think of a large language model as a knowledge store, as if it's learned to memorize a lot of information, maybe off the internet.

So when you ask it a question, it can answer the question.

But I think an even more useful way to think of a large language model is sometimes as a reasoning engine, in which you can give it chunks of text or other sources of information.

And then the large language model, the LLM, will maybe use the background knowledge that it learned off the internet, but will also use the new information you give it to help you answer questions, or reason through content, or even decide what to do next.

And that's what LangChain's agents framework helps you to do.

Agents are probably my favorite part of LangChain.

I think they're also one of the most powerful parts, but they're also one of the newer parts.

So we're seeing a lot of stuff emerge here that's really new to everyone in the field.

And so this should be a very exciting lesson as we dive into what agents are, how to create and how to use agents, how to equip them with different types of tools like search engines that come built into LangChain, and then also how to create your own tools so that you can let agents interact with any data stores, any APIs, any functions that you might want them to.

So this is exciting, cutting-edge stuff, but already with important emerging use cases.

So with that, let's dive in.

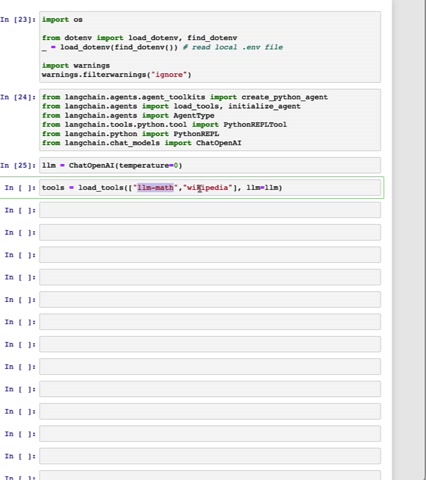

First, we're gonna set the environment variables and import a bunch of stuff that we will use later on.

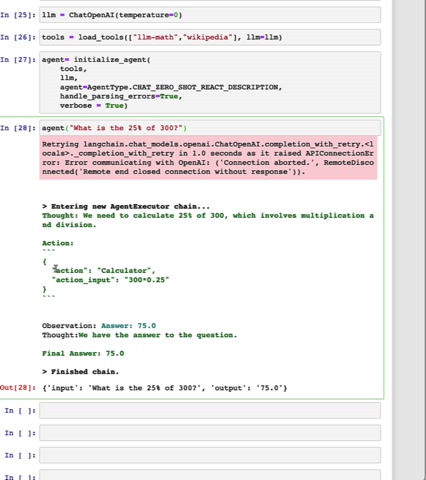

Next, we're gonna initialize a language model.

We're gonna use `ChatOpenAI`.

And importantly, we're gonna set the temperature equal to zero.

This is important because we're gonna be using the language model as the reasoning engine of an agent, where it's connecting to other sources of data and computation.

And so we want this reasoning engine to be as good and as precise as possible.

And so we're gonna set it to zero to get rid of any randomness that might arise.
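To make the point about randomness concrete, here is a toy sketch of temperature-based sampling. It is not how any hosted model is implemented; the function `sample_token` and the example scores are made up for illustration. It only shows that at temperature zero the top-scoring choice is always picked, while a higher temperature lets other choices through.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Pick an index from `logits`; temperature 0 means always take the top one."""
    if temperature == 0:
        # Greedy choice: no randomness at all.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Scale the scores, then sample from the resulting softmax distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    weights = [math.exp(x - peak) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

rng = random.Random(0)
logits = [2.0, 1.5, 0.1]  # hypothetical scores for three candidate tokens
greedy = {sample_token(logits, 0, rng) for _ in range(100)}
sampled = {sample_token(logits, 1.0, rng) for _ in range(100)}
print(greedy)   # {0}: the same choice every time
print(sampled)  # may contain several different indices
```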

Next, we're gonna load some tools.

The two tools that we're gonna load are the LLM math tool and the Wikipedia tool.

The LLM math tool is actually a chain itself, which uses a language model in conjunction with a calculator to do math problems.

The Wikipedia tool is an API that connects to Wikipedia, allowing you to run search queries against Wikipedia and get back results.
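As a rough picture of the calculator half of a math tool, here is a minimal sketch of a function that takes an arithmetic expression as a string and returns the answer as text. This is a hand-rolled stand-in, not LangChain's implementation; the name `calculator` and the "Answer: ..." output format are assumptions for illustration.

```python
import ast
import operator

# Only these arithmetic operators are allowed in expressions.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}

def _eval(node):
    """Evaluate a parsed expression, rejecting anything that is not plain arithmetic."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError("unsupported expression")

def calculator(expression: str) -> str:
    """Hypothetical tool: string in, string out, so a language model can call it."""
    tree = ast.parse(expression, mode="eval")
    return f"Answer: {_eval(tree.body)}"

print(calculator("300*0.25"))  # Answer: 75.0
```

The string-in, string-out shape is the design point: the language model only ever writes and reads text, so the tool has to meet it there.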

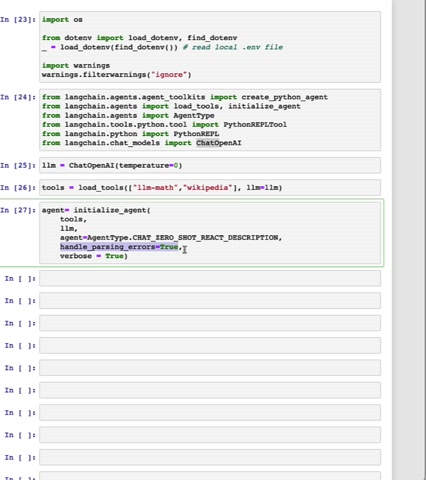

Next, we're gonna initialize an agent.

We're gonna initialize the agent with the tools, the language model, and then an agent type. Here, we're gonna use `CHAT_ZERO_SHOT_REACT_DESCRIPTION`.

The important things to note here are, first, chat: this is an agent that has been optimized to work with chat models.

And second, ReAct: this is a prompting technique designed to get the best reasoning performance out of language models.

We're also gonna pass in `handle_parsing_errors=True`.

This is useful when the language model might output something that is not able to be parsed into an action and action input, which is the desired output.

When this happens, we'll actually pass the misformatted text back to the language model and ask it to correct itself.
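The parse-and-retry idea just described can be sketched in plain Python. This is an illustration under assumptions, not LangChain's code: `parse_action`, `next_action`, the retry limit, and the wording of the correction message are all hypothetical, and `fake_llm` stands in for a real model call.

```python
import json

def parse_action(text):
    """Extract the tool name and tool input from a JSON blob in the model output."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end == -1:
        raise ValueError("no JSON blob found")
    blob = json.loads(text[start:end + 1])
    return blob["action"], blob["action_input"]

def next_action(llm, prompt, max_retries=2):
    """Ask the model for an action; on a parse failure, send the bad text back."""
    text = llm(prompt)
    for _ in range(max_retries):
        try:
            return parse_action(text)
        except (ValueError, KeyError):
            # Hand the misformatted text back and ask the model to fix it.
            text = llm(f"{prompt}\nCould not parse: {text!r}\nPlease reformat your answer.")
    return parse_action(text)

# A fake model that gets the format wrong once, then corrects itself.
replies = iter([
    "I should use the calculator",
    '{"action": "Calculator", "action_input": "300*0.25"}',
])

def fake_llm(prompt):
    return next(replies)

result = next_action(fake_llm, "What is 25% of 300?")
print(result)  # ('Calculator', '300*0.25')
```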

And finally, we're gonna pass in `verbose=True`.

This is going to print out a bunch of steps that make it really clear to us in the Jupyter notebook what's going on.

We'll also set `debug=True` at the global level later on in the notebook,

so we can see in more detail what exactly is happening.
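Putting the pieces named so far together, the setup might look roughly like the sketch below. It assumes a mid-2023 LangChain release (these imports have moved in later versions), the `wikipedia` package, and an OpenAI API key in the environment. The wrapper function `build_agent` is just a convenience for this sketch and is not from the lesson.

```python
def build_agent():
    """Build the agent described above (needs `langchain`, `wikipedia`, and an OpenAI key)."""
    # Imports live inside the function so this file loads without LangChain installed.
    from langchain.agents import AgentType, initialize_agent, load_tools
    from langchain.chat_models import ChatOpenAI

    llm = ChatOpenAI(temperature=0)  # zero temperature for a predictable reasoning engine
    tools = load_tools(["llm-math", "wikipedia"], llm=llm)
    return initialize_agent(
        tools,
        llm,
        agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,
        handle_parsing_errors=True,  # send misformatted output back to the model
        verbose=True,                # print each step as the agent runs
    )
```

With the dependencies in place, `agent = build_agent()` followed by `agent("What is 25% of 300?")` would run a question through it.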

First, we're gonna ask the agent a math question: what is 25% of 300?

This is a pretty simple question, but it will be good to understand what exactly is going on.

So we can see here that when it enters the agent executor chain, it first thinks about what it needs to do.

So it has a thought. It then has an action, and this action is actually a JSON blob corresponding to two things, an action and an action input. The action corresponds to the tool to use.

So here it is the calculator. The action input is the input to that tool.

And here it's a string of 300 times 0.25.

Next, we can see that there's an observation with the answer in a separate color.

This observation, "Answer: 75.0", is actually coming from the calculator tool itself.

Next we go back to the language model.

When the text turns to green, we have the answer to the question, "Final Answer: 75.0".

And that's the output that we get.
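One step of that loop, from the model's text to the tool's observation, can be sketched as follows. The model output here is hard-coded and its exact layout is an assumption (the real prompt format differs in detail); `TOOLS` and the tiny `calculator` stand-in are hypothetical names for this example.

```python
import json

def calculator(expression: str) -> str:
    # Stand-in for the calculator tool; handles only "a*b" for this demo.
    left, right = expression.split("*")
    return f"Answer: {float(left) * float(right)}"

TOOLS = {"Calculator": calculator}

# What the language model might write back: a thought, then an action blob.
model_output = (
    "Thought: I need to compute 25% of 300.\n"
    'Action: {"action": "Calculator", "action_input": "300*0.25"}'
)

# The JSON blob names the tool ("action") and what to pass to it ("action_input").
blob = json.loads(model_output.split("Action:", 1)[1])
observation = TOOLS[blob["action"]](blob["action_input"])
print("Observation:", observation)  # Observation: Answer: 75.0
```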

This is a good time to pause and try out different math problems of your own.

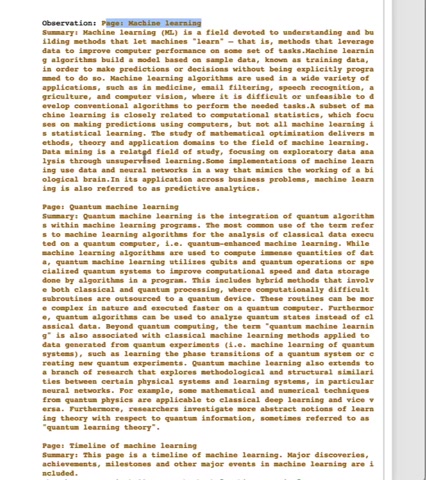

Next, we're gonna go through an example using the Wikipedia API.

Here we're gonna ask it a question about Tom Mitchell, and we can look at the intermediate steps to see what it does.

We can see once again that it thinks, and it correctly realizes that it should use Wikipedia.

It says action equal to Wikipedia and action input equal to Tom M. Mitchell.

The observation that comes back, in yellow this time (we use different colors to denote different tools), is the Wikipedia summary result for the Tom M. Mitchell page.

The observation that comes back from Wikipedia is actually two results, two pages, as there are two different Tom Mitchells.

We can see the first one covers the computer scientist, and the second one looks like it's an Australian footballer.

We can see that the information needed to answer this question, namely the name of the book that he wrote, Machine Learning, is present in the summary of the first Tom Mitchell.

We can see next that the agent tries to look up more information about this book.

So it looks up the Machine Learning book in Wikipedia. This isn't strictly necessary,

and it's an interesting example to show how agents aren't perfectly reliable yet.

We can see that after this lookup, the agent recognizes that it has all the information it needs to answer, and responds with the correct answer, Machine Learning.

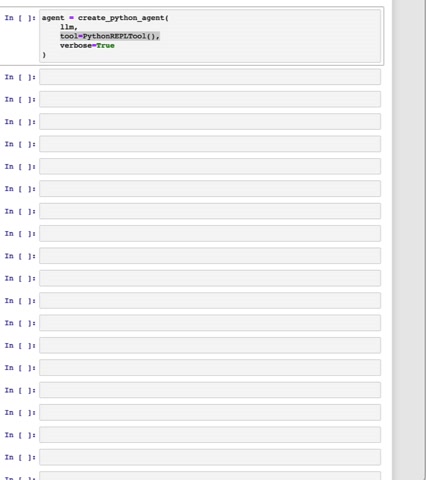

The next example we're gonna go through is a really cool one.

If you've seen things like Copilot, or even ChatGPT with the code interpreter plugin enabled, one of the things they're doing is using the language model to write code and then executing that code. And we can do the same exact thing here.

So we're gonna create a Python agent, and we're gonna use the same LLM as before.

And we're gonna give it a tool,

the Python REPL tool. A REPL is basically a way to interact with code.

You can think of it as a Jupyter notebook.

So the agent can execute code with this REPL. It will then run, and then we'll get back some results, and those results will be passed back into the agent,

so it can decide what to do next.
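To give a feel for what a REPL-style tool does, here is a minimal sketch that runs a string of code and hands back whatever it printed. It is a simplified stand-in, not LangChain's tool; the name `python_repl` is hypothetical, and running `exec` on model-written code is only reasonable in a sandboxed or throwaway environment.

```python
import contextlib
import io

def python_repl(code: str) -> str:
    """Run `code` and return whatever it printed. Never use this on untrusted input."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # fresh namespace for each call
    return buffer.getvalue()

result = python_repl("total = sum(range(5))\nprint(total)")
print(repr(result))  # '10\n'
```

Note that only printed output comes back: code that computes a value without printing it returns an empty string, so the agent would see nothing.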

The problem that we're gonna have this agent solve is we're gonna give it a list of names and then ask it to sort them.

So you can see here, we have a list of names: Harrison Chase, Lang Chain, Elle Elem, Geoff Fusion, Trance Former, Jen Ayai.

And we're gonna ask the agent to first sort these names by last name and then first name, and then print the output.

Importantly, we're asking it to print the output so that it can actually see what the result is. These printed statements are what's gonna be fed back into the language model later on,

so it can reason about the output of the code that it just ran.

Let's give this a try.

We can see that when we go into the agent executor chain, it first realizes that it can use the sorted function to sort the list of customers.

It's using a different agent type under the hood, which is why you can see that the action and action input are actually formatted slightly differently here.

The action that it takes is to use the Python REPL.

And then the action input that you can see is code, where it first writes out customers equals this list.

It then sorts the customers, and then it goes through this list and prints it.

You can see the agent thinks about what to do and realizes that it needs to write some code. The format that it's using for action and action input is actually slightly different than before.

It's using a different agent type under the hood.

For the action, it's gonna use the Python REPL, and for the action input, it's gonna have a bunch of code.

And so if we look at what this code is doing, it's first creating a variable to list out these customer names.

It's then sorting that and creating a new variable, and it's then iterating through that new variable and printing out each line, just like we asked it to. We can see that we get the observation back, and this is a list of names. And then the agent realizes that it's done, and it returns these names.
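The code the agent writes in this step is roughly of the following shape. This is a reconstruction rather than the agent's exact output, and the spelling of the names is an assumption based on the list read out earlier.

```python
# Each entry is [first name, last name]; spellings are assumed for this sketch.
customers = [
    ["Harrison", "Chase"],
    ["Lang", "Chain"],
    ["Elle", "Elem"],
    ["Geoff", "Fusion"],
    ["Trance", "Former"],
    ["Jen", "Ayai"],
]

# Sort by last name first, then by first name to break ties.
sorted_customers = sorted(customers, key=lambda name: (name[1], name[0]))

# Print each name so the result is visible to whoever (or whatever) ran the code.
for first, last in sorted_customers:
    print(first, last)
```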

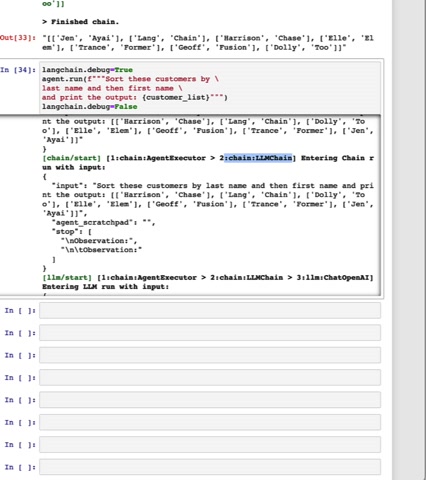

We can see from the stuff that's printed out the high level of what's going on.

But let's dig a little bit deeper and run this with `langchain.debug` set to true, as this prints out all the levels of all the different chains that are going on.

Let's go through them and see what exactly is happening.

So first we start with the agent executor.

This is the top-level agent runner, and we can see that we have here our input: sort these customers by last name and then first name, and then print the output.

From here, we call an LLM chain.

This is the LLM chain that the agent is using.

So the LLM chain, remember, is a combination of a prompt and an LLM.

So at this point, it's only got the input, an agent scratchpad (we'll get back to that later), and then some stop sequences to tell the language model when to stop doing its generations.

At the next level, we see the exact call to the language model.

So we can see the fully formatted prompt, which includes instructions about what tools it has access to, as well as how to format its output.

From there, we can then see the exact output of the language model.

So we can see the text key, where it has the thought and the action and the action input all in one string. It then wraps up the LLM chain as it exits through there.

And the next thing that it calls is a tool.

And here we can see the exact input to the tool.

We can also see the name of the tool, Python REPL.

And then we can see the input, which is this code. We can then see the output of this tool, which is this printed-out string.

And again, this happens because we specifically asked the Python REPL to print out what is going on.

We can then see the next input to the LLM chain, and again, the LLM chain here is the agent.

So here, if you look at the variables, there's the input. This is unchanged.

This is the high-level objective that we're asking.

But now there are some new values for the agent scratchpad. You can see here that this is actually a combination of the previous generation plus the tool output.

And so we're passing this back in so that the language model can understand what happened previously,

and use that to reason about what to do next.
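The scratchpad idea, previous generation plus tool output fed back into the next prompt, can be sketched like this. The exact text layout is an assumption made for illustration, and `build_scratchpad` is a hypothetical helper, not a LangChain function.

```python
def build_scratchpad(steps):
    """Join each earlier (model_output, observation) pair into one block of text."""
    parts = []
    for model_output, observation in steps:
        parts.append(f"{model_output}\nObservation: {observation}\nThought: ")
    return "".join(parts)

# One completed step: what the model wrote, and what the tool printed back.
steps = [("Action: Python REPL\nAction Input: print('hi')", "hi")]
scratchpad = build_scratchpad(steps)

# The original input stays unchanged; only the scratchpad grows between calls.
prompt = f"Question: print a greeting\n{scratchpad}"
print(prompt)
```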

The next few print statements cover what happens as the language model realizes that it is basically finished with its job.

So we can see here the fully formatted prompt to the language model, and the response where it realizes that it is done.

And it says "Final Answer", which here is the sequence that the agent uses to recognize that it's done with its job.

We can then see it exiting the LLM chain and then exiting the agent executor.

This should hopefully give you a pretty good idea of what's going on under the hood inside these agents, and is hopefully instructive as you pause and put in your own objectives for this coding agent to try to accomplish.

This debug mode can also be used to highlight what's going wrong. As shown above in the Wikipedia example, sometimes agents act a little funny,

and so having all this information is really helpful for understanding what's going on.

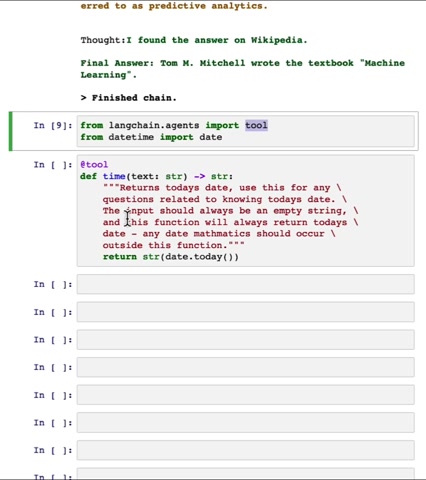

So far, we've used tools that come defined in LangChain already.

But a big power of agents is that you can connect them to your own sources of information, your own APIs, your own data.

So here we're gonna go over how you can create a custom tool so that you can connect it to whatever you want.

Let's make a tool that's gonna tell us what the current date is.

First, we're gonna import this tool decorator.

This can be applied to any function, and it turns it into a tool that LangChain can use.

Next, we're gonna write a function called time, which takes in any text string.

We're not really going to use that, and it's gonna return today's date by calling datetime.

In addition to the name of the function, we're also going to write a really detailed docstring.

That's because this is what the agent will use to know when it should call this tool and how it should call this tool.

For example, here we say that the input should always be an empty string.

That's because we don't use it.

If we have more stringent requirements on what the input should be .

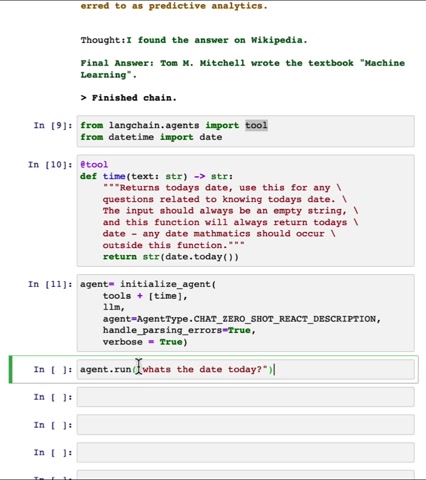

For example , if we have a function that should always take in a search query or a SQL statement , you'll want to make sure to mention that here , we're now gonna create another agent .

This time , we're adding the time tool to the list of existing tools .
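The custom tool described above can be sketched without any library at all. The `tool` decorator below is a toy stand-in written for this example (in the lesson the decorator is imported from LangChain), and the docstring wording is an assumption. What it illustrates is that the function name and docstring are what the agent gets to read when deciding whether and how to call the tool.

```python
from datetime import date

def tool(func):
    """Toy stand-in for a tool decorator: record the name and docstring for the agent."""
    func.name = func.__name__
    func.description = (func.__doc__ or "").strip()
    return func

@tool
def time(text: str) -> str:
    """Returns today's date. Use this for any question about today's date.
    The input should always be an empty string."""
    # The `text` argument is ignored; it exists only because tools take a string.
    return str(date.today())

tools = [time]  # in the lesson, the time tool is added to the built-in tools
print(time.name, "->", time(""))
```

If the tool needed a particular kind of input, such as a search query or a SQL statement, the docstring is the place to say so.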

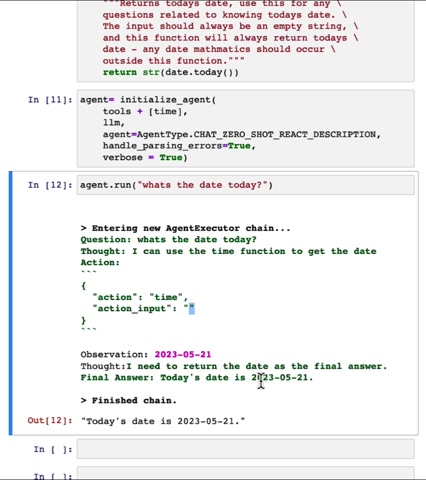

And finally, let's call the agent and ask it what the date today is.

It recognizes that it needs to use the time tool, which it specifies here.

It has the action input as an empty string.

This is great.

This is what we told it to do, and then it returns with an observation.

And then finally, the language model takes that observation and responds to the user:

today's date is 2023-05-21.

You should pause the video here and try putting in different inputs.

This wraps up the lesson on agents.

This is one of the newer, more exciting, and more experimental pieces of LangChain.

So I hope you enjoy using it.

Hopefully, it showed you how you can use a language model as a reasoning engine to take different actions and connect to other functions and data sources.
