https://www.youtube.com/watch?v=3Ar1ABlD_Vs

Create Your First AWS Lambda Function _ AWS Tutorial for Beginners

Hi, friends.

Thanks for tuning in to learn about AWS Lambda.

Let's spend just a couple of minutes talking about some theory, what it is and why you'd want to use it, and then we'll dive into some hands-on work.

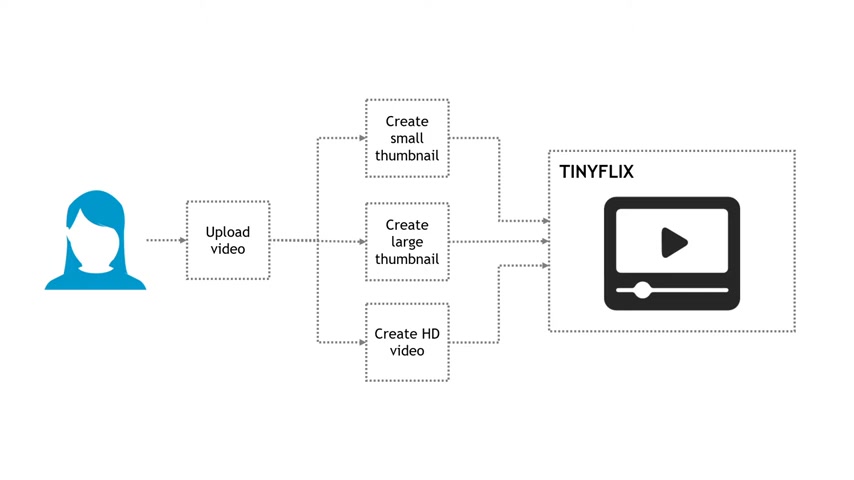

Imagine you're working on a project called Tiny Flicks, a platform that caters specifically to short films under five minutes.

The basic flow looks like this.

A user uploads a video, and then the application needs to do a few things: create a small thumbnail for the video, create a large thumbnail, and create an HD version of the video.

And once all that's done, the video will display on a page where users can watch it.

Let's talk about how AWS Lambda might work in this scenario.

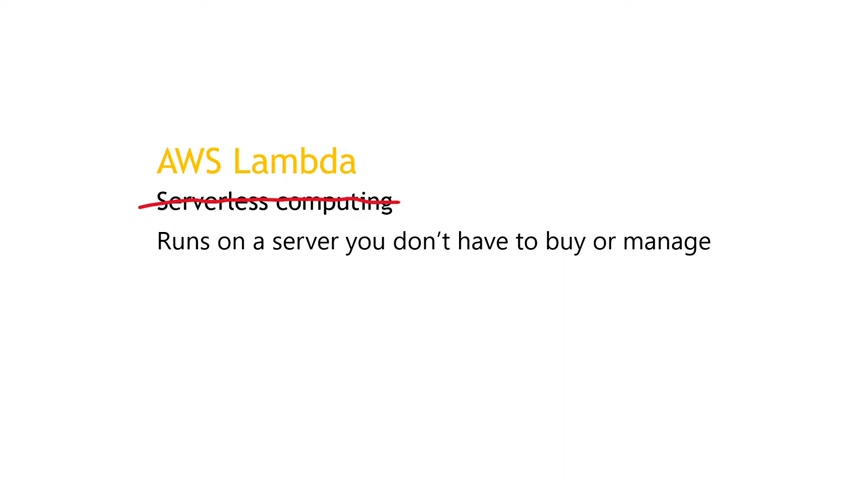

First, you've probably heard that AWS Lambda is serverless computing.

But what exactly does that mean?

Obviously, something has to run your code, right?

So it can't really be serverless.

Well, I think it's helpful to say that it runs on a server that you don't have to buy or manage.

It's still running on a server, but all of the underlying work of provisioning that server, setting it up, allocating memory and so forth, you don't have to worry about.

The server stuff just magically happens in the background.

So it runs on a server you don't have to buy or manage.

What exactly runs, though?

Well, code: a piece of code like a chunk of JavaScript or Python or .NET or what have you.

And each Lambda function will do a discrete task.

Now, when does this code run?

It'll run in response to some event, like something getting uploaded to an S3 bucket or a change happening in a DynamoDB table, for example.

That triggers your Lambda function to run and do its thing.

And just to wrap up this section on definition, some people suggest a better name would actually be AWS Scripts or AWS Functions, if that helps you understand a little bit better what they do.

So: small pieces of code that do a specific thing and are triggered by some event.

Back to our scenario of Tiny Flicks and what needs to happen when a user uploads a video.

These three tasks here in the middle are actually perfect for Lambda functions.

We upload the video, it hits the S3 bucket, and that triggers these functions to run and create the thumbnails and process an HD version of the video.

Just a quick review of benefits here.

When you use Lambda, it really forces you to structure your code in a modular way, where you have short scripts that each do just a single thing.

That leads to the second benefit, which is that you can fine-tune the amount of memory a function needs, which can help you optimize performance and also reduce your costs.

And finally, kind of related to that first point of having each Lambda function do just one thing, this usually means that your application can also support parallel processing, meaning you can do 100 things at a time when you need to.

But at whatever point that jumps to needing to do a million things at a time, the underlying compute power can support that, and it's all handled for you.

And then when you only need to do 100 things at a time again, things can be scaled back down.

So scaling up and scaling down is a breeze.

All right, with that out of the way, let's do a hands-on demo.

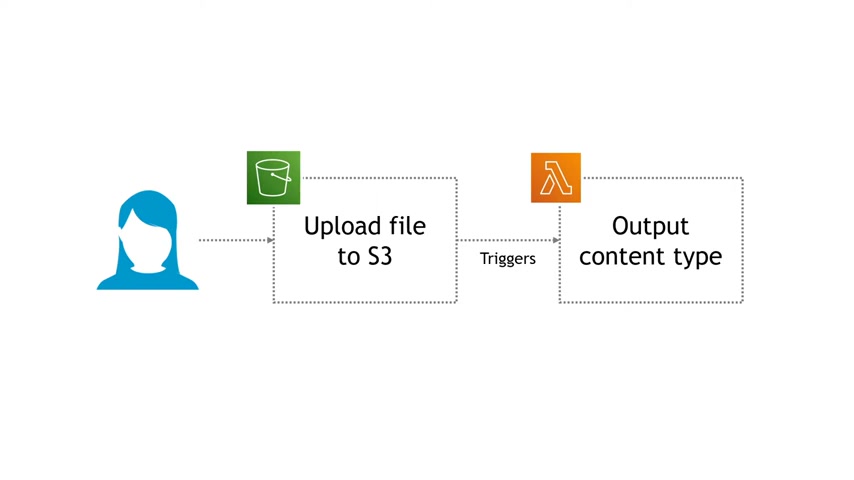

We aren't going to build out the full functionality of the Tiny Flicks project, but we'll do the basics: we upload a file to S3, that triggers our Lambda function, and the Lambda function outputs the content type of the thing that we uploaded, whether that's a video, an image, a text file, that kind of thing.

Now, a quick word about price if you're going to be following along.

Here on the main Lambda page, there's a link to the AWS Free Tier, and you'll see that you get one million free requests per month, and there's additional details here.

We definitely won't be hitting any of those limits with this demo.

But if you're going on to build something bigger, perhaps for your job, just make sure you're aware of the additional pricing details down here.

So check that out.

All right, now over to the AWS Management Console.

If you need to know how to set up an account and get here, check out the video linked above.

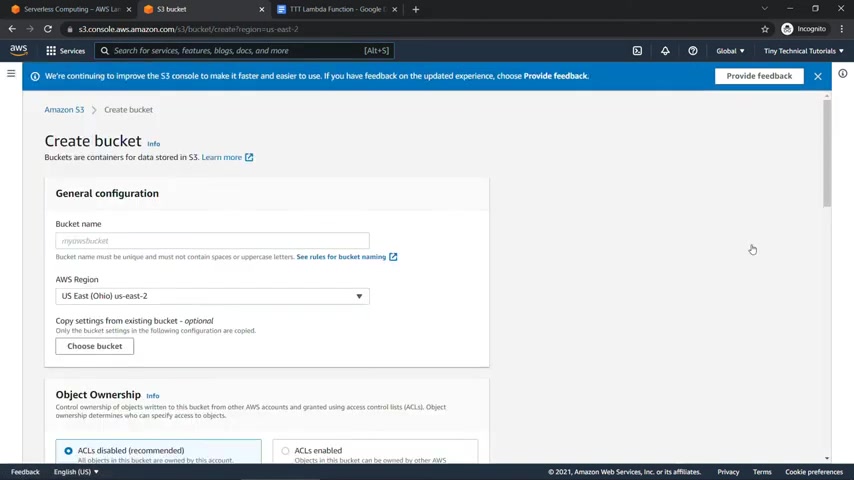

We're gonna start in S3, the Simple Storage Service.

This is where we're going to be uploading our files, and we want that to trigger our Lambda function.

So we'll create a new bucket.

I'll call this tiny flicks and then today's date.

For region, you can pick what you want, but just one potential gotcha: this does need to be the same region where your Lambda function is.

So just keep that in mind.

I'm gonna go with us-east-2, and then for everything else we'll just leave the defaults and say create bucket.

I do also have an S3 video.

Check out that link above if you want to learn more.

OK, then clicking into our bucket here, we'll upload something in just a minute.

But first, let's go work on our Lambda function.

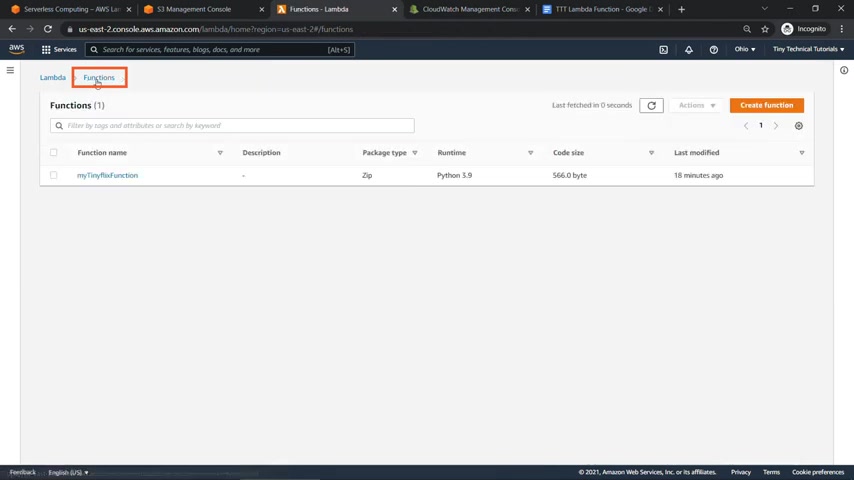

So I'll just open up a new tab here in the console and we'll go to Lambda.

I don't have any functions at the moment, but it's really easy to create one.

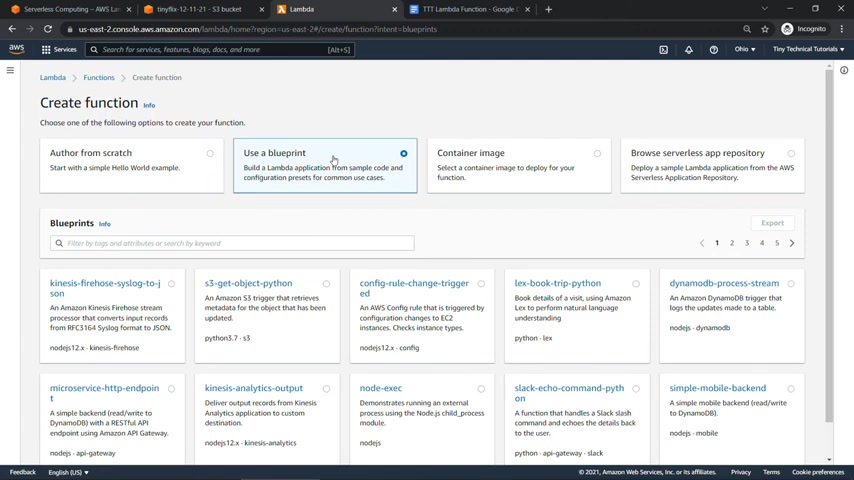

So create function and then you've got a few different options on how you're going to create it .

We're going to start from scratch just because I think it's really helpful to kind of learn what's going on behind the scenes .

But there are some other things you can use here like a blueprint .

This will give you some sample code for common use cases .

There is actually one that works with an S3 trigger here .

If you want to try that out on your own , there's also container images , an app repository and so on .

But let's start from scratch first .

We need to give our function a name .

I'll call this my tiny flicks function for runtime .

There's several options for languages here .

I'm gonna go with Python 3.9 , but you'll see that you can also use dot net , Java Ruby and so on scrolling down .

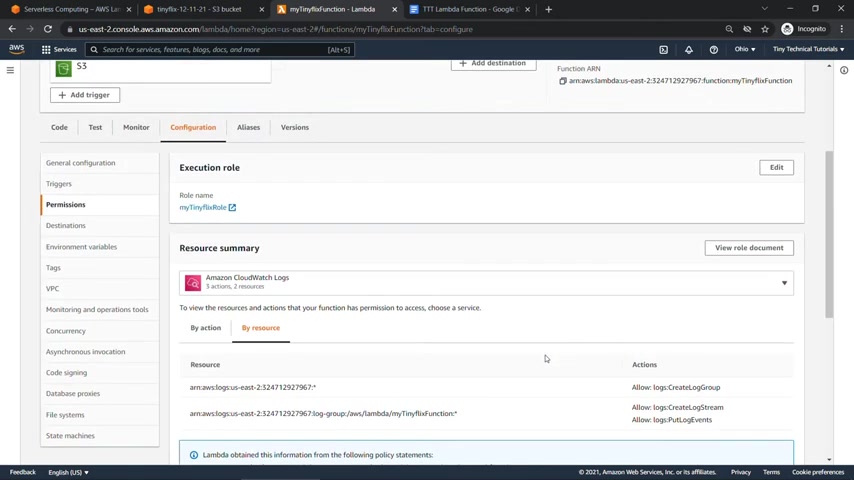

I'll leave the defaults for architecture , but we will need to update permissions .

You see, by default Lambda will create an execution role with permissions to upload logs to CloudWatch.

We'll take a look at those a little bit later, but we need to modify this to give permissions to read from the S3 bucket as well.

So here we're going to say create a new role from AWS policy templates.

For role name, I'll say my tiny flicks role.

And then policy templates: these are optional, but for what we're doing, we need one for S3.

So I'll just type in S3 here to filter down, and we'll do Amazon S3 object read-only permissions, and that's selected there.

There's some additional settings, but we're just going to go with all the defaults and say create function.

And success.

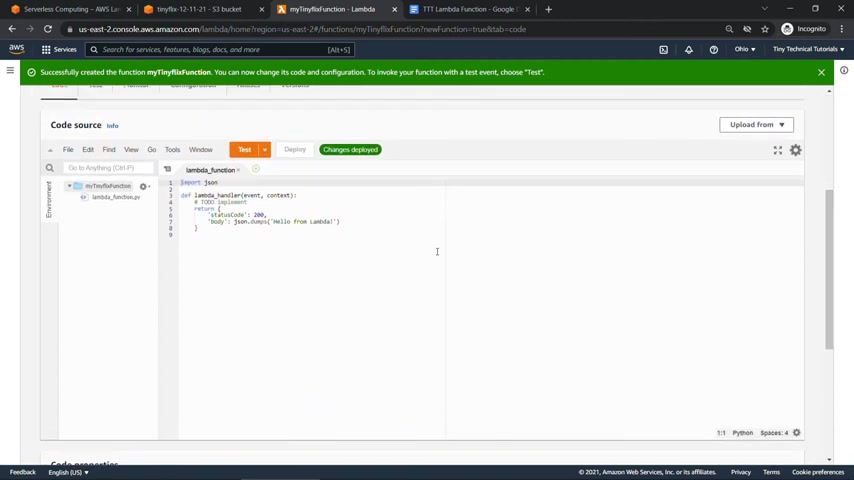

So let's scroll down here to look at the code.

This is just some boilerplate code: Hello from Lambda.

I'm going to replace this, though, with some other code that I have uploaded and linked down in the description.

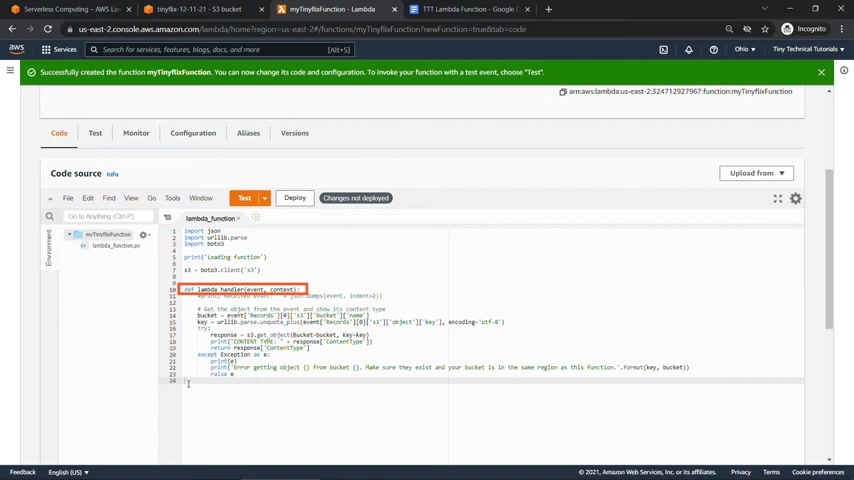

I actually got this code from one of the blueprints that's available for Lambda.

I'll copy this and just replace everything in here.

Still pretty simple code here.

So here's how this works: when Lambda invokes this function, the Lambda runtime is going to pass in two arguments to the handler here.

Those are event and context.

Event is a JSON-formatted document that has data for your function to process.

For example, this is where we're going to get information about the S3 bucket where we upload our file.
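To make the event a bit more concrete, here is a minimal sketch of what an S3 upload event roughly looks like once it's been turned into a Python dictionary, and how you'd pull the bucket and file name out of it. The event below is heavily abbreviated, and the bucket name and file name are made up for illustration; real events carry many more fields.

```python
import urllib.parse

# Abbreviated, hypothetical S3 "object created" event. The bucket name and
# object key are placeholders, not values from the video.
SAMPLE_EVENT = {
    "Records": [
        {
            "eventSource": "aws:s3",
            "eventName": "ObjectCreated:Put",
            "awsRegion": "us-east-2",
            "s3": {
                "bucket": {"name": "tiny-flicks-example"},
                "object": {"key": "my+logo.png", "size": 1024},
            },
        }
    ]
}


def bucket_and_key(event):
    """Return (bucket name, object key) from the first record of an S3 event."""
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    # Object keys arrive URL-encoded (a space shows up as '+'), so decode them.
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
    return bucket, key


print(bucket_and_key(SAMPLE_EVENT))  # -> ('tiny-flicks-example', 'my logo.png')
```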

And then the second argument, context, has methods and properties where you can get information about the runtime environment, the function and the invocation.
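As a rough illustration of the context argument, the sketch below reads a few of the properties the Python runtime exposes on it: `function_name`, `aws_request_id` and `get_remaining_time_in_millis()`. The `describe_invocation` helper and the stand-in context object are made up here so the snippet can run outside of Lambda; inside Lambda you'd just use the `context` you're handed.

```python
from types import SimpleNamespace


def describe_invocation(context):
    """Collect a few details about the current invocation from the context."""
    return {
        "function": context.function_name,
        "request_id": context.aws_request_id,
        "ms_left": context.get_remaining_time_in_millis(),
    }


# Stand-in for the real context object, with placeholder values.
fake_context = SimpleNamespace(
    function_name="my-tiny-flicks-function",
    aws_request_id="example-request-id",
    get_remaining_time_in_millis=lambda: 3000,
)

print(describe_invocation(fake_context))
```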

Basically, all we're doing here is grabbing the bucket information from the event and then the specific object or file we uploaded, and then we're going to print out its content type, such as a video file, an image, a text file and so on.
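The exact code from the video isn't reproduced in this transcript, so what follows is only a sketch of a handler along the lines just described, loosely modeled on the S3 blueprint. The optional `s3_client` parameter is an addition of this sketch, not part of the blueprint; it lets you try the function locally with a stand-in client. Lambda itself only passes `event` and `context`.

```python
import urllib.parse


def lambda_handler(event, context, s3_client=None):
    """Print and return the content type of the object that triggered the event."""
    if s3_client is None:
        # boto3 ships with the Lambda Python runtime. It's imported lazily here
        # so this sketch can also run locally with a stand-in client.
        import boto3
        s3_client = boto3.client("s3")

    # Grab the bucket and the uploaded object's key from the event.
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"], encoding="utf-8")

    try:
        response = s3_client.get_object(Bucket=bucket, Key=key)
    except Exception:
        print(f"Error getting object {key} from bucket {bucket}.")
        raise

    # This print is what ends up in the function's logs.
    print("CONTENT TYPE: " + response["ContentType"])
    return response["ContentType"]
```

In a real function you'd more commonly create the client once at module level so it's reused across invocations; it's created inside the handler here only to keep the sketch easy to test.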

So pretty simple.

Now, very, very importantly, any time you change your code here, you need to deploy it.

So we'll hit deploy and we should be good to go.

All right.

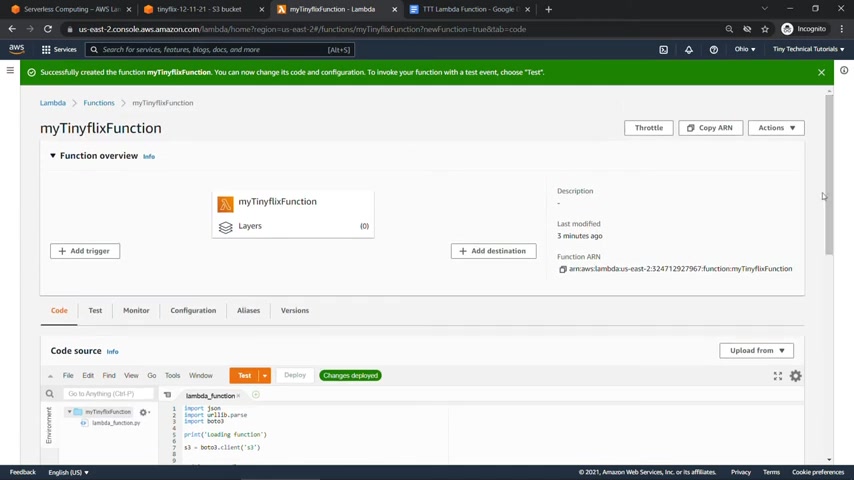

So we have our piece of code.

Remembering back to our definition, though, it's a piece of code that's triggered by some event.

So we need to add a trigger here, and that's gonna be when a new file is uploaded to the S3 bucket.

So over here, we'll say add trigger, select the trigger, and I'll type in S3 to filter that down.

And then we need to choose the bucket that we created earlier.

That was tiny flicks and the date.

Then for the specific event that we're gonna be listening for, we'll leave it at all object create events.

There's other things in here as well, such as when something gets deleted or restored from Glacier, for instance, but we'll go with all object create events and leave everything else the same.

Then just make sure you check this box down here.

This doesn't really apply to us.

But it's basically saying that if you have an input bucket, like our tiny flicks bucket, and you also output something to that same bucket, every time you output, that's gonna trigger the input again, and you'll basically get into a recursive loop.

So it's best practice to have a separate input and output bucket.

But again, we're not doing the output.
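If you do go on to write output, like the thumbnails in the Tiny Flicks scenario, one simple way to protect yourself from that loop is a guard in your code. This is just a sketch: the `choose_output_bucket` helper and both bucket names are made up for illustration, and it isn't something Lambda provides for you.

```python
def choose_output_bucket(input_bucket, output_bucket):
    """Return output_bucket, refusing to write back into the triggering bucket."""
    if output_bucket == input_bucket:
        raise ValueError(
            f"Output bucket {output_bucket!r} is also the trigger bucket; "
            "writing there would invoke the function again in a loop."
        )
    return output_bucket


# Hypothetical bucket names: uploads land in one, results go to another.
print(choose_output_bucket("tiny-flicks-uploads", "tiny-flicks-thumbnails"))
```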

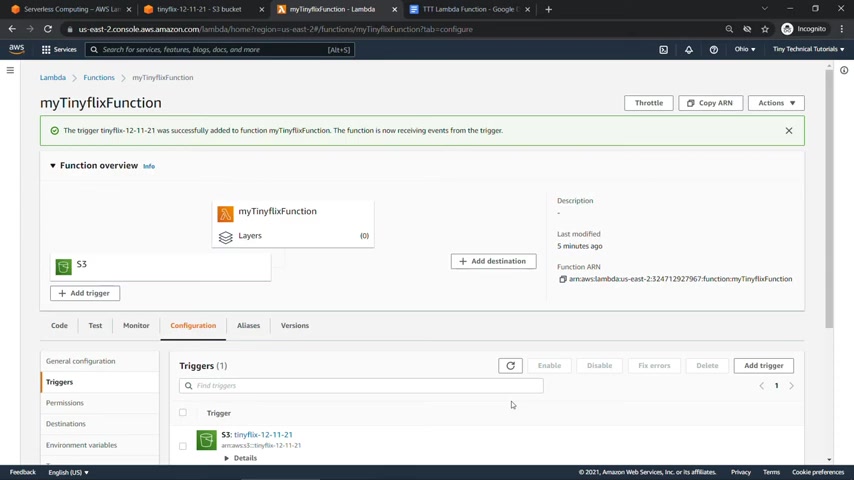

So we'll check that and hit add.

OK, trigger successfully added.

Now, it's not super obvious, but adding that trigger wired up a couple of things on the back end that are going to enable all of this to work.

So if we come over to the S3 bucket here, come into properties and scroll down, you'll see now here under event notifications that we're wired up for the event to notify the Lambda function when things are created.

So that just automatically happened because we added that trigger in the function.

And then back on the Lambda side here, under configuration and then permissions, if we scroll down a little bit and open up this resource-based policy, this policy says that our S3 bucket, the tiny flicks one with the date, is allowed to invoke this Lambda function.

So again, all of this is happening for you because we added that trigger.
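For a sense of what those two pieces look like under the hood, here's an approximate sketch of the bucket's event notification and the function's resource-based policy, written out as Python dictionaries. The account ID, bucket name and function name are all placeholders, and what the console generates for you will include extra fields such as IDs.

```python
import json

REGION = "us-east-2"
ACCOUNT_ID = "123456789012"            # placeholder account ID
BUCKET = "tiny-flicks-example"         # placeholder bucket name
FUNCTION = "my-tiny-flicks-function"   # placeholder function name

function_arn = f"arn:aws:lambda:{REGION}:{ACCOUNT_ID}:function:{FUNCTION}"
bucket_arn = f"arn:aws:s3:::{BUCKET}"

# On the S3 side: notify the function whenever any object is created.
bucket_notification = {
    "LambdaFunctionConfigurations": [
        {"LambdaFunctionArn": function_arn, "Events": ["s3:ObjectCreated:*"]}
    ]
}

# On the Lambda side: allow S3, acting for this bucket, to invoke the function.
resource_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "s3.amazonaws.com"},
            "Action": "lambda:InvokeFunction",
            "Resource": function_arn,
            "Condition": {
                "StringEquals": {"AWS:SourceAccount": ACCOUNT_ID},
                "ArnLike": {"AWS:SourceArn": bucket_arn},
            },
        }
    ],
}

print(json.dumps(bucket_notification, indent=2))
print(json.dumps(resource_policy, indent=2))
```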

So super easy.

All right, there's some additional things here under configuration, but they're a little bit more advanced than what we need for what we're doing.

We have the code, we have our trigger, and now we need to go upload something to the S3 bucket to make sure this works.

So back to the bucket here, and we'll take a look at objects.

There's nothing in here at the moment.

So let's go ahead and upload.

Here on my desktop, I've got my logo, an image file, just because this will upload a lot faster than a video.

I'll drag this over and then we'll hit upload.

And as we do that, that should trigger our Lambda function.

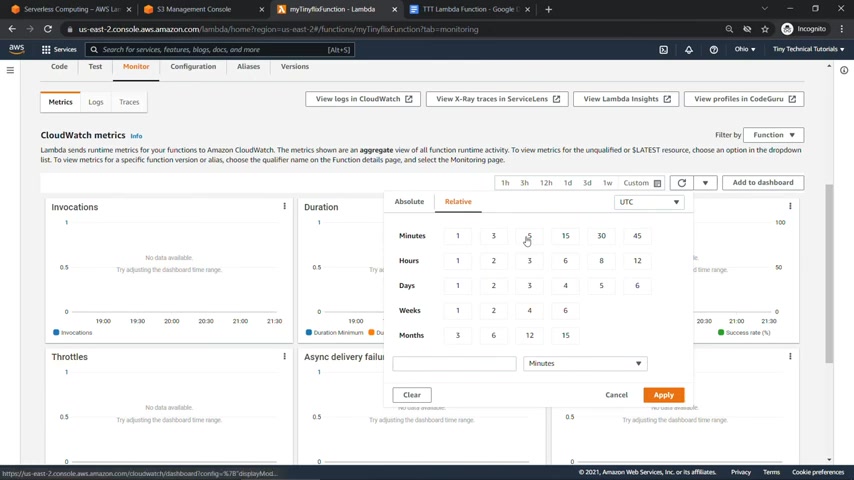

So back to Lambda, we want to come in to monitor here, and here's where you can view your CloudWatch metrics.

It might take a minute or two for this to come in, so don't worry if it's not showing up immediately.

You can adjust your time frame here, though.

Let's say we want to just look at things that have happened in the last five minutes, and you can refresh.

We'll give this a minute to come in.

While we're waiting, though, if you're finding this helpful so far, I'd really appreciate you hitting that like button so it can reach more people, and also consider subscribing for more AWS content like this.

All right, I'll hit refresh again.

What we're looking for is invocations, over here on the left, which should be one, meaning we invoked our Lambda function once because we uploaded that one file.

I'll hit refresh again, and there you go.

There's the one invocation, the duration in milliseconds, and the success rate was one.

We didn't have any errors, and there's some additional things here as well.

If you come into logs over here, you may also need to hit refresh, and more information will show up here.

You can click into the log stream.

This will take you to the CloudWatch console, and you'll see here the content type.

This is what our function output: image/png.

So that's the file that we uploaded to S3, and there you go.
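If you're curious what content types to expect for other kinds of files, Python's standard `mimetypes` module makes a guess from the file extension. This is only an illustration of the idea with made-up file names; it's an assumption that the type S3 stores for an upload would match this guess, since that depends on what the uploading tool sends.

```python
import mimetypes

# Hypothetical file names; only the extension matters for the guess.
for name in ("logo.png", "short-film.mp4", "notes.txt"):
    content_type, _encoding = mimetypes.guess_type(name)
    print(name, "->", content_type)
```

For the `.png` file this prints `image/png`, the same value that showed up in the log.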

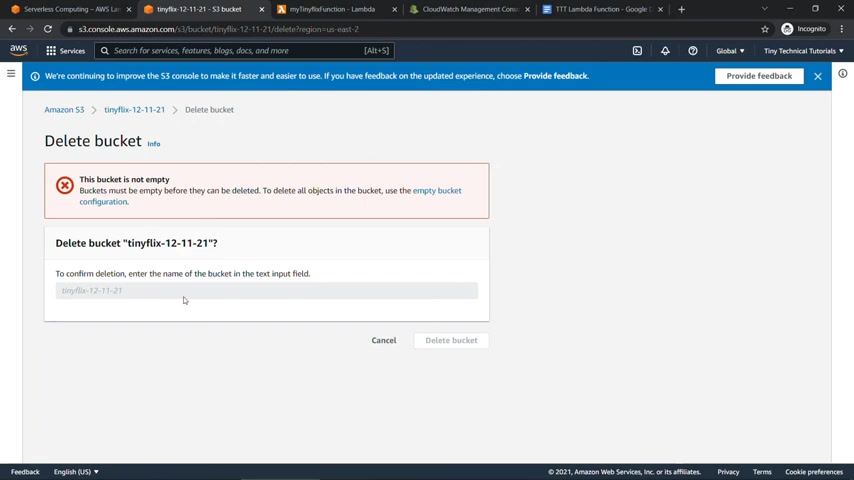

Now, if you're following along, you might want to just go delete your bucket here, back in S3.

I'll select this and delete.

Now, the bucket is not empty, so you'll need to use the empty bucket configuration to clear out all of the files.

You need to confirm by typing permanently delete.

And then once that's done, you can go to the delete bucket configuration, which will delete the bucket. Just type in the name of your bucket.

And that should be good.

Back to Lambda: if you come up to your functions, you can delete this function just by coming up to actions and delete.

You're not gonna be charged for anything if you just leave it here, since you only get charged when your code is running, but just to keep things tidy, I'll get rid of that as well.
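If you'd rather script that cleanup than click through the console, here's a minimal sketch using boto3 client calls (`list_objects_v2`, `delete_objects`, `delete_bucket`). The `empty_and_delete_bucket` helper is made up for this example, the bucket and function names in the comments are placeholders, and it assumes versioning was left off, as it is with the default bucket settings used here.

```python
def empty_and_delete_bucket(s3_client, bucket):
    """Delete every object in the bucket, then the bucket; return objects removed."""
    removed = 0
    kwargs = {"Bucket": bucket}
    while True:
        # Each page lists up to 1,000 objects.
        page = s3_client.list_objects_v2(**kwargs)
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
            removed += len(keys)
        if not page.get("IsTruncated"):
            break
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
    # A bucket can only be deleted once it's empty.
    s3_client.delete_bucket(Bucket=bucket)
    return removed


# Example usage (needs boto3 and AWS credentials; names are placeholders):
#   import boto3
#   empty_and_delete_bucket(boto3.client("s3"), "tiny-flicks-example")
#   boto3.client("lambda").delete_function(FunctionName="my-tiny-flicks-function")
```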

So there you have it: your first Lambda function that works with an S3 trigger.

Thanks so much for watching, and I hope to see you in the next video.
