https://youtu.be/XhLlRS2-BO8?si=WJQ37x7XWeOTTFt4

OpenAI & Microsoft's HUGE AI Announcements (Dev Day Supercut)

Good morning .

Welcome to our first-ever OpenAI DevDay.

About a year ago, November 30th, we shipped ChatGPT as a low-key research preview, and that went pretty well.

We followed that up with the launch of GPT-4, still the most capable model out in the world.

And in the last few months, we launched voice and vision capabilities so that ChatGPT can now see, hear, and speak.

And more recently, we launched DALL·E 3, the world's most advanced image model.

You can use it, of course, inside of ChatGPT.

For our enterprise customers, we launched ChatGPT Enterprise, which offers enterprise-grade security and privacy, higher-speed GPT-4 access, longer context windows, and a lot more.

Today, we've got about 2 million developers building on our API for a wide variety of use cases, doing amazing stuff.

Over 92% of Fortune 500 companies are building on our products.

And we have about 100 million weekly active users now on ChatGPT.

And what's incredible on that is we got there entirely through word of mouth .

People just find it useful and tell their friends.

OpenAI is the most advanced and the most widely used AI platform in the world now.

So now on to the new stuff, and we have got a lot.

Today, we are launching a new model: GPT-4 Turbo.

We've got six major things to talk about for this part .

Number one: context length. A lot of people have tasks that require a much longer context length.

GPT-4 supported up to 8K and, in some cases, up to 32K context length.

GPT-4 Turbo supports up to 128,000 tokens of context.

That's 300 pages of a standard book, 16 times longer than our 8K context.
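As a quick check of the figures just quoted, the sketch below redoes the arithmetic. It treats "8K" as a round 8,000 tokens, which is an assumption; the tokens-per-page value is only what the "300 pages" figure implies, not an official number.

```python
# Figures quoted in the talk; "8K" is treated as a round 8,000 tokens (assumption).
GPT4_CONTEXT_TOKENS = 8_000
GPT4_TURBO_CONTEXT_TOKENS = 128_000
PAGES_QUOTED = 300

# How many times longer the new context window is.
ratio = GPT4_TURBO_CONTEXT_TOKENS / GPT4_CONTEXT_TOKENS

# Tokens per page implied by "300 pages of a standard book".
tokens_per_page = GPT4_TURBO_CONTEXT_TOKENS / PAGES_QUOTED

print(f"Context ratio: {ratio:.0f}x")
print(f"Implied tokens per page: {tokens_per_page:.0f}")
```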

And in addition to a longer context length , you'll notice that the model is much more accurate over a long context .

Number two: more control. We've heard loud and clear that developers need more control over the model's responses and outputs.

So we've addressed that in a number of ways. We have a new feature called JSON mode, which ensures that the model will respond with valid JSON.
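A minimal sketch of what a JSON mode request might look like. It only builds the request body for the Chat Completions endpoint and parses a reply; it does not call the API. The model name, the prompts, and the sample reply are assumptions for illustration and are not taken from the talk.

```python
import json


def build_json_mode_request(user_prompt: str) -> dict:
    """Build a Chat Completions request body with JSON mode switched on."""
    return {
        "model": "gpt-4-1106-preview",  # assumed model name, not given in the talk
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode expects the word "JSON" to appear somewhere in the messages.
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_reply(reply_text: str) -> dict:
    """Parse the model's reply; json.loads raises ValueError on invalid JSON."""
    return json.loads(reply_text)


body = build_json_mode_request("List three travel destinations.")
print(json.dumps(body, indent=2))

# A hypothetical reply, used only to show the parsing step.
sample_reply = '{"destinations": ["Paris", "Tokyo", "Lima"]}'
print(parse_reply(sample_reply)["destinations"])
```

Even with JSON mode on, parsing inside a `try`/`except ValueError` is a reasonable precaution, since a reply cut off by a token limit may be incomplete.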

The model is also much better at function calling .

You can now call many functions at once, and it'll do better at following instructions in general.

We're also introducing a new feature called reproducible outputs.

You can pass the seed parameter, and it'll make the model return consistent outputs. This, of course, gives you a higher degree of control over model behavior.

This rolls out in beta today .
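A small sketch of how the seed parameter might be used. It builds two identical request bodies rather than calling the API; the model name, the prompt, and the seed value are assumptions. Consistency also depends on keeping every other parameter the same between requests.

```python
def build_seeded_request(prompt: str, seed: int) -> dict:
    """Build a Chat Completions request body that pins the sampling seed."""
    return {
        "model": "gpt-4-1106-preview",  # assumed model name, not given in the talk
        "seed": seed,                   # same seed plus same parameters aims for the same output
        "temperature": 0,
        "messages": [{"role": "user", "content": prompt}],
    }


# Two requests that should be treated as "the same" by the reproducibility feature.
first = build_seeded_request("Name a city in France.", seed=42)
second = build_seeded_request("Name a city in France.", seed=42)
print(first == second)
```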

All right .

Number three , better world knowledge .

We're launching retrieval in the platform.

You can bring knowledge from outside documents or databases into whatever you're building .
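To illustrate the general idea of retrieval, here is a toy sketch that picks the document sharing the most words with a question and places it in the prompt. This is not how the platform's retrieval works internally (the talk does not say); the documents, function names, and scoring rule are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical documents standing in for outside knowledge.
docs = [
    "The flight to Paris departs at 5 pm.",
    "The apartment in Paris has two bedrooms and a balcony.",
    "Refunds are processed within ten business days.",
]
question = "When does my flight to Paris depart?"
context = retrieve(question, docs)

# The retrieved text is placed in the prompt so the model can ground its answer.
prompt = f"Answer using this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```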

We're also updating the knowledge cutoff.

We are just as annoyed as all of you, probably more, that GPT-4's knowledge about the world ended in 2021.

We will try to never let it get that out of date again.

GPT-4 Turbo has knowledge about the world up to April of 2023, and we will continue to improve that over time.

Number four: new modalities. Surprising no one, DALL·E 3, GPT-4 Turbo with vision, and the new text-to-speech model are all going into the API today.

GPT-4 Turbo can now accept images as inputs via the API and can generate captions, classifications, and analysis.

For example, Be My Eyes uses this technology to help people who are blind or have low vision with their daily tasks, like identifying products in front of them.
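A minimal sketch of an image-input request body for the Chat Completions endpoint. It does not send anything; the model name, the question, and the image URL are assumptions for illustration.

```python
def build_vision_request(question: str, image_url: str) -> dict:
    """Build a Chat Completions request body that pairs a question with an image."""
    return {
        "model": "gpt-4-vision-preview",  # assumed model name, not given in the talk
        "max_tokens": 300,
        "messages": [
            {
                "role": "user",
                # The content is a list so text and image parts can be mixed.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


# Hypothetical question and placeholder image URL.
body = build_vision_request(
    "What product is in this photo?",
    "https://example.com/product.jpg",
)
print(body["messages"][0]["content"])
```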

And with our new text-to-speech model, you'll be able to generate incredibly natural-sounding audio from text in the API, with six preset voices to choose from.

I'll play an example .

Did you know that Alexander Graham Bell, the eminent inventor, was enchanted by the world of sounds?

His ingenious mind led to the creation of the gramophone, which etches sounds onto wax, making voices whisper through time.

This is much more natural than anything else we've heard out there.

Voice can make apps more natural to interact with and more accessible .

It also unlocks a lot of use cases, like language learning and voice assistance.
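A short sketch of a text-to-speech request body. The talk mentions six preset voices without naming them; the voice names and the model name below come from the API documentation as I understand it at launch, so treat them as assumptions. The code only builds and validates the body and does not call the API.

```python
# Assumed names of the six preset voices; the talk does not list them.
PRESET_VOICES = ("alloy", "echo", "fable", "onyx", "nova", "shimmer")


def build_speech_request(text: str, voice: str = "alloy") -> dict:
    """Build a request body for the speech endpoint, rejecting unknown voices."""
    if voice not in PRESET_VOICES:
        raise ValueError(f"Unknown voice: {voice!r}")
    return {"model": "tts-1", "input": text, "voice": voice}  # "tts-1" is assumed


body = build_speech_request("Welcome to Paris.", voice="nova")
print(body)
```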

Speaking of new modalities, we're also releasing the next version of our open-source speech recognition model, Whisper V3, today, and it'll be coming soon to the API. It features improved performance across many languages, and we think you're really gonna like it.

Ok .

Number five: customization. You may want a model to learn a completely new knowledge domain or to use a lot of proprietary data.

So today, we're launching a new program called Custom Models.

With Custom Models, our researchers will work closely with a company to help them make a great custom model, especially for them and their use case, using our tools.

In the interest of expectations, at least initially, it won't be cheap.

But if you're excited to push things as far as they can currently go , please get in touch with us and we think we can do something pretty great .

OK ?

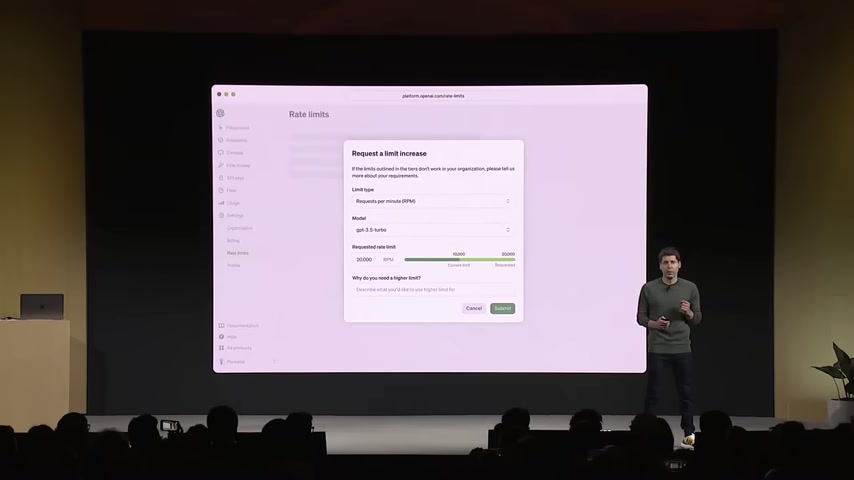

And then number six: higher rate limits. We're doubling the tokens per minute for all of our established GPT-4 customers, so that it's easier to do more.

In addition to these rate limits, we're introducing Copyright Shield. Copyright Shield means that we will step in and defend our customers and pay the costs incurred if you face legal claims around copyright infringement.

And this applies both to ChatGPT Enterprise and the API.

And let me be clear, this is a good time to remind people:

We do not train on data from the API or ChatGPT Enterprise, ever.

There's actually one more developer request that's been even bigger than all of these .

And that's pricing .

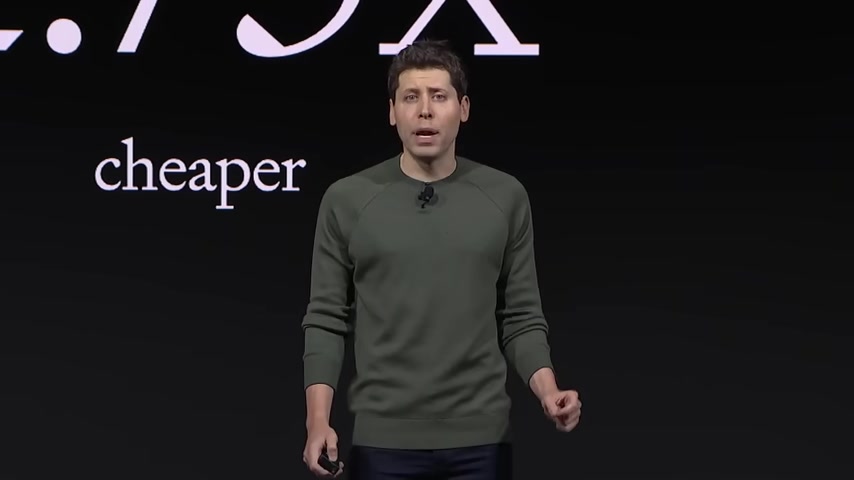

And GPT-4 Turbo, a better model, is considerably cheaper than GPT-4, by a factor of 3x for prompt tokens and 2x for completion tokens, starting today.

The new pricing is one cent per 1,000 prompt tokens and three cents per 1,000 completion tokens. For most customers, that will lead to a blended rate more than 2.75 times cheaper to use for GPT-4 Turbo than GPT-4.
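The blended figure depends on the mix of prompt and completion tokens. The sketch below works it through: the GPT-4 Turbo prices are the ones quoted, the GPT-4 prices are derived from the stated 3x and 2x factors, and the 9-to-1 workload is a hypothetical mix chosen to show where 2.75 comes from.

```python
# Prices in cents per 1,000 tokens. GPT-4 Turbo prices are quoted in the talk;
# GPT-4 prices are derived from the stated 3x (prompt) and 2x (completion) factors.
TURBO_PROMPT, TURBO_COMPLETION = 1, 3
GPT4_PROMPT, GPT4_COMPLETION = 3 * TURBO_PROMPT, 2 * TURBO_COMPLETION


def cost_cents(prompt_tokens, completion_tokens, prompt_price, completion_price):
    """Total cost in cents for a given token mix and per-1,000-token prices."""
    return (prompt_tokens * prompt_price + completion_tokens * completion_price) / 1000


def savings_factor(prompt_tokens, completion_tokens):
    """How many times cheaper GPT-4 Turbo is than GPT-4 for this token mix."""
    old = cost_cents(prompt_tokens, completion_tokens, GPT4_PROMPT, GPT4_COMPLETION)
    new = cost_cents(prompt_tokens, completion_tokens, TURBO_PROMPT, TURBO_COMPLETION)
    return old / new


# Hypothetical workload: 9,000 prompt tokens for every 1,000 completion tokens.
print(savings_factor(9_000, 1_000))

# An even mix saves less, because completion tokens only dropped by 2x.
print(savings_factor(1_000, 1_000))
```

Under these prices the factor always falls between 2 and 3, and it exceeds 2.75 only when prompts outweigh completions by more than 9 to 1.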

We worked super hard to make this happen .

We hope you're as excited about it as we are.

In all of this, we're lucky to have a partner who is instrumental in making it happen.

So I'd like to bring out a special guest , Satya Nadella , the CEO of Microsoft .

Thank you so much .

Thank you , Satya .

Thanks so much for coming here .

How is Microsoft thinking about the partnership currently?

First, we love you guys.

Look, it's been fantastic for us.

You guys have built something magical.

I mean, quite frankly, there are two things for us when it comes to the partnership.

The first is these workloads. It's just so different.

I've been in this infrastructure business for three decades.

No one has ever seen infrastructure like this. And the workload, the pattern of the workload: these training jobs are so synchronous and so large and so data parallel.

And so the first thing that we have been doing is building in partnership with you the system all the way from thinking from power to the DC to the rack , to the accelerators to the network .

And just , you know , really the shape of Azure is drastically changed and is changing rapidly in support of these models that you're building .

And so our job number one is to build the best system so that you can build the best models and then make that all available to developers .

Great .

And how do you think about the future: the future of the partnership, or the future of AI, or whatever?

There are a couple of things for me that I think are going to be very , very key for us , right ?

One is I just described how the systems that are needed as you aggressively push forward on your road map requires us to be on the top of our game .

And we intend fully to commit ourselves deeply to making sure you all as builders of these foundation models have not only the best systems for training and inference , but the most compute so that you can keep pushing on the frontiers .

And then of course , we are very grounded in the fact that safety matters and safety is not something that you care about later , but it's something we do shift left on and we are very , very focused on that with you all .

Great .

Well , I think we have the best partnership in tech .

I'm excited for us to build AGI together.

I'm really excited to have a friend .

Thank you very much for coming .

OK .

Even though this is a developer conference, we can't resist making some improvements to ChatGPT.

So a small one: ChatGPT now uses GPT-4 Turbo with all the latest improvements, including the latest knowledge cutoff, which will continue to update. That's all live today.

It can now browse the web when it needs to, write and run code, analyze data, take and generate images, and much more.

And we heard your feedback .

That model picker: extremely annoying.

That is gone starting today.

You will not have to click around the drop-down menu.

ChatGPT will just know what to use and when you need it.

But that's not the main thing. I wanna talk about where we're headed and the main thing we're here to talk about today.

We know that people want AI that is smarter, more personal, more customizable, and can do more on your behalf.

Eventually you'll just ask a computer for what you need and it'll do all of these tasks for you .

These capabilities are often talked about in the AI field as agents. The upsides of this are going to be tremendous.

So today , we're taking our first small step that moves us towards this future .

We're thrilled to introduce GPTs. GPTs are tailored versions of ChatGPT for a specific purpose.

You can build a GPT, a customized version of ChatGPT, for almost anything, with instructions, expanded knowledge, and actions, and then you can publish it for others to use.

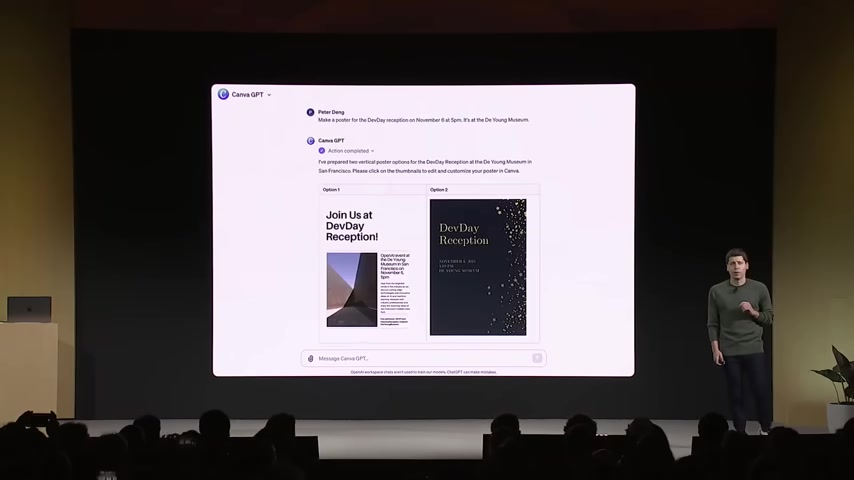

So first, let's look at a few examples. Canva has built a GPT that lets you start designing by describing what you want in natural language.

If you say, "Make a poster for DevDay reception this afternoon, this evening," and you give it some details, it'll generate a few options to start with by hitting Canva's APIs.

Now this concept may be familiar to some of you .

We've evolved our plugins to be custom actions for GPTs.

You can keep chatting with this to see different iterations .

And when you see one you like, you can click through to Canva for the full design experience.

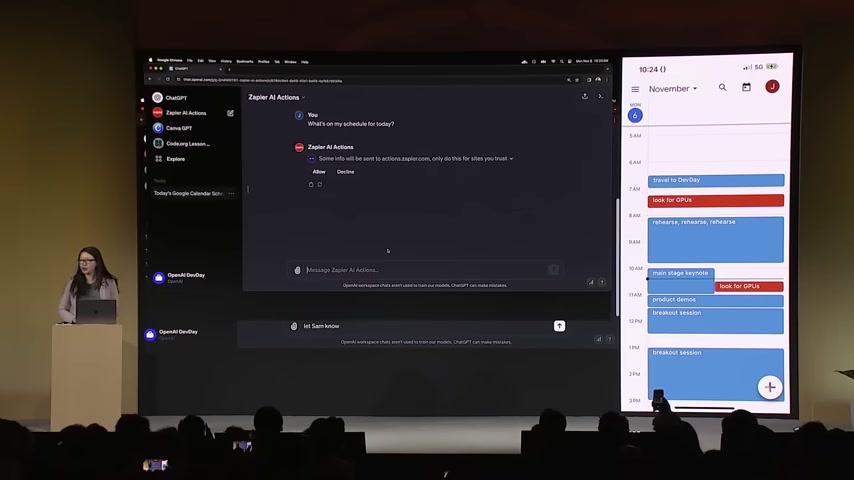

So now we'd like to show you a GPT live. Zapier has built a GPT that lets you perform actions across 6,000 applications to unlock all kinds of integration possibilities.

I'd like to introduce Jessica , one of our solutions architects who is going to drive this demo .

Welcome , Jessica .

My name is Jessica Shieh. I work with partners and customers to bring their product alive.

And today I can't wait to show you how hard we've been working on this.

So to start, where your GPTs will live is on this upper left corner. I'm gonna start with clicking on the Zapier AI Actions.

And on the right hand side , you can see that's my calendar for today to start .

I can ask what's on my schedule for today .

We built GPTs with security in mind.

So before it performs any action or shares data, it will ask for your permission.

So right here, I'm gonna say "Allow."

So the GPT is designed to take in your instructions, make the decision on which capability to call to perform that action, and then execute that for you.

So you can see right here it's already connected to my calendar .

It pulls in my information, and then I've also prompted it to identify conflicts on my calendar.

So you can see right here .

It actually was able to identify that .

So it looks like I have something coming up .

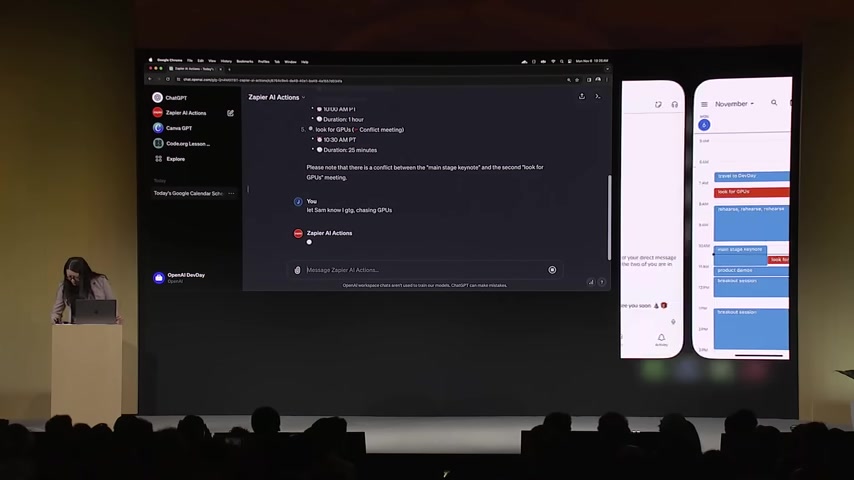

So what if I wanna let Sam know that I have to leave early ?

So right here I say, "Let Sam know I gotta go chasing GPUs."

So with that, I'm gonna swap to my conversation with Sam, and then I'm gonna say, "Yes, please run that."

Sam, did you get that?

I did.

Awesome .

So this is only a glimpse of what is possible and I cannot wait to see what you all will build .

Thank you and back to you , Sam .

Thank you Jessica .

So, later this month, we're going to launch the GPT Store. You can list a GPT there, and we'll be able to feature the best and the most popular GPTs.

Of course, we'll make sure that GPTs in the store follow our policies before they're accessible.

Revenue sharing is important to us.

We're going to pay people who build the most useful and the most used GPTs a portion of our revenue.

We're excited to foster a vibrant ecosystem with the GPT Store.

Just from what we've been building ourselves over the weekend , we're confident there's gonna be a lot of great stuff .

We're excited to share more information soon .

So those are GPTs, and we can't wait to see what you'll build.

These experiences are great, but they have been hard to build, sometimes taking months and teams of dozens of engineers. There's a lot to handle to make this custom assistant experience.

So today, we're making it a lot easier with our new Assistants API.

The Assistants API includes persistent threads, so they don't have to figure out how to deal with long conversation history; built-in retrieval; code interpreter, a working Python interpreter in a sandbox environment; and, of course, the improved function calling that we talked about earlier.

So we'd like to show you a demo of how this works .

And here is Romain, our head of developer experience.

Today, we're launching new modalities in the API, but we are also very excited to improve the developer experience for you all to build assistive agents.

So let's dive right in .

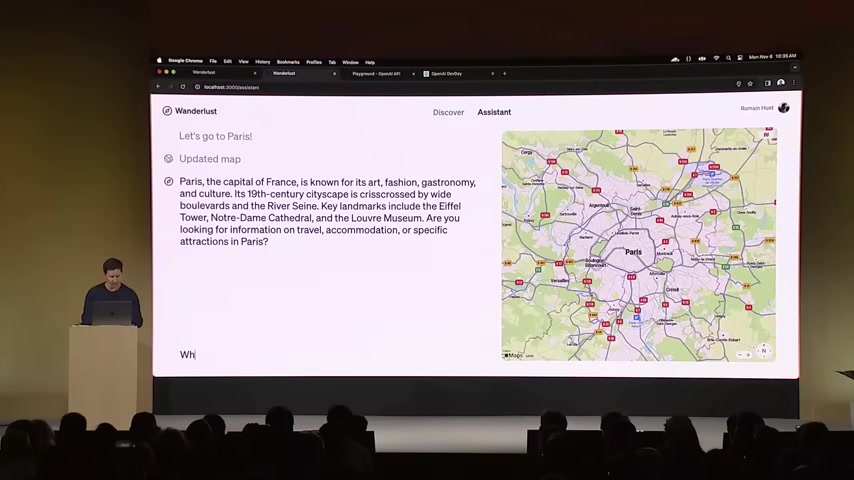

Imagine I'm building Wanderlust , the travel app for global explorers .

And this is the landing page .

I've actually used GPT-4 to come up with these destination ideas.

And for those of you with a keen eye, these illustrations are generated programmatically using the new DALL·E 3 API, available to all of you today.

So it's pretty remarkable .

But let's enhance this app by adding a very simple assistant to it .

This is the screen .

We're gonna come back to it in a second.

First, I'm gonna switch over to the new Assistants playground.

Creating an assistant is easy .

You just give it a name , some initial instructions , a model .

In this case, I'll pick GPT-4 Turbo. And here, I'll also go ahead and select some tools. I'll turn on code interpreter, and that's it.

Our assistant is ready to go .
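The same setup (a name, initial instructions, a model, and tools) can be sketched as the request body an app might send to create an assistant. This only builds the body and does not call the API; the model name and the instruction text are assumptions, not what was typed in the demo.

```python
def build_assistant_request(name: str, instructions: str) -> dict:
    """Build a request body for creating an assistant with code interpreter enabled."""
    return {
        "name": name,
        "instructions": instructions,
        "model": "gpt-4-1106-preview",            # assumed model name
        "tools": [{"type": "code_interpreter"}],  # the tool switched on in the demo
    }


# Hypothetical instructions; the demo's actual wording is not in the transcript.
body = build_assistant_request(
    name="Wanderlust",
    instructions="You help travelers plan trips and answer questions about destinations.",
)
print(body)
```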

If I say , hey , let's go to Paris .

All right .

That's it .

With just a few lines of code, users can now have a very specialized assistant right inside the app.

So here, if I carry on and say, "Hey, what are the top 10 things to do?", I'll have the assistant respond to that again.

And here, what's interesting is that the assistant knows about functions, including those to annotate the map that you see on the right.

And so now all of these pins are dropping in real time here .
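A sketch of how an app might run the function calls a model requests, several at once. The `add_map_pin` function, the dispatch table, and the sample calls are hypothetical; the shape of each call (a function name plus JSON-encoded arguments) follows the function calling format as I understand it, and the demo's real function names are not shown in the transcript.

```python
import json

pins = []  # stands in for the map shown in the demo


def add_map_pin(name: str, latitude: float, longitude: float) -> str:
    """Hypothetical app function: record a pin and report what was added."""
    pins.append({"name": name, "lat": latitude, "lng": longitude})
    return f"Pinned {name}"


# Maps the function names exposed to the model onto real Python callables.
AVAILABLE_FUNCTIONS = {"add_map_pin": add_map_pin}


def run_tool_calls(tool_calls: list[dict]) -> list[str]:
    """Run each requested function with its JSON-encoded arguments."""
    results = []
    for call in tool_calls:
        function = AVAILABLE_FUNCTIONS[call["function"]["name"]]
        arguments = json.loads(call["function"]["arguments"])
        results.append(function(**arguments))
    return results


# Hypothetical model output requesting two calls in a single turn.
requested = [
    {"function": {"name": "add_map_pin",
                  "arguments": '{"name": "Eiffel Tower", "latitude": 48.8584, "longitude": 2.2945}'}},
    {"function": {"name": "add_map_pin",
                  "arguments": '{"name": "Louvre Museum", "latitude": 48.8606, "longitude": 2.3376}'}},
]
print(run_tool_calls(requested))
```

Keeping an explicit table of allowed functions means the model can only trigger code the app has chosen to expose.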

Yeah , it's pretty cool .

And that integration allows our natural language interface to interact fluidly with components and features of our app .

And it truly showcases now the harmony you can build between AI and UI, where the assistant is actually taking action.

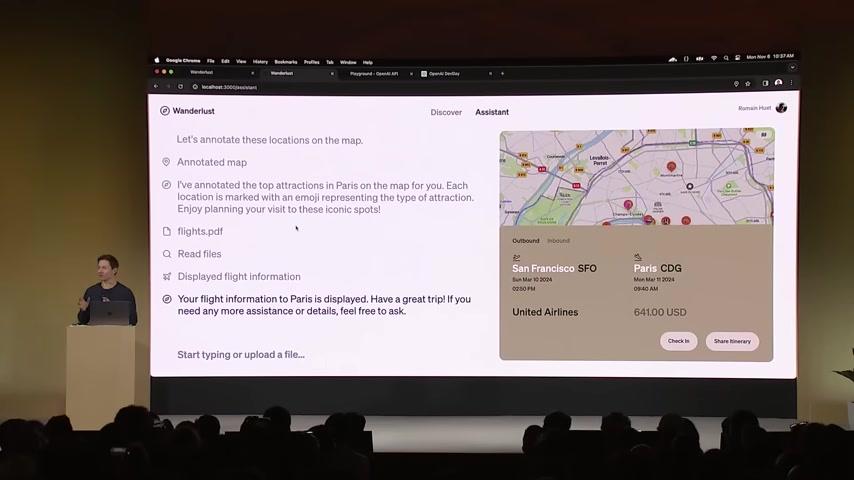

But next , let's talk about retrieval and retrieval is about giving our assistant more knowledge beyond these immediate user messages .

In fact , I got inspired and I already booked my tickets to Paris .

So I'm just going to drag and drop this PDF here. While it's uploading, I can just sneak a peek at it: a very typical United flight ticket.

Behind the scenes, what's happening is that retrieval is reading these files and, boom, the information about this PDF appears on the screen.

This is, of course, a very tiny PDF, but Assistants can parse long-form documents, from extensive text to intricate product specs, depending on what you're building.

In fact, I also booked an Airbnb.

So I'm just going to drag that over to the conversation as well.

But just because OpenAI is managing this API does not mean it's a black box.

In fact , you can see the steps that the tools are taking right inside your developer dashboard .

So here if I go ahead and click on threads .

This is the thread that I believe we are currently working on, and see, these are all the steps, including the functions being called with the right parameters and the PDFs I've just uploaded.

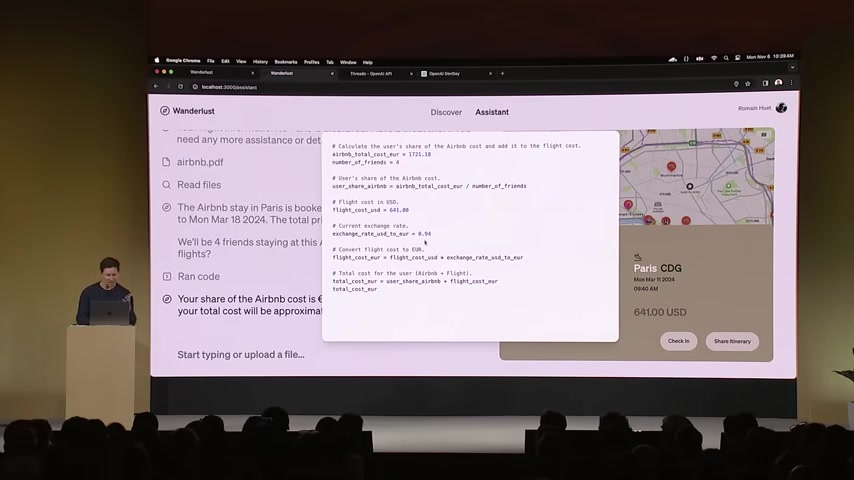

But let's move on to a new capability that many of you have been requesting for a while .

Code interpreter is now available today in the API as well.

That gives the AI the ability to write and execute code on the fly, and even generate files.

So let's see that in action. If I say here, "Hey, we will be four friends staying at this Airbnb. What's my share of it, plus my flights?"

Now here, what's happening is that code interpreter noticed that it should write some code to answer this query.

So now it's computing the number of days in Paris , the number of friends .

It's also doing some exchange rate calculation behind the scene to get this answer for us , not the most complex math , but you get the picture .
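The kind of code the interpreter might write for this question can be sketched as follows. Every number here (dates, nightly rate, exchange rate, flight price) is hypothetical; the demo's actual figures are not in the transcript.

```python
from datetime import date


def my_share_usd(airbnb_total_eur, friends, eur_to_usd, flight_usd):
    """Convert the stay to USD, split it evenly, then add one person's flight."""
    return airbnb_total_eur * eur_to_usd / friends + flight_usd


# All figures below are hypothetical; none come from the demo.
check_in, check_out = date(2023, 11, 10), date(2023, 11, 15)
nights = (check_out - check_in).days      # number of days in Paris
airbnb_total_eur = 200 * nights           # 200 EUR per night

share = my_share_usd(airbnb_total_eur, friends=4, eur_to_usd=1.10, flight_usd=900)
print(f"{nights} nights, my share: ${share:.2f}")
```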

Imagine you are building a very complex finance app that's crunching countless numbers plotting charts .

So really, for any task that you would normally tackle with code, code interpreter will work great for you.

All right .

I think my trip to Paris is sorted .

So to recap here , we have just seen how you can quickly create an assistant that manages state for your user conversations , leverages external tools like knowledge and retrieval and code interpreter and finally invokes your own functions to make things happen .

So, thank you so much, everyone.

Have a great day. Back to you, Sam.

Pretty cool , huh .

All right .

So that Assistants API goes into beta today, and we are super excited to see what you all do with it.

Anybody can enable it.

Over time, GPTs and Assistants, precursors to agents, are going to be able to do much, much more.

They'll gradually be able to plan and to perform more complex actions on your behalf .

We believe it's important for people to start building with and using these agents now to get a feel for what the world is gonna be like as they become more capable .

And as we've always done , we'll continue to update our systems based off of your feedback .

So we're super excited that we got to share all of this with you .

Today, we introduced GPTs, custom versions of ChatGPT that combine instructions, extended knowledge, and actions.

We launched the Assistants API to make it easier to build assistive experiences with your own apps.

These are our first steps towards AI agents, and we will be increasing their capabilities over time.

We introduced a new GPT-4 Turbo model that delivers improved function calling, knowledge, lowered pricing, new modalities, and more.

And we're deepening our partnership with Microsoft .

We do all of this because we believe that AI is going to be a technological and societal revolution.

It will change the world in many ways .

And we're happy to get to work on something that will empower all of you to build so much for all of us.

As intelligence gets integrated everywhere, we will all have superpowers on demand.

We're excited to see what you all will do with this technology and to discover the new future that we're all going to architect together .

We hope that you'll come back next year .

What we launched today is going to look very quaint relative to what we're busy creating for you now.

Thank you for all that you do.

Thank you for coming here today .
