
2023-08-24 07:00:44

Paavai Mavericks_MVP_Demo

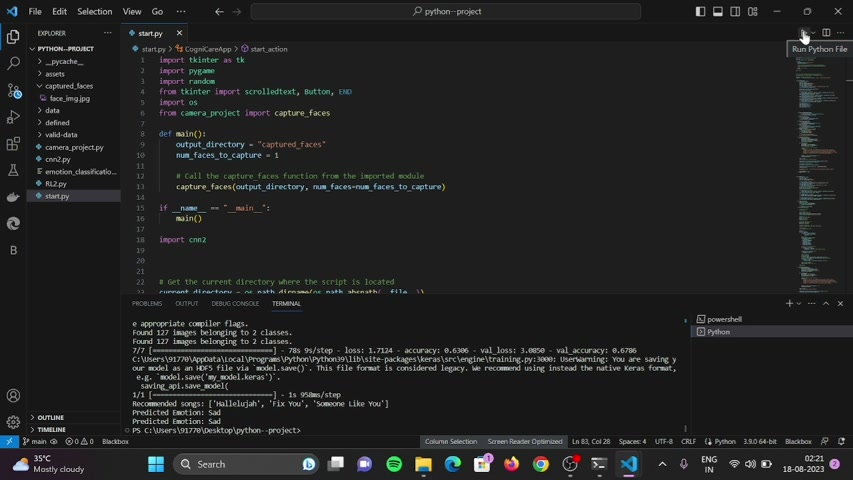

In our project, we now run the program and show a sample image of a child with Down syndrome.

Then the camera captures the image, and this is the captured image.

The image is then analyzed by the CNN model, and the child's emotion is detected.
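The detection step can be illustrated with a minimal sketch of how a classifier's raw output scores become a single emotion label. The label set `EMOTIONS` and the function names are assumptions made for illustration; the demo does not say which emotions the CNN predicts or how its output is decoded.

```python
import math

# Hypothetical label set; the demo does not list the emotions its CNN predicts.
EMOTIONS = ["happy", "sad", "angry", "neutral"]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode_emotion(scores, labels=EMOTIONS):
    """Return the most likely label and its probability."""
    if len(scores) != len(labels):
        raise ValueError("expected one score per label")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]
```

Calling `decode_emotion` on the score vector produced for a captured frame would give both a label and a confidence value, which is useful if low-confidence detections should be treated differently.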

The detected emotion is passed to our model to give the appropriate emotion layout.
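One simple way to picture the step from detected emotion to layout is a lookup table with a fallback. This is only a sketch under assumptions: the emotion names, the layout identifiers, and the confidence threshold are all hypothetical, and the demo may use a different mechanism entirely.

```python
# Hypothetical emotion-to-layout table; neither the emotion names nor the
# layout identifiers come from the demo.
LAYOUTS = {
    "happy": "playful_layout",
    "sad": "comforting_layout",
    "angry": "calming_layout",
    "neutral": "default_layout",
}

DEFAULT_LAYOUT = "default_layout"


def select_layout(emotion, confidence=1.0, threshold=0.5):
    """Pick a layout for the detected emotion.

    Falls back to the default layout when the emotion is unknown or the
    detector's confidence is below the (assumed) threshold.
    """
    if confidence < threshold:
        return DEFAULT_LAYOUT
    return LAYOUTS.get(emotion.lower(), DEFAULT_LAYOUT)
```

The fallback keeps the app usable when the detector returns a label the table does not cover or when the prediction is too uncertain to act on.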

When the app is started, the camera captures the face in a certain layout.

The layout for the appropriate emotion is displayed.

The child or individual can access any of the options, such as puzzles, live songs, or live interactions.

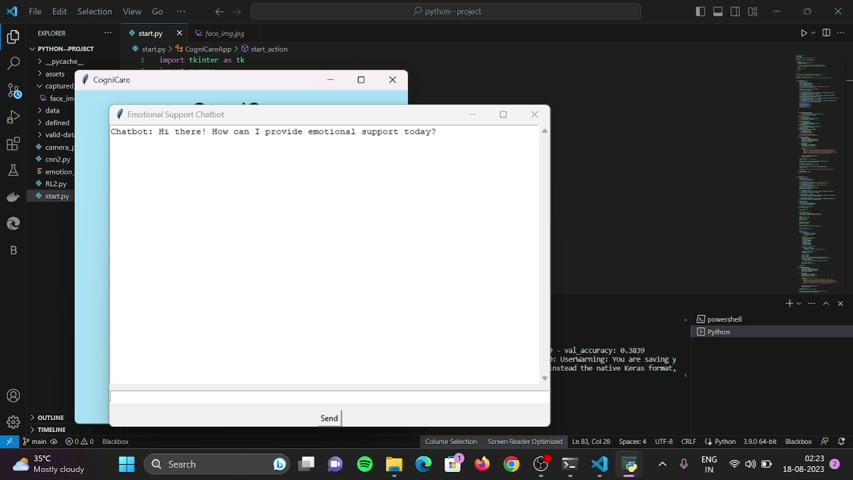

The chatbot can also be accessed by the individual.
