https://www.youtube.com/watch?v=nr1uVYzI6eg

Mind-Blowing New AI Video Generator - Text to Video AND Image to Video with Pika Labs

AI text to video has taken a massive leap forward, not only in terms of quality, but it's also completely free, for now at least, and it's easy to use.

This is the most excited I've been about a new AI tool in a long time.

By a long time, I mean a couple of months, but that's years in AI time.

So I'm talking about Pika Labs.

It's only been a couple of weeks since they launched, and there have already been some incredible results, and it's not just text to video.

They also released image to video, which has been a complete game changer, whether that's just to quickly animate a Midjourney image or to guide and create an entire film.

So I want to show you how it works, generate in a few different styles, show what I've learned so far, and also showcase the best creations I've seen.

Now, there's some other good text to video that's come out before this.

The biggest player has been Runway ML.

You can definitely get some good stuff.

Here's one of the best creations I've seen, by Nick St. Pierre.

This, my friend, is life in its purest form: a dance of eons, untamed and free, yet perfectly choreographed.

One of the main issues with Runway is cost: you get a set amount of credits each month that you can burn through extremely quickly.

It gets very expensive, very fast.

To get something like this, you need to spend a good chunk of change, but it's not just that: Pika also has the best movement out of any model yet.

With Runway, you typically get simple camera movement and not that much movement from your subject.

But Pika has realistic movement for a wide variety of scenes and subjects, for multiple characters, and for multiple types of movement, like camera movement while your subject is moving, all while keeping it relatively coherent.

Like, this looks like just a real, natural walking stride, and same with running.

This looks great.

Of course, it's not perfect, but it's a big step forward there, and the ability to prompt with images is huge.

The other best text to video that came out recently was Zeroscope, which is amazing.

But the biggest difference, again, is that Pika has image prompting.

Zeroscope is nice in that it's completely open source.

We don't know what Pika Labs was trained on or how it works.

You can use Zeroscope for free as well.

But if it's busy, it takes a long time, or it sometimes doesn't work at all.

If you have the right hardware, you can duplicate the space and run it yourself for a few dollars an hour.

Then it takes about a minute to generate each video.

That's the average generation time with Pika, for free.

I don't know how long it will be free.

I'm sure they'll move to some sort of paid model eventually.

But for now, I'm loving it.

And there are other awesome tools like Kaiber, Neural Frames, Warpfusion, Deforum, and a bunch of others.

But those all have a very particular look, with that kind of flickering decoherence, which I like in a lot of cases, and I will still be using those tools.

But Pika is able to get a lot smoother.

I want to showcase some of my favorites here.

The Dor Brothers have been consistently creating just incredible stuff.

We'll start with this one, because you may remember a bunch of AI-generated food commercials from other text to video tools a little while back.

People were posting them because of how weird they turned out.

So when you compare this quality with those from a couple of months ago, it's pretty mind-blowing.

Here's another one from the Dor Brothers that's in a Van Gogh style.

That looks really cool.

It's a long one, so I just spliced out a few parts.

To see everything, I recommend you go watch the full thing.

Honestly, I could just show everything they've been posting.

It was really hard to narrow it down.

They've been killing it.

I'll let you go follow them to check it all out.

Now, let's go to the opposite end with this more horror-themed one by Winter Garden AI.

It's reimagining H.R. Giger's works.

I'm assuming he used some of Giger's art as image prompts, or maybe he just asked it to generate in the style of H.R. Giger for some.

But with the music and editing, this one is awesome.

This is just a short clip.

The full thing is two minutes long.

Then here's a documentary on ants by Impostor Chick.

There are very few creatures.

The ants look amazing, and they end up building more and more complex technology throughout the video.

This one's great.

On YouTube, the channel is creativity risk.

She has another one on sea turtles.

And this New York flood by Dave Rayan is really well done, with the story and the music.

Leaning into the rain was a really good idea, so the inconsistencies you get are a lot less noticeable.

That's a good move with all AI video right now: leaning into scenes that naturally have a little more distortion or are more surreal or abstract.

Those end up just feeling better because your brain kind of expects them to be a little bit off.

Anyways, here's a short one by Mr Allen T that I assume a lot of time was put into.

He says the process was Midjourney, generative fill, then Pika, and then some compiling and editing on top.

My guess is he started with a close-up of the face, then used Zoom Out in Midjourney to zoom out to different scenes, and then animated it with Pika.

But it just goes to show what you can do if you get creative with all the new tools available.

I could just keep going and going.

I had a lot of fun going through all these.

So I'll finish up the showcase with just a bunch of clips I put together from a lot of different people, just to show off the range of what it can do.

So these first ones are all by Scotty Wick.

His Twitter is full of awesome examples, like animating memes and all the Star Wars characters as babies.

And he did a full cyberpunk Futurama trailer.

That's amazing.

Again, I cut a bunch of those clips short.

His and everyone's Twitters that I show will be linked down in the description.

More from the Dor Brothers.

Seriously, so much good stuff on their Twitter.

I'll let more of these play to some music.

You can skip to the next section if you want to jump into the platform.

But personally, I love watching them, and of course someone had to make a video of Elon and Zuck.

Here's them dancing.

This is one of the hardest things for AI to get right.

But this is significant progress.

Midjourney had their one-year anniversary recently, and a lot of people were posting the progress over that year: the amount it's improved, from looking like this to now being able to generate images that are indistinguishable from real photos.

All in just one year.

Imagine where this will be in a year.

Of course, video has its own unique challenges, but there's a ton of research to build off now.

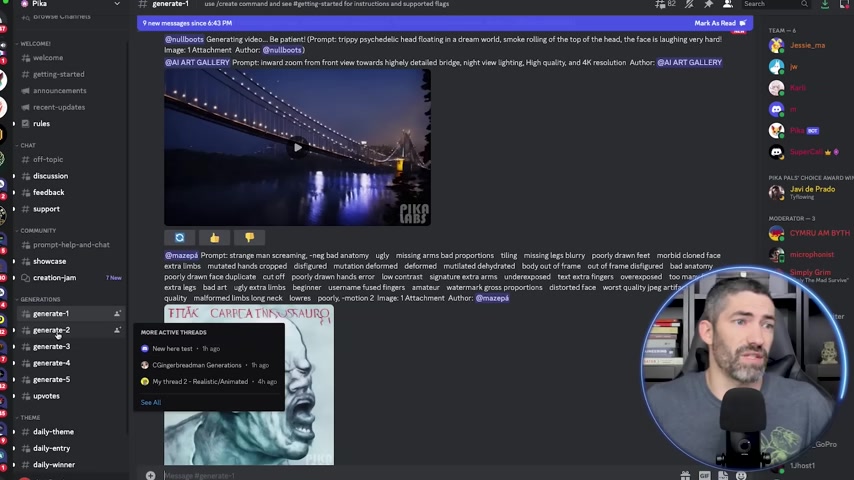

So let's jump into it.

Just like Midjourney, this is all operated within Discord right now.

It's a closed beta, but you can go to pika.art and fill out a Typeform to get access.

They've been letting people in pretty quickly: within a couple of days, they'll send you a link to join the Discord.

Then once you're in, it's set up similar to Midjourney: you can generate in these rooms.

They have daily contests with different themes, and helpful chats for discussions and support.

Really, everyone is still just experimenting and figuring things out right now, and sharing with each other what works.

They have a getting started channel with the basic instructions, which are all pretty simple.

So I'll just jump in and show you how it works.

Use the create command, just /create, then enter your prompt.

And the main parameter to use is aspect ratio.

You only use one dash instead of two like Midjourney.

So -ar 16:9, then let it generate.

The other parameters are guidance scale, for how related the image will be to the text; neg, for negative prompting; seed, for more consistent generations; and motion, for how likely you are to get motion.

They're all in this getting started channel for reference.

And just like that, our video is done, and you can just click this button to rerun the generation to get some different results.
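
As a rough sketch of how those parameters fit together, here is a small Python helper that assembles the text you would paste after /create. Only the single-dash style and -ar 16:9 come from the video; the other flag spellings (-gs, -neg, -seed, -motion), the quoting of the negative prompt, and the helper itself are assumptions for illustration, so check the getting started channel for the exact syntax.

```python
def build_prompt(text, aspect_ratio=None, guidance_scale=None,
                 negative=None, seed=None, motion=None):
    """Assemble a prompt string with single-dash parameters appended.

    Flag names other than -ar are assumed, not confirmed by the video.
    """
    parts = [text.strip()]
    if aspect_ratio is not None:
        parts.append(f"-ar {aspect_ratio}")      # e.g. 16:9
    if guidance_scale is not None:
        parts.append(f"-gs {guidance_scale}")    # assumed flag for guidance scale
    if negative is not None:
        parts.append(f'-neg "{negative}"')       # assumed quoting for negative prompt
    if seed is not None:
        parts.append(f"-seed {seed}")            # assumed flag for seed
    if motion is not None:
        parts.append(f"-motion {motion}")        # assumed flag for motion
    return " ".join(parts)


if __name__ == "__main__":
    # Paste the printed text into the prompt field of /create in Discord.
    print(build_prompt("jellyfish swimming", aspect_ratio="16:9"))
```

Keeping the seed in a variable like this makes it easier to rerun the same prompt with one setting changed at a time.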

I'll usually test out prompts one at a time to make sure the prompt is good.

Then I'll have it generate a few more and pick the best one.

But just from a super simple prompt and some music from Google's MusicLM, we get this.

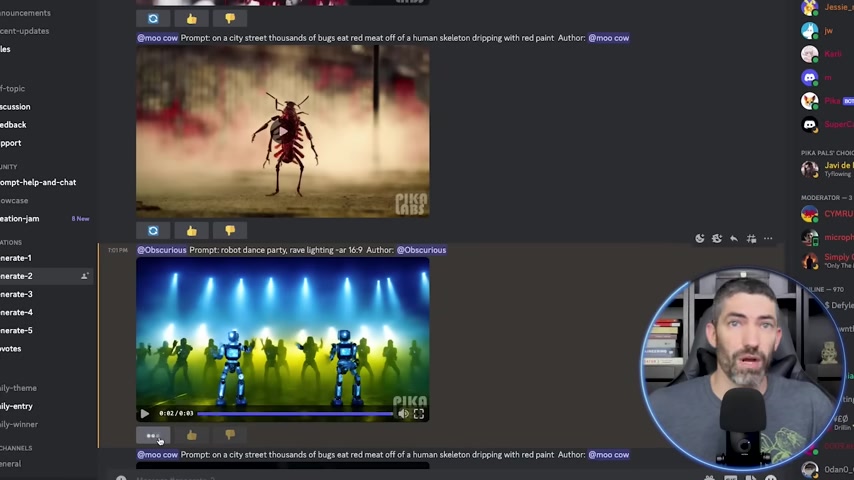

So I'll do a couple more examples here before we jump into image prompts.

It gets a little chaotic in here and hard to keep track of.

So what I've been doing is come up to the top right and click create thread, then click these three dots up here and select open in full view.

Then you'll essentially have your own little room to generate in.

Let's make a quick wildlife documentary.

So I asked ChatGPT to write me a script about the diversity of wildlife and ecosystems around the world, to be narrated by David Attenborough.

I had a little bit of back and forth to get it short and concise.

Then I generated the voiceover in ElevenLabs and created some scenes to go along with it.

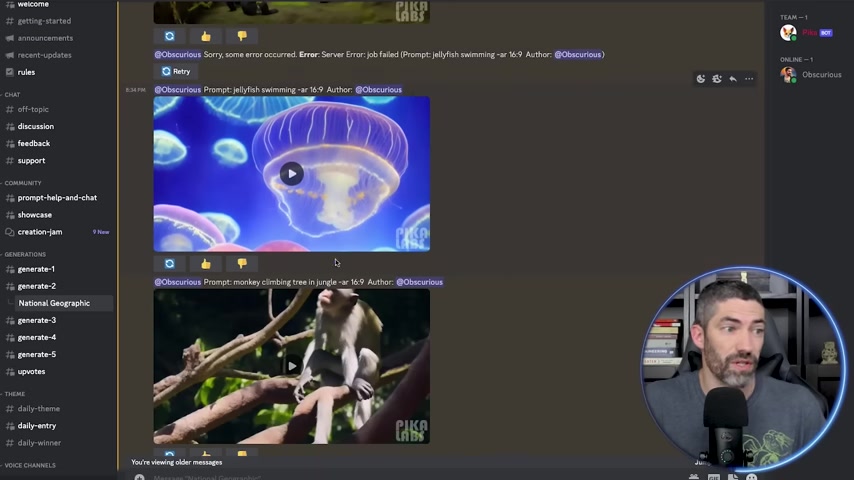

I used simple prompts for the whole thing, sometimes as simple as just "jellyfish swimming" or "tiger stalking in a jungle".

For the landscape shots, I used "aerial shot" and "time lapse".

Nothing complicated at all.

But I did generate each of them quite a few times.

Then I picked the best ones, added some music, and synced it up a little bit.

I went through it pretty quickly.

But here's the result.

Our planet, a kaleidoscope of ecosystems, demonstrates life's incredible adaptability.

The Arctic is a vast canvas of serene white, a testament to life's resilience amidst extremes.

The African savannahs, with their endless golden tapestry, reflect the grand scale of existence.

Amidst the emerald chaos of rainforests, life thrives in every inch of this vibrant labyrinth.

The oceans, those endless blues, house an intricate aquatic metropolis pulsating with life.

From the polar ice to the tropics, from the ocean depths to the highest peaks, our world embodies the profound diversity of life in its endless forms.

So I think text prompts are really fun, because you never know what's going to happen.

But if you have a vision you want to stick to and something specific in mind, image prompts are the way to go.

So with image prompting, your first frame will look very similar to your reference image.

Making generations in Midjourney and using those as your prompt is a great way to get consistent aesthetics, or just to get your scene closer to the way you want it, since you have so much control in Midjourney.

So you just type your prompt.

What I've found gets the best results is to prompt with what you want to be moving in your scene.

Then click the plus one to add your image, or you can hit tab three times, then upload the image and submit.

And this looks awesome.

So with image prompting, you get all the limitless styles and scenes you have available in Midjourney, and then some control over the animation within your scene.

It usually takes a few generations to get it to do what you want.

But I've been getting some great results, and all this has been way easier to pick up than any other text to video I've tried.

The generations are only three seconds right now.

They announced that they'll be moving that up to five seconds soon, and the quality isn't too high yet.

A lot of people have been using Zeroscope to upscale their videos, or you can use something like Topaz AI to upscale.

There are some cheaper alternatives out there too, like HitPaw, and I know there are free ones.

I haven't tested very many out, but Zeroscope is a great way to go.

I've just started my experimenting.

I'll be doing much deeper dives into different techniques and ways to control the results.

I'll be posting some of that on Twitter.

I may make another video with a more in-depth tutorial if it seems like people want it.
