https://www.youtube.com/watch?v=Mrpu7BIes7c&ab_channel=areyou1or0

AWS Cloud Security Series v6 - CloudGoat Part 4 (ELB,EC2,S3,RDS,IAM)

Hey everyone, what's up? areyou1or0 here.

So today we're going to cover another scenario from CloudGoat.

This is part of a series that I'm creating on cloud security for AWS.

This scenario is called RCE Web App.

There are two different exploitation paths for this one, because the scenario has different resources like an ELB, EC2, different buckets, and RDS, and it starts with two different IAM users, Lara and McDuck.

We can go through either of these to reach our goal.

And they have very similar scenarios.

So as a summary, if we start with the user Lara, we're going to find a load balancer and an S3 bucket.

We will check for vulnerabilities and find an RCE exploit on a web application, and we will find some confidential files.

And then we will reach a highly secured RDS database instance.

Alternatively, we can start with the McDuck user, and we will find some S3 buckets.

From there we will find some SSH keys, a private and a public key.

Then we will get into an EC2 instance from there.

We will find database credentials and connect to a database.

So it's quite cool in general, teaching you so many different services and different exploitation steps.

If you're ready, let's jump right in.

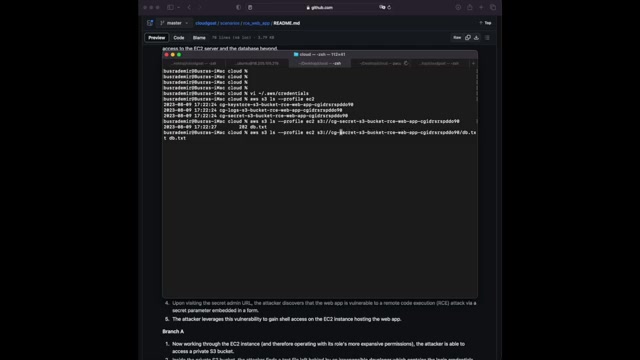

So as always, I've started with the initialization, and of course it created an access key and a secret key for both of the users, Lara and McDuck.

I just added them to my AWS configuration file.

You can also do it like this by creating a profile: aws configure --profile and the profile name that you want to create.

It's going to ask for the access key and secret key; just type them there.

So the profiles will be ready for us to use for the further exploitation steps.

As you can see, I just added both of the profiles with the following command: aws configure --profile, and then you specify the rest, of course.
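For reference, a named profile ends up as a section in the shared AWS credentials file. A minimal Python sketch of what such a section looks like, assuming placeholder key values (`render_profile` is a hypothetical helper, not part of the AWS CLI):

```python
import configparser
import io


def render_profile(profile, access_key, secret_key):
    """Return the credentials-file section for one named profile as text."""
    # interpolation=None keeps any '%' in a key from being treated specially.
    config = configparser.ConfigParser(interpolation=None)
    config[profile] = {
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


# Placeholder values only; the real keys come from the scenario output.
print(render_profile("lara", "AKIA_PLACEHOLDER", "SECRET_PLACEHOLDER"))
```

The profile name used here is then what gets passed to `--profile` in the later commands.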

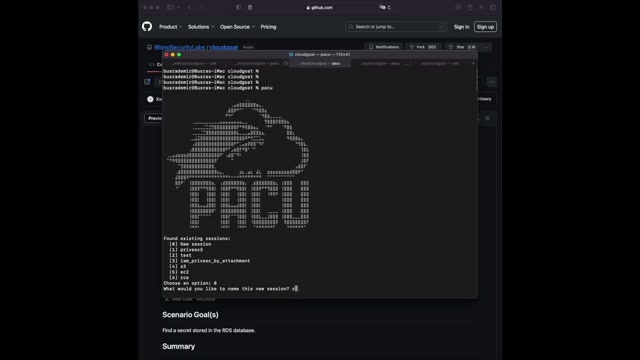

The next step for me is generally running an enumeration tool.

I'm using Pacu, which is another tool by Rhino Security.

It's super easy to use.

You're just typing the import_keys command.

I'm importing the keys for the Lara profile, and then I'm running the following command: run iam__bruteforce_permissions.

There are other options as well.

These are just my go-to commands, right?

When we run this, we will see a lot of permissions on different services.

For instance, I've seen EC2 describe instances, which will be one of the commands that will be useful.

For S3, I can get and list objects, et cetera.

So this will be useful for other services as well.

So let's do the same on the second user as well.

We're going to start with the first user, but I just want to show you that the permissions for both of the users are the same, or very similar.

This is just something to show here, because in the previous videos I said that I ran Pacu and showed the commands, but I did not show the output.

That's because it takes a while and I didn't want to edit the video, but this time I did.

So as you can see, the permissions for the second user are very similar to the first one's.

So, good to know.

We will do some actions on EC2 and S3 according to the output from Pacu. Very cool.

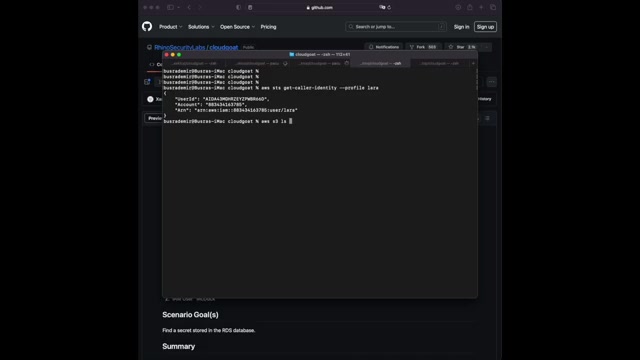

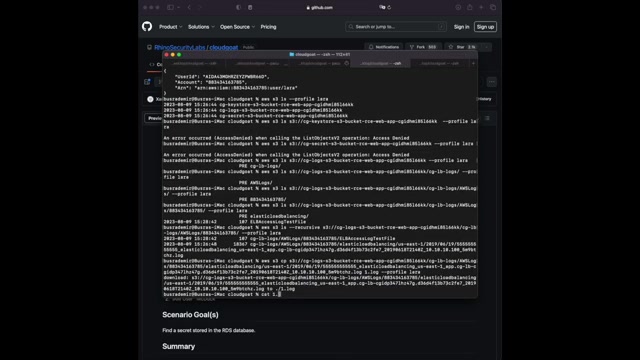

So let's start with the first user, Lara, as we've enumerated the permissions enough.

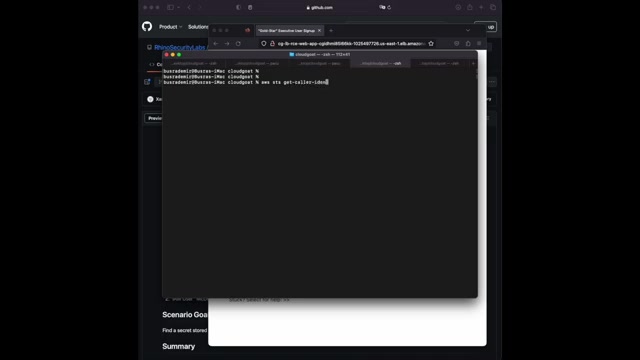

First, since we have this user, we can of course verify the user name and account ID with the following command: sts get-caller-identity.

After that, since I know that I can list the buckets and get their content, I can just run the following command, aws s3 ls, and give the profile name to see all the bucket names.

It's not necessarily the case that we have access to them or can see the content of the buckets, but we're going to try it, of course.

That's the following syntax: s3:// and the bucket name, and then the right profile name.

As you can see, we don't have access to this bucket.

Let's try another one.

That one is also not allowed.

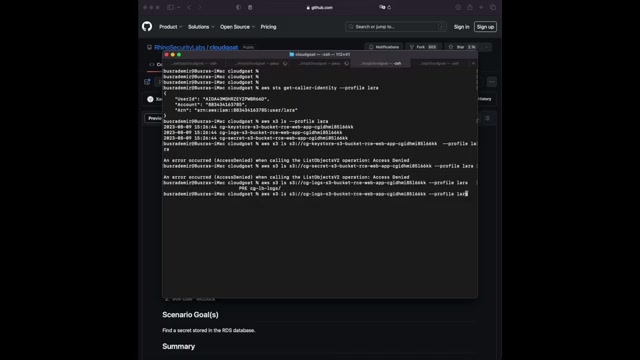

So the only one that we have permission on is the one starting with cg-logs, and the name goes on like that.

So I'm just typing this in the same syntax, giving the profile for it.

It gives me a bunch of folders, directories under the main bucket.

So we're just going to follow them down.

We can also add the --recursive option if you want to see all the files under it.

I'm just showing two different options here, in case you're not familiar with them.

So we're going through them one by one; there are different folders.

In a realistic scenario, there may be files in different folders.

So it definitely makes sense to run it recursively to see everything in one map, basically.

And from there, of course, you can check what you want to read, what you want to copy, et cetera.

And I'm showing the recursive option here, in case you're interested.

So let's see, --recursive, to show what kind of files we have.

Perfect.

It shows the folder name and then the subfolders and the file name.
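The identity check and the bucket listings described so far can be sketched as AWS CLI argument lists built in Python. This is only an illustration: the profile name and bucket name are placeholders, and `aws_cli` and `bucket_uri` are hypothetical helpers.

```python
import shlex


def aws_cli(profile, *args):
    """Build an AWS CLI argument list that runs under the given named profile."""
    return ["aws", *args, "--profile", profile]


def bucket_uri(bucket):
    """Return the s3:// URI form that `aws s3 ls` and `aws s3 cp` expect."""
    return f"s3://{bucket}"


# "lara" and "example-bucket" are placeholders, not values from the video.
commands = [
    aws_cli("lara", "sts", "get-caller-identity"),
    aws_cli("lara", "s3", "ls"),
    aws_cli("lara", "s3", "ls", bucket_uri("example-bucket")),
    aws_cli("lara", "s3", "ls", bucket_uri("example-bucket"), "--recursive"),
]
for command in commands:
    print(shlex.join(command))
```

Each list could be handed to `subprocess.run` as-is; printing them keeps the sketch runnable without credentials.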

So this one looks interesting.

That's a log file.

Maybe I can grab some information about EC2 instances, something like that.

I'm just copying it with the s3 cp command and reading the file.

As you can see, there are some URLs in here, some endpoints.

I've seen HTML and CSS; the HTML one seems interesting.

Maybe I can use that one.

Let's see, what else do we see in the file content?

We're seeing Elastic Load Balancing.

That's good to know.

We're seeing some HTTP URLs, an HTML endpoint, the CSS endpoint; good to know.

Cool.
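Instead of reading the log by eye, the requested URLs can be pulled out with a small script. A rough sketch, assuming the log contains quoted HTTP requests; the sample lines below are made up and much shorter than real load balancer log entries.

```python
import re

# Matches http:// or https:// up to the next whitespace or double quote.
URL_PATTERN = re.compile(r'https?://[^\s"]+')


def extract_urls(log_text):
    """Return the unique URLs found in log text, in first-seen order."""
    seen = []
    for url in URL_PATTERN.findall(log_text):
        if url not in seen:
            seen.append(url)
    return seen


# Hypothetical log lines; host names and paths are placeholders.
sample_log = (
    'http 2019-01-01T00:00:00Z app/example-lb/0123 198.51.100.7:4242 '
    '"GET http://example-lb.elb.amazonaws.com:80/index.html HTTP/1.1"\n'
    'http 2019-01-01T00:00:05Z app/example-lb/0123 198.51.100.7:4243 '
    '"GET http://example-lb.elb.amazonaws.com:80/style.css HTTP/1.1"\n'
    'http 2019-01-01T00:00:09Z app/example-lb/0123 198.51.100.7:4244 '
    '"GET http://example-lb.elb.amazonaws.com:80/index.html HTTP/1.1"\n'
)
print(extract_urls(sample_log))
```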

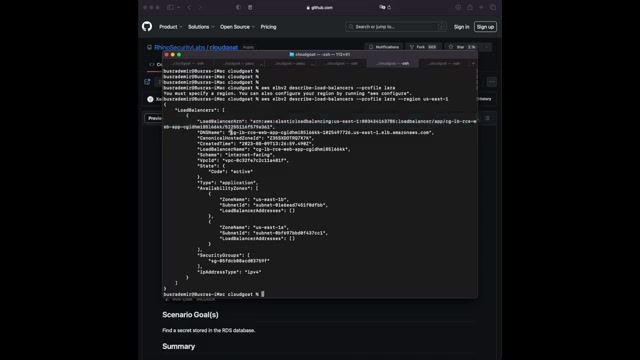

The next thing I'm going to do, before I check it in my browser, is run the elbv2 command describe-load-balancers.

Of course, I need to attach the profile to see the load balancer's information, the DNS name and everything.

You can see the DNS name for the load balancer.
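The DNS name can also be picked out of the JSON that `aws elbv2 describe-load-balancers` returns. A small sketch, assuming a trimmed, made-up response; real output carries many more fields.

```python
import json


def load_balancer_dns_names(describe_output):
    """Return (name, DNS name) pairs from describe-load-balancers JSON text."""
    data = json.loads(describe_output)
    return [
        (lb.get("LoadBalancerName"), lb.get("DNSName"))
        for lb in data.get("LoadBalancers", [])
    ]


# Hypothetical response; the names are placeholders.
sample = json.dumps({
    "LoadBalancers": [
        {
            "LoadBalancerName": "example-lb",
            "DNSName": "example-lb-123456.us-east-1.elb.amazonaws.com",
            "Scheme": "internet-facing",
        }
    ]
})
print(load_balancer_dns_names(sample))
```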

Of course, we can take the DNS name and check it in the browser, and as you can see, we're seeing a website here.

For more information, we can go to that HTML endpoint that we've seen in the logs, and once we go there, we find a command option here, so we can run our commands, for instance whoami.

It's basically allowing us to run commands on the server.

The classic Unix syntax works too, like with the id command.

If we add another command, it basically runs it for us and separates the commands.

That's great.

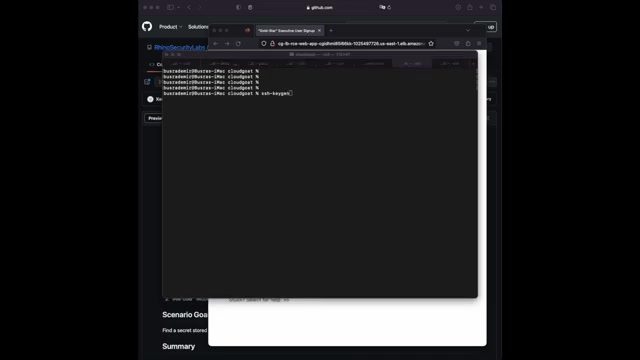

What we can do is, on my Mac, I'm just going to create an SSH key.

For this specific example, we're also specifying the type as ed25519.

And we're creating it like that.

What I'm going to do is read the public key and add it to the server's authorized_keys file, so that I can connect to it later using my private key.

So I'm just going to write the commands: echo, then the public key, and then append it to the ubuntu user's authorized_keys file.

You can also verify that the file is written properly.

I mean, it gives the green color, but of course it's better to just read it like that and not assume that it's error free.

Let's also check the public IP address of this server.

If you write curl ifconfig.me, it will give it to us.

What we can do is go to that directory in my current user's home folder.

We will go to .ssh and pass the newly created private key.

ubuntu is the default user for Ubuntu servers.

We're going to write the IP address of this EC2 instance, say yes, and we're connecting directly, as our public key is in the EC2 instance's ubuntu user's authorized_keys file.

Cool.

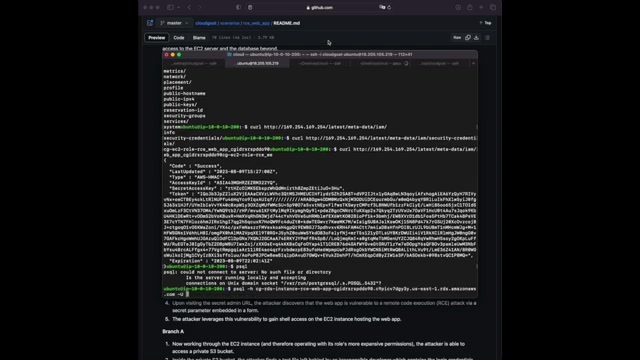

The next step, as always: we need to check the metadata API using the following curl command, with the same classic IP address that you always write.

And then the endpoint, latest; from there you can check for meta-data and user-data.

I'll start with the user data, which gives me some commands, basically like a command history, including psql, and you'll see the full credentials and everything here.

That's one way to do it.
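The metadata endpoints mentioned here follow a fixed layout under the link-local address 169.254.169.254. A minimal sketch of the URLs, assuming the instance answers plain requests without a session token, as the curl commands in the video suggest; `imds_url` and `fetch` are hypothetical helpers, and `fetch` only works from inside an EC2 instance.

```python
from urllib.request import urlopen

IMDS_BASE = "http://169.254.169.254/latest"


def imds_url(*parts):
    """Join path parts onto the instance metadata service base URL."""
    return "/".join([IMDS_BASE, *parts])


def fetch(url, timeout=2):
    """Fetch a metadata URL as text; not called here, since it needs an EC2 host."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode()


USER_DATA_URL = imds_url("user-data")
ROLE_LIST_URL = imds_url("meta-data", "iam", "security-credentials/")
print(USER_DATA_URL)
print(ROLE_LIST_URL)
```

Appending a role name to the second URL returns the temporary credentials for that role.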

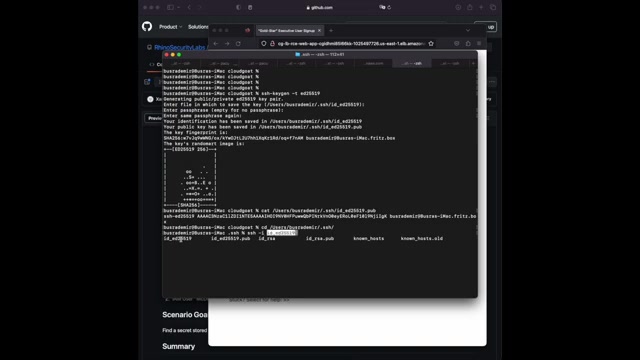

The other way to reach our goal is to start with the McDuck user.

We're doing the exact same steps.

First, checking with sts get-caller-identity to see the account ID and the user name, to see everything is good; the permissions are very similar to the first user's.

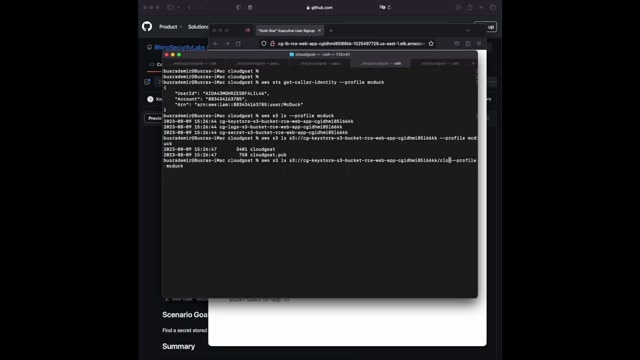

So we're going to check for the buckets.

We're going to see which buckets' content we have the permission to see.

We're going to check one by one which ones we can see, with the same syntax: s3:// and the bucket name.

Let's write them down and see if it's going to work.

We're starting with the keystore.

Let's see if it's working.

Yes, it does work.

We do have permission on it.

So what we're going to do is copy both of the files.

It seems like these are the public and private keys.

Of course, we're going to use aws s3 cp, which is the copy command.

Cool.

Let's save it to our current working directory.

And let's also copy the public key file.

I'm assuming, based on the .pub file extension, that these are the private and public keys, but we will check the file content, of course.

Yes, it's a private key, and this is a public key, as it seems. Very cool.

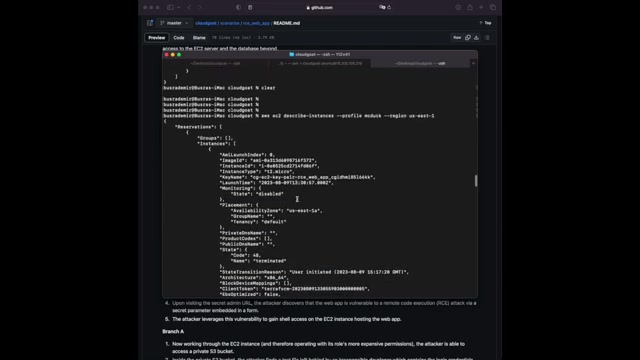

What we can do here is first run ec2 describe-instances for running instances, so that we can actually find the public IP address of the EC2 instance that's in the running state.

We're finding it in here.

Public IP address.

That's very cool.
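Finding the running instance's public IP in the `aws ec2 describe-instances` output can be scripted as well. A sketch under the assumption of a trimmed, made-up response; the instance IDs and addresses are placeholders.

```python
import json


def running_public_ips(describe_output):
    """Return (instance id, public IP) for running instances that have one."""
    data = json.loads(describe_output)
    found = []
    for reservation in data.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            state = instance.get("State", {}).get("Name")
            public_ip = instance.get("PublicIpAddress")
            if state == "running" and public_ip:
                found.append((instance.get("InstanceId"), public_ip))
    return found


# Hypothetical response with one running and one stopped instance.
sample = json.dumps({
    "Reservations": [
        {
            "Instances": [
                {
                    "InstanceId": "i-0123456789abcdef0",
                    "State": {"Name": "running"},
                    "PublicIpAddress": "203.0.113.10",
                },
                {
                    "InstanceId": "i-0fedcba9876543210",
                    "State": {"Name": "stopped"},
                },
            ]
        }
    ]
})
print(running_public_ips(sample))
```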

Then, since I have the public and private key for this instance, and I also know the public IP address, I can just connect to it like that.

That's very cool.

It's very similar to the previous example with the Lara user as well.

From there, I will do the exact same thing with curl.

We're going to check the metadata API; let's continue with the meta-data endpoint.

Let's see.

Of course, we're going with iam; what I'm looking for is basically security-credentials.

Let's copy that as well.

And we're seeing a role here.

We're also copying and pasting that to see the credentials.

Perfect.

So as you know, we're going to add them to our credentials file, just like that, and create a profile.

From that profile, of course, we can run the enumeration again to see the results.

What kind of permissions do we have on this EC2 instance profile?
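Turning the role credentials from the metadata endpoint into a profile is mostly a matter of renaming fields. A minimal sketch, assuming the usual `AccessKeyId`, `SecretAccessKey`, and `Token` fields in the credential document; the values and the profile name are placeholders, and `role_profile` is a hypothetical helper.

```python
import configparser
import io
import json


def role_profile(profile, credentials_json):
    """Render a credentials-file section from an instance role credential document."""
    creds = json.loads(credentials_json)
    config = configparser.ConfigParser(interpolation=None)
    config[profile] = {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        # Temporary role credentials are only valid together with the token.
        "aws_session_token": creds["Token"],
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


# Hypothetical credential document with placeholder values.
sample = json.dumps({
    "Code": "Success",
    "AccessKeyId": "ASIA_PLACEHOLDER",
    "SecretAccessKey": "SECRET_PLACEHOLDER",
    "Token": "TOKEN_PLACEHOLDER",
})
print(role_profile("ec2-role", sample))
```

Unlike the two user profiles, this one stops working once the temporary credentials expire.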

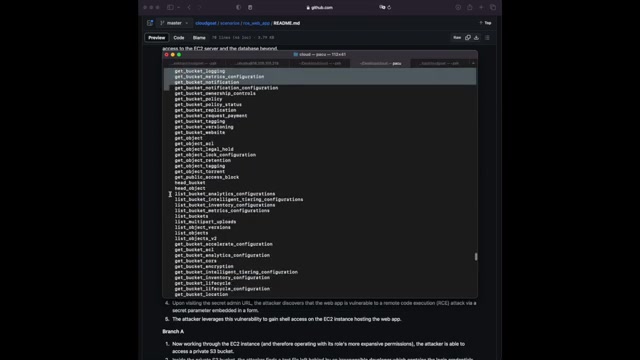

Let's have a quick look; as you can see, there are different permissions that we have on EC2 and S3.

We can get and list objects.

Many, many permissions that we have.

Good to know.

Let's continue based on the permissions that we have.

So now we can check for buckets again.

Maybe we have more access using this profile.

So let's attach the profile and see the buckets, and let's try to see the content of these buckets.

Let's start with the secret one.

It looks juicy; maybe there's some cool information, and yeah, we can list it.

There's a db.txt file; let's save it to our current working directory and read the file content.

Of course, we need to use the cp command instead of ls, as we're trying to copy the file; it worked, so we can just read the file.

And as you can see, there's the DB name, user name, and password, completely against best practices.

But this is a CTF, so here we are.

So since we are connected to the EC2 instance, we can use it to connect to the database.

We're going to use psql for the Postgres connection.

For that, we also need to check the information on the DB instances.

Of course, we're going to use the rds command for that, and then describe-db-instances, very similar to the EC2 one that we've done so far.

And we need to check the databases in this way.

Let's see, what do we have here for the DB name?

Here we go: the DB instance identifier, the address.

So the address should work for me.

I'm going to copy that and write it in here.

Then the user name and the database name.

I also need to add -U and the user name; what was that in the db.txt file?

Here we go, cgadmin, and then the database needs to be defined as well, with -d.

Let's write this down, and it will automatically prompt us for a password.

We already have it, so we'll just copy and paste that one as well.

Perfect, we're connected.
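The psql command line assembled in this step combines the endpoint from `aws rds describe-db-instances` with the user and database name from the file. A sketch, assuming a trimmed, made-up response; the host and database name are placeholders, and only the user name cgadmin comes from the walkthrough.

```python
import json
import shlex


def psql_command(describe_output, user, database):
    """Build a psql argument list for the first DB instance in the RDS response."""
    instance = json.loads(describe_output)["DBInstances"][0]
    endpoint = instance["Endpoint"]
    return [
        "psql",
        "-h", endpoint["Address"],
        "-p", str(endpoint["Port"]),
        "-U", user,
        "-d", database,
    ]


# Hypothetical response; the identifier and address are placeholders.
sample = json.dumps({
    "DBInstances": [
        {
            "DBInstanceIdentifier": "example-db",
            "Endpoint": {
                "Address": "example-db.abcdefgh.us-east-1.rds.amazonaws.com",
                "Port": 5432,
            },
        }
    ]
})
print(shlex.join(psql_command(sample, "cgadmin", "example_db")))
```

psql prompts for the password when it is not supplied another way, which matches what happens in the video.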

So we can look for the databases and the tables.

We can look for the tables under this database.

And as you can see, we find one called sensitive information; you know the drill, select all from the table name.

What do we have? We have the super secret passcode and its value.

Since it's a CTF, we found the values just like that; in a real-world scenario, it's probably not going to be like that, but you can still extract a lot of information, exposed data, et cetera, right?

So it was not such a long video, but it was such a cool exercise in CloudGoat.

I don't think it was really hard.

I think it was just long, and it requires you to know different services.

We focused a lot on EC2, S3, or IAM in the previous exercises.

So this one also involves a bit more, like RDS and load balancers, et cetera.

So I think it was a cool one.

So, right.

This video ends just like that; drop a comment and let me know if you want to see specific content in the next video.

Cheers, guys.
